Radar Data Processing Just Got Better

Researchers at the Georgia Tech Research Institute recently combined machine learning, field-programmable gate arrays (FPGAs), graphics processing units (GPUs), and a novel radio frequency image processing algorithm to cut radar signal processing time and costs by two or three orders of magnitude. The advance could ultimately benefit real-time analysis of radar imaging data from potential enemy targets, autonomous vehicles and drones, and might also be used for quantum sensing.

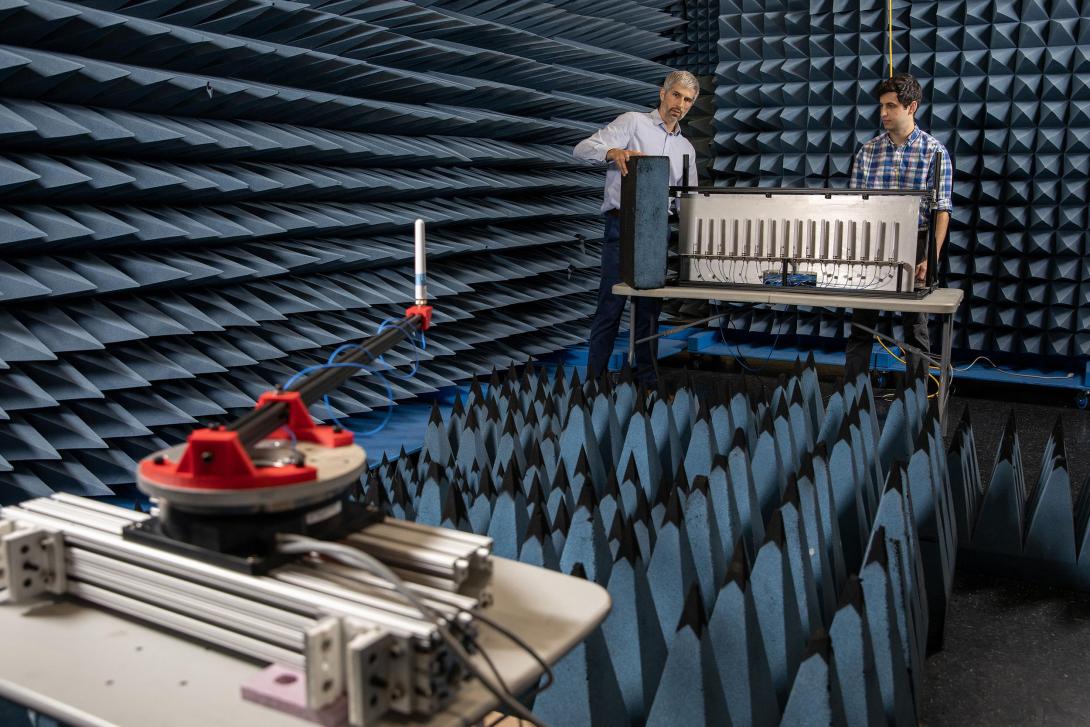

The capability has been tested on a 16-element digital antenna array. While the research project has so far focused on real-time imaging operations on vast amounts of data, it also supports the conventional beamforming operations performed by phased arrays, according to a Georgia Tech press release. The release explains that as the number of elements on phased array antennas continues to grow, so does the amount of data to be processed.

The amount of data coming from numerous radar sources, such as autonomous vehicles and drones, can be overwhelming, but pushing the processing to the front where the raw data pours in allows the rapid extraction of relevant data. Hundreds or even thousands of subarrays can generate terabytes of data every second, according to Ryan Westafer, a principal research engineer at the Georgia Tech Research Institute. But, of course, not all of that data is useful. “You’re throwing data away as you go. You’re saying I’m looking for this, and I’m not looking for that, and everything is a decision along the way. Every bit is a decision. And every bit you choose to ignore is another type of decision.”

The research team’s solution gains efficiency in part by processing on the “edge” or on the antenna subarrays, making data available immediately rather than waiting for it to be processed by backend servers. “This is processing that typically was done in rack-based computing in the past. So that’s the transition: new chipsets and software and firmware, and some of the mapping that we were demonstrating to take those high-level algorithms and map them down into highly efficient, high-speed computations was the focus,” Westafer told SIGNAL Media.

The research might also be used for quantum sensors, such as chip-scale atomic clocks, Westafer suggested. “These sensors, the methodology that we’ve applied, are not limited to even conventional arrays. We’re very interested in the longer-term convergence. We’re looking at arrays of quantum sensors,” he said. “It’s higher sensitivity; it gives us a wider field of view, so we are looking, architecturally, at these longer-term horizons.”

Various components of the team’s solution are integrated into a system on a chip. “Radio frequency data converters, and the general purpose application processing unit, real-time processing unit, it’s all available inside a single chip. And then we had a graphics processing unit co-located right there but not on-chip yet,” Westafer explained. “The highest rate processing is done by the FPGA, and one of the things we used was a high-level synthesis pathway to map the initial operations on the data directly in that. So, we could divide up our tensor operations that we need to perform.” Tensor operations are computations on multidimensional arrays of numbers, a generalization of vector and matrix arithmetic.
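The idea of dividing up a tensor operation can be illustrated with a small sketch. This is not the team's algorithm or its high-level synthesis code; it is a minimal Python example, under assumed sizes (3 output beams, 16 elements, 8 time samples, subarrays of 4 elements), showing that a contraction over antenna elements can be computed piecewise per subarray and then summed. The function names `contract` and `contract_by_subarray` are hypothetical.

```python
import random


def contract(weights, samples):
    """Full contraction: out[b][t] = sum over e of weights[b][e] * samples[e][t]."""
    n_elem = len(samples)
    n_time = len(samples[0])
    return [
        [sum(w_row[e] * samples[e][t] for e in range(n_elem)) for t in range(n_time)]
        for w_row in weights
    ]


def contract_by_subarray(weights, samples, subarray_size):
    """Same contraction, computed one subarray at a time and accumulated."""
    n_elem = len(samples)
    n_time = len(samples[0])
    total = [[0j] * n_time for _ in weights]
    for start in range(0, n_elem, subarray_size):
        stop = min(start + subarray_size, n_elem)
        # Each subarray only needs its own slice of the weights and samples.
        partial = contract([row[start:stop] for row in weights], samples[start:stop])
        for b, row in enumerate(partial):
            for t, value in enumerate(row):
                total[b][t] += value
    return total


def random_complex():
    return complex(random.uniform(-1, 1), random.uniform(-1, 1))


# Assumed, illustrative sizes; only the 16 elements come from the article.
random.seed(0)
N_BEAMS, N_ELEMENTS, N_TIME = 3, 16, 8
weights = [[random_complex() for _ in range(N_ELEMENTS)] for _ in range(N_BEAMS)]
samples = [[random_complex() for _ in range(N_TIME)] for _ in range(N_ELEMENTS)]

full = contract(weights, samples)
split = contract_by_subarray(weights, samples, subarray_size=4)

# The two results should agree up to floating-point rounding.
max_error = max(
    abs(full[b][t] - split[b][t]) for b in range(N_BEAMS) for t in range(N_TIME)
)
print(f"largest difference between full and split results: {max_error:.2e}")
```

Because each partial result depends only on one subarray's samples, work of this shape can in principle be placed close to where the data is produced, with only the much smaller partial sums passed along.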

The technology also reduces the need for additional coding, such as hardware description language, a specialized computer language associated with electronic circuits. “The very first reduction of the data is occurring in the FPGA, and that is prescribed by a high-level synthesis pathway that enables us to more readily adapt the type of algorithm that we’re implementing. So, if we want to change the operation, we’re not going in and rewriting a bunch of FPGA hardware description language, HDL code, but we’re actually able to express it in high-level language, high-level synthesis, and direct that into the FPGA,” Westafer explained.

Westafer said that other researchers in industry and elsewhere are working on similar capabilities tailored to specific needs and requiring a lot of effort to implement. “They are usually niche developments for specific applications. There’s lots of tuning and training—and that’s actually part of the point—there’s lots to do after you’ve implemented it,” he said. “But I think this lowers the barrier to implementation.”

He also emphasized that the research team built the capability in a short time. They spent about a year on simulations and calculations and about six months “demonstrating it with a full array and over-the-air measurements,” among other actions. The researchers built on advances made by industry, which “enabled us to work that whole range of all those types of processing within a short timeframe” for a capability that “didn’t exactly exist before.”

The research offers a glimpse into the future, Westafer indicated. “The future people would like to see is that you could push those adaptations into the sensors, into the hardware, as reprogramming versus having to have engineers recode things at that level and then stop testing, that sort of thing. We didn’t go all the way there, but we showed that we were able, in a tight timeframe, to implement these new types of algorithms like this in the hardware.”

Westafer suggested the technology could soon be tested in the field by either adapting or replacing an automotive radar and assessing the advantages, although no plans are currently in place. “I think [technology readiness level] 6 experimentation is on the horizon for us and for others. It would take a few months to get something to a particular exercise and then prepare to go out there and do that and get all the requirements and everything, so allow about a year to take something to the [technology readiness level] 6 testing.”

Although the capability could be useful commercially, Georgia Tech personnel are currently focused on using it for further research. “We don’t develop these things and have them go to waste. We are currently working with this technology for various programs,” Westafer said. “So, we’re using it, but I would say we’re not pushing it into a specific single mission or use case. I’m not pushing it from a commercialization perspective.”

He added, however, that the research team is talking to the Army and to the Defense Advanced Research Projects Agency (DARPA) about the technology. The research started with the DARPA-funded Tensors for Reprogrammable Intelligent Array Demonstrations (TRIAD) program, one part of a larger program known as Artificial Intelligence Exploration, which was publicly announced in 2018.

The Artificial Intelligence Exploration program was a DARPA-wide effort designed to help the United States remain ahead of competing nations in artificial intelligence research. It offered up to $1 million for a variety of 18-month research efforts. TRIAD, specifically, was designed to allow phased array antennas to pick up signals from only one direction and ignore signals from other directions.
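Picking up signals from one direction while rejecting others is the job of conventional beamforming. The sketch below is a textbook phase-shift-and-sum beamformer, not TRIAD's method. It assumes a uniform linear array of 16 elements (matching the test array mentioned earlier) with half-wavelength spacing and a single narrowband plane-wave source; the spacing, the angles, and all function names are illustrative assumptions.

```python
import cmath
import math

NUM_ELEMENTS = 16          # matches the 16-element array in the article
SPACING_WAVELENGTHS = 0.5  # assumed half-wavelength element spacing


def steering_vector(angle_deg, n=NUM_ELEMENTS, d=SPACING_WAVELENGTHS):
    """Per-element phase of a plane wave arriving from angle_deg (0 = broadside)."""
    phase_step = 2 * math.pi * d * math.sin(math.radians(angle_deg))
    return [cmath.exp(1j * phase_step * k) for k in range(n)]


def beamform(snapshot, look_angle_deg):
    """Apply conjugate steering weights to one snapshot and average the result."""
    weights = steering_vector(look_angle_deg, n=len(snapshot))
    return sum(w.conjugate() * x for w, x in zip(weights, snapshot)) / len(snapshot)


def array_response(source_angle_deg, look_angle_deg):
    """Output magnitude for a unit-amplitude source when steered to look_angle_deg."""
    snapshot = steering_vector(source_angle_deg)
    return abs(beamform(snapshot, look_angle_deg))


# Steer the array to 20 degrees and compare two hypothetical source directions.
on_target = array_response(source_angle_deg=20.0, look_angle_deg=20.0)
off_target = array_response(source_angle_deg=-40.0, look_angle_deg=20.0)

print(f"source in the look direction:   {on_target:.3f}")
print(f"source from another direction:  {off_target:.3f}")
```

When the source lies in the look direction, the per-element phases cancel and the contributions add coherently; from other directions they add with mismatched phases and largely cancel, which is why the second value comes out much smaller than the first.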
