The Great Overcomplication

Winner of the 2024 The Cyber Edge Writing Contest.

Producers and consumers of technology must make a drastic and fundamental change to mitigate current and future cyber threats.

In the last 20+ years, we have all witnessed an explosion of information technology capabilities permeating our personal and professional lives. We have also seen a corresponding escalation of cyber threats and attacks across all those capabilities and even across the tooling used to protect against attacks. The adversary shows no sign of slowing down or getting any less creative and insidious with their malicious actions, and every new technology we field is compromised soon after or even before wide adoption occurs.

But we always act in the way our humanity compels us: We jump head-first into new technology with minimal forethought and then rush to apply bandages to fix problems that likely shouldn’t exist in the first place. Those bandages, seemingly, always involve more, faster and unavoidably more complex technology. Yet when we build a complex system to solve a problem, we rarely pause to consider whether the problem warrants the level of complexity we are introducing.

We are drowning in complexity, yet we keep doing the same thing and expecting a different outcome. With every passing year, we become more exposed and more vulnerable, not less. We keep pinning our hopes on a magic cyber bullet that will come and save us from ourselves, but frankly, the chances of that seem close to zero. We keep layering technology on top of technology, hoping we can plug all the leaks, while the very complexity we add creates more vulnerabilities, and harder-to-find ones.

If we have any hope of getting out from under a situation that threatens us all, we must critically evaluate whether many of the problems we are trying to solve are self-imposed, caused by a form of technical debt: the malignant patterns of overcomplication we have fallen into during this ongoing period of technological adolescence.

My call to action is for producers and consumers of technology to internalize that what we’ve been doing isn’t working. We need to slow down and field and rely on less technology, not more. We must discourage the unnecessary application of technology, especially technology that imposes needless complexity for merely incremental benefits and hasn’t been vetted against security-hardening standards that have yet to be written. I further propose that technology producers be accredited to professional standards, as doctors, lawyers and pilots are, since so much technology has direct life and safety implications. These standards should be enforced with real consequences for malpractice: Anyone who loses their accreditation would no longer be allowed to produce or distribute technology.

I hope this mindset will force us to engineer and refactor systems deliberately, confronting the reality that complexity itself is a tangible resource that must be managed and a liability that must be minimized, because complexity is cybersecurity’s worst enemy.

These are not simple asks. A vastly complex geopolitical and economic web of interdependencies seems to mandate infinite corporate growth and ever-increasing technological consumption and production. It is easy to say, “Slow down and make fewer, better things,” but far harder to actually do. Yet to truly address the future cyber threat landscape, I see no pathway to success other than reducing our attack surface by deliberately limiting the technology we widely use.

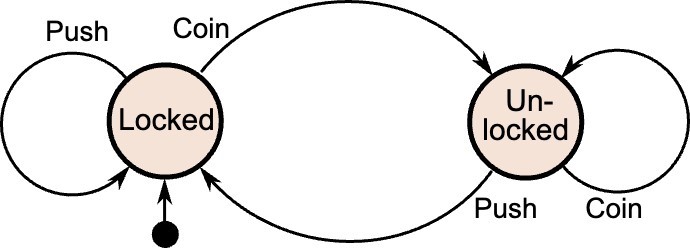

Complexity can be well represented by the “finite state machine” construct, an abstraction of a system that represents every possible state/input/output, like the example of a coin-operated turnstile (from https://en.wikipedia.org/wiki/Finite-state_machine):
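To make the turnstile concrete, here is a minimal Python sketch of its transition table. The names `State`, `Event`, `TRANSITIONS` and `run` are illustrative choices, not taken from any particular library or from the source diagram. The point it shows is that the turnstile’s entire behavior fits in four rows and can be checked exhaustively:

```python
from enum import Enum


class State(Enum):
    LOCKED = "locked"
    UNLOCKED = "unlocked"


class Event(Enum):
    COIN = "coin"
    PUSH = "push"


# Every (state, input) pair maps to exactly one next state:
# the whole behavior of the turnstile fits in four rows.
TRANSITIONS = {
    (State.LOCKED, Event.COIN): State.UNLOCKED,    # paying unlocks the arm
    (State.LOCKED, Event.PUSH): State.LOCKED,      # pushing while locked does nothing
    (State.UNLOCKED, Event.COIN): State.UNLOCKED,  # extra coins change nothing
    (State.UNLOCKED, Event.PUSH): State.LOCKED,    # passing through relocks it
}

# Exhaustiveness check: every state has a defined response to every input.
assert all((s, e) in TRANSITIONS for s in State for e in Event)


def run(events, start=State.LOCKED):
    """Feed a sequence of events through the machine; return every state visited."""
    state = start
    trace = [state]
    for event in events:
        state = TRANSITIONS[(state, event)]
        trace.append(state)
    return trace


print([s.name for s in run([Event.PUSH, Event.COIN, Event.PUSH])])
# ['LOCKED', 'LOCKED', 'UNLOCKED', 'LOCKED']
```

Because there is exactly one entry for each of the 2 × 2 state/input pairs, a reviewer can verify every possible behavior by inspection. That is the property the far larger systems discussed next lose.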

With this simple example in mind, consider what the state machine would look like for a multicloud, microservices-based software application with a distributed, synchronized, active/active data store running on heterogeneous hardware platforms, with artificial intelligence/machine learning (AI/ML) capabilities layered in. With so many variables, inputs/outputs and interdependent conditions, I posit that this architecture, for all practical purposes and certainly in cybersecurity terms, is an infinite state machine. The attack surface of such a system is also almost infinitely deep and broad.
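A rough way to see why such a system behaves like an infinite state machine is simply to count. When components can change state independently, the joint state space is the Cartesian product of their individual state spaces, so the counts multiply rather than add. The sketch below assumes, purely for illustration, 50 services with only four coarse states each; these figures are hypothetical and ignore data, network and AI/ML model state entirely:

```python
from math import prod


def combined_states(component_state_counts):
    """Size of the joint state space of independently varying components.

    Each combination of individual component states is a distinct state of
    the whole system, so the total is the product of the per-component counts.
    """
    return prod(component_state_counts)


# One turnstile has 2 states; a bank of ten independent turnstiles has 2**10.
print(combined_states([2] * 10))  # 1024

# Hypothetical: 50 microservices, each modeled with only 4 coarse states
# (e.g. starting, healthy, degraded, failed).
print(f"{combined_states([4] * 50):.2e}")  # 1.27e+30
```

Even under these deliberately crude assumptions, the state space is far beyond anything that can be enumerated or reviewed the way the turnstile’s four rows can, and real systems add many more dimensions than this.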

The modern world runs on these vastly complex state machines, and we’ve seemingly done a pretty good job of managing that complexity, since most well-architected systems do not fail catastrophically most of the time. But how confident are we that systems this complex are predictable and secure enough to earn the trust we put in them, especially systems that directly affect life, health and safety? Whether subtle or catastrophic, failures are becoming increasingly difficult to troubleshoot and recover from without additional layers of complexity to provide deep tracing and resilience/recovery mechanisms. The more complex a system, the larger, deeper and more subtle its attack surface becomes, as evidenced by some recent, highly sophisticated cyberattacks and especially by the accelerating discovery of protocol- and hardware-level vulnerabilities.

Complexity exists and has negative impacts, no matter how abstracted it is from a consumer of technology. The public cloud has undeniable benefits, but it has unfortunately made it very easy to build vastly complex systems. For an end user, this can be as simple as clicking a few buttons in a hyperscaler’s web console, but the results of those clicks can be vastly complex. Infrastructure as a Service, Platform as a Service and Software as a Service abstractions make it far too easy to ignore that complexity, but it exists nonetheless, no matter where one sits on the shared responsibility model. It must be managed and understood by every stakeholder in the system, especially when that system fails or is breached.

Another example of this complexity problem is AI/ML. Our brains are not suited to rapid context switching and correlation of complex data and events, so we created AI/ML to help us make sense of the vast amounts of data we choose to collect. We collect an obscene amount of unnecessary data because there are no real consequences for doing so, and the public either doesn’t understand the risks or has resigned itself to the practice as inevitable. The benefits of responsible, well-architected data analytics are undeniable, but so are the negative results.

Any time analytics are introduced into a system, and especially when they take the form of highly complex, nondeterministic mechanisms with near-infinite states, we must ask whether the benefits are worth the complexity and what liabilities it introduces. For any system under consideration, we need to take a breath and ask whether less data and more compartmentalized processes could reduce the scale of the problem and thus obviate the need for a particular instance of AI/ML entirely. We are starting to see the unintended consequences of knee-jerk use of this technology: myriad new problems that require even more complexity to try to solve.

These consequences appear faster every day, whether in the form of data leakage, unintentional or intentional malinformation, or automated systems used in offensive cyber operations. AI/ML-based systems are very good at finding subtle things that humans can’t, which is yet another reason to build systems at a level of complexity that humans can readily understand. We are all but making it easy for attackers to exploit the noise in systems we ourselves create. Meanwhile, we implement protections designed for legacy attackers and hope they will be effective. Our protections are designed to repel muskets, while our adversaries wield automatic weapons that are more accurate, more powerful and faster than anything we can hope to defend against.

As the zero-trust cybersecurity movement continues to evolve and forces us to take a cold, hard look at how we have designed systems and data architectures, I fear that the problems it reveals, many of them caused by unnecessary complexity, will seem overwhelming. At the same time, I am hopeful that these efforts will have the secondary benefit of opening our eyes and motivating us to build more elegant, manageable and securable systems. As we build new systems, refactor existing ones and inspect them deeply through a secure-by-design lens, we have the opportunity to improve by taking a step back and asking fundamental questions such as:

- Is a system’s existence even necessary?

- What is the simplest and most elegant way to provide core capabilities?

- Do the benefits of a system warrant the complexity necessary to maintain and secure it?

- Are changes or modernizations to a system substantially valuable, and are they worth the risks?

- Is the state machine of this system understood to a level of rigor appropriate for the system’s purpose/criticality?

I do not see a way out of this security quagmire without forcing ourselves to pause and ask those hard questions at every step of systems design, even if it slows us down in the short term and limits what technology we produce and deploy. In the early days of computing, we built exquisitely elegant systems because resource constraints forced us to. We need to focus again on the practice of elegant systems design that will translate into faster, more reliable and more secure solutions to relieve us from fighting the storm of complexities and vulnerabilities that threaten to overwhelm us all.

Sam Richman is an associate principal solution architect at Red Hat, specializing in zero trust and DoD ecosystem partnerships. He has more than 20 years of experience in U.S. government enterprise IT, both in the federal service and in multiple industry roles, supporting civilian and Defense Department initiatives such as cybersecurity, agile architecture, application delivery and data integration/analytics. Richman was also a winner in the 2021 The Cyber Edge Writing contest.
