Let’s Get Real: Learning To Co-exist With Machine Learning

Machine learning (ML) incites both anticipation and anxiety, but by learning to join forces with ML and developing methods for training and use, humans and ML can form a symbiotic working relationship.

While at the recent AFCEA TechNet Cyber event in Baltimore, I asked an Army colonel why machine learning was so top of mind for him. He replied, “It allows me to punch above my weight level.” We all love our force multipliers.

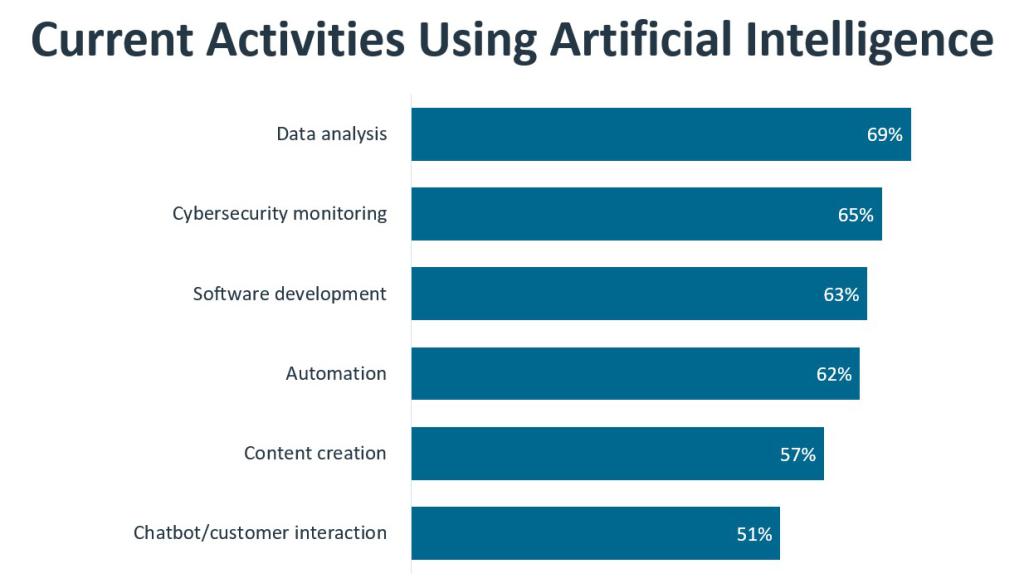

We also like ML because it:

- Eliminates repetitive tasks. ML is good at “brute force” analysis. Recently, I spoke with a cybersecurity analyst who told me that she welcomes her ML co-worker because it quickly sifts through gigabytes of boring log files, allowing her to identify important anomalies.

- Identifies trends. A properly trained ML solution can pick out key elements of an image, or key words in a video, document or conversation, and then flag anomalies most humans can't find. In cybersecurity, AI often helps model threats.

- Offers enforcement. A good ML engine can provide "guardrail services." It can identify where an information technology (IT) worker has made a choice that creates a poor user experience or a cybersecurity risk, and then suggest alternatives.
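To make the "sifting through log files" idea concrete, here is a minimal sketch of the kind of anomaly flagging an analyst might hand off to a machine. It is a deliberately simple statistical stand-in, not a trained ML model: it assumes a hypothetical log format (`"2024-05-01T10:15:32 FAILED LOGIN user=alice"`), counts failed logins per minute, and flags minutes whose robust (median/MAD-based) modified z-score exceeds a cutoff. The function names and the 3.5 cutoff are illustrative choices.

```python
from collections import Counter
from statistics import median


def failures_per_minute(lines: list[str]) -> Counter:
    """Count failed-login lines per minute.

    Assumes a hypothetical format such as
    "2024-05-01T10:15:32 FAILED LOGIN user=alice", where the first
    16 characters ("2024-05-01T10:15") identify the minute.
    Minutes with no failures simply do not appear in the result.
    """
    return Counter(line[:16] for line in lines if "FAILED LOGIN" in line)


def robust_outliers(counts: dict[str, int], cutoff: float = 3.5) -> list[str]:
    """Return keys whose modified z-score (0.6745 * |x - median| / MAD) exceeds cutoff.

    Median and MAD are used instead of mean and standard deviation so that
    a single huge spike does not inflate the baseline it is measured against.
    """
    values = list(counts.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the buckets share the same count; this simple
        # score cannot separate them, so flag nothing rather than divide by zero.
        return []
    return [k for k, v in counts.items() if 0.6745 * abs(v - med) / mad > cutoff]
```

A human analyst would still decide whether a flagged minute is an attack, a misconfigured service or a harmless burst; the code only narrows where she looks.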

But AI can also cause anxiety among those who use it or want to use it.

First, it may be hard to admit, but human beings still tend to treat technology as a “mystery box.” Arthur C. Clarke once said, “Any sufficiently advanced technology is indistinguishable from magic.” Let’s face it: People love the svelte mystery of gadgets. Consider the latest smartwatch. It’s no wonder fast-moving, lateral-thinking technology such as machine learning might seem like magic.

So, when a major advance in artificial intelligence occurs, our relatively superficial collective understanding of technology catches up with us, and we get rattled. Even strong techies tend to treat seemingly new technology this way. Combine this tendency with the power of artificial intelligence (AI), and you've got serious anxiety.

Second, organizations often fall prey to the “garbage in, garbage out” problem. Good technologists know that bad input in the hands of a powerful ML engine can cause serious problems. I asked a chief information officer (CIO) from a large U.S.-based healthcare company if she was worried about ML. She replied that she has enough to worry about from slow-working human beings who make mistakes, not to mention the errors artificial intelligence can create.

Third, we haven’t trained our workers on how to work and play well with their ML co-workers.

Increasingly, we'll need to interface elegantly with our ML co-workers. This means that we need to develop a laser-like focus on exactly how humans think as they receive information from machine-driven sources. I call the space where AI/ML leaves off and humans pick up "an interstice." The interstice is a space where humans and machines can cooperate to create unique information, and we need to work hard to get it right. The key to improving interstitial communication will be upskilling people, which has two critical elements: giving workers more technical skills and helping them engage in better dialogues with their AI co-workers.

If anyone wants to use AI to engage in machine-assisted decision-making, they’ll need to take a step back to the future, as it were, and consider an old but vital activity: the Socratic method. The Socratic method is a cooperative dialogue between two people, sometimes called the teacher and the student. The intended result of the dialogue is enlightenment—what we now might refer to as “AI-enhanced decision-making.”

If properly trained, ML becomes the teacher. But the Socratic method works only if both sides of the equation fulfill four conditions. First, they both need to interact with each other. Second, they each need to listen carefully. Third, both parties need to answer as honestly as possible. Fourth, at least one party needs to have enough smarts to benefit from the dialogue.

Sometimes people don’t have the foundational knowledge to engage in a proper dialogue. Therefore, they can’t take advantage of what AI has to offer. So, proper AI implementation involves training humans to ensure they have an adequate technical and interpersonal foundation.

As far as technical knowledge for IT workers is concerned, there’s some work to do. I’ve spent time with corporate and government leaders in various countries over the last 18 months. Many have told me in one way or another that a number of their new IT workers simply don’t have enough foundational learning. CIOs and chief information security officers from New Jersey to the U.S. Department of Defense to Japan and Thailand have told me that their workers don’t know enough about endpoints, application programming interfaces, fundamental protocols and security controls. If we want to leverage AI, then we have to create a more savvy workforce, which involves more than just learning about tech.

True savvy also involves the ability to ask the right questions. Increasingly, workers will be asked to formulate thoughtful input. This is ironic, because one of the reasons ChatGPT captured so many imaginations is that people felt they could obtain unique information without having to use advanced search techniques. But if we really want to leverage AI, we'll need to train people to pose more thoughtful questions based on true technological savvy.

Many organizations don’t realize the following two things about a successful ML implementation:

- The sheer amount of training data necessary to “jump-start” the project.

- The need to continually curate data used to retrain the application to avoid using incomplete or biased data.

IT leaders describe several major hurdles for AI adoption. Many worry about the cost of the operating infrastructure, and others feel that building the appropriate data sets is a problem.

These data set difficulties are one reason organizations worldwide have fallen prey to bias, including serious problems with discrimination.

Curation and iteration are the two ways organizations overcome bias. Few organizations, however, have the appetite for either, and this is the primary reason so many forms of bias persist. In many cases, what passes for "iteration" means skipping vital steps involving proper AI training, customer experience and cybersecurity, with no real plan to resolve the technical debt that results.

Proper data curation involves continually training the machine learning engine and ensuring that the incoming data doesn’t offer opportunities for bias to develop. Therefore, any organization that uses a machine learning tool must realize that it has entered the data curation business.
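One small, concrete curation habit is to check each incoming training batch before it reaches the engine. The sketch below assumes a hypothetical record layout (dictionaries with a group field and a boolean label) and computes the positive-label rate per group, flagging the batch for human review when the largest gap between groups exceeds a tolerance. This is a demographic-parity-style check, only one of many possible bias checks; the field names and the 0.2 tolerance are illustrative assumptions, and a large gap is a prompt for investigation, not proof of bias.

```python
from collections import defaultdict


def positive_rate_by_group(records: list[dict], group_key: str, label_key: str) -> dict:
    """Fraction of records with a truthy label, computed separately for each group."""
    totals: dict = defaultdict(int)
    positives: dict = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[label_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def needs_review(records: list[dict], group_key: str, label_key: str,
                 max_gap: float = 0.2) -> tuple[bool, dict]:
    """Flag a (non-empty) batch whose per-group positive rates differ by more than max_gap."""
    rates = positive_rate_by_group(records, group_key, label_key)
    gap = max(rates.values()) - min(rates.values())
    return gap > max_gap, rates
```

The point of the design is that the code does not "fix" anything on its own; it routes suspicious batches to the people who, as curators, decide what the engine learns from.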

It’s important to focus on industry-strength ML implementations. The most critical implementations combine a long-established technology with ML. For example, Red Hat’s Lightspeed project combines the long-standing Ansible automation platform with IBM’s Watson. Amazon’s Rekognition combines ML with video and image analysis services. Spam filter products have been using ML—specifically the Naïve Bayes algorithm—for decades.
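Naïve Bayes is a good example of how unglamorous a proven ML technique can be. The sketch below is a from-scratch multinomial Naïve Bayes classifier with add-one (Laplace) smoothing; it is not the code of any particular spam product. The class name, whitespace tokenizer and toy training messages are illustrative assumptions; real filters train on far larger corpora with more careful tokenization.

```python
import math
from collections import Counter


class NaiveBayesSpamFilter:
    """Multinomial Naive Bayes over word counts, with add-one (Laplace) smoothing.

    Assumes at least one training message per label before classify() is called.
    """

    LABELS = ("spam", "ham")

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = {label: 0 for label in self.LABELS}

    @staticmethod
    def tokenize(text: str) -> list[str]:
        # Deliberately naive: lowercase and split on whitespace.
        return text.lower().split()

    def train(self, text: str, label: str) -> None:
        self.doc_counts[label] += 1
        self.word_counts[label].update(self.tokenize(text))

    def _log_score(self, words: list[str], label: str, vocab_size: int) -> float:
        # Log prior: share of training messages carrying this label.
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        total_words = sum(self.word_counts[label].values())
        for word in words:
            # Add-one smoothing keeps unseen words from zeroing out the score.
            score += math.log((self.word_counts[label][word] + 1) / (total_words + vocab_size))
        return score

    def classify(self, text: str) -> str:
        words = self.tokenize(text)
        vocab_size = len(set(self.word_counts["spam"]) | set(self.word_counts["ham"]))
        return max(self.LABELS, key=lambda label: self._log_score(words, label, vocab_size))
```

Working in log space avoids numeric underflow when many small word probabilities are multiplied together. The "naïve" part is the assumption that words occur independently given the label, which is wrong in general yet works well enough for filtering mail.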

Recently, the Department of Defense launched its 8140 program, a talent management initiative designed to ensure that military personnel and contractors have the necessary skills. The department is currently training a machine learning engine to scan and evaluate the terabytes of courseware and certification data that will be submitted.

AstraZeneca, for example, uses machine learning to create and validate molecules or biologics to attack disease targets. This helps them develop a specific medicine or therapy more quickly. They also engage in ML-driven model development for their sales force.

They train their engine to read through customer relationship management data to help healthcare providers suggest medications and therapies that might have the greatest chance of success.

All these organizations engage in the activities necessary for a solid ML implementation, including adequate training, data curation and addressing the root causes of issues such as bias.

We have been applying the old 80/20 rule, known as the "Pareto Principle," for a long time. Software developers have long known that 80% of the code for an application can be written in 20% of the time allotted to create the entire application. Now, developers and IT workers will use ML to do the repetitive work and focus on the increasingly unique parts of the project: the remaining 20%.

Success, therefore, will occur when workers draw information from many ML-driven resources that complete the 80% more quickly. This will allow workers more time to think unconventionally and refine their place in the OODA (observe-orient-decide-act) loop. Humans, therefore, don’t need to do repetitive tasks or even retain or capture data. Instead, they need the ability to work with their ML co-workers to think independently and, as necessary, come up with unexpected solutions. This isn’t a new 80/20 rule, but a new way to make humans more efficient players.

Finally, success will happen when machine learning fuels our work rather than determines it. We need to remember that we are increasingly the directors and curators. This realization will enable us to get real and use our new data and information sources wisely.

James Stanger has consulted with corporate and government leaders worldwide about security, open source and workforce development for more than 25 years. An award-winning author, blogger and educator, Stanger is currently chief technology evangelist at CompTIA.

The opinions expressed in this article are not to be construed as official or reflecting the views of AFCEA International.
