NIST Program Helps Unmask Facial Recognition Spoofers

Facial recognition technology is becoming more common, but it can be spoofed, for example by someone wearing a mask. Images can also present other challenges, such as subjects whose eyes are closed.

Vendors around the world are developing algorithms to detect such problems and can submit them to the U.S. National Institute of Standards and Technology (NIST) for evaluation. Researchers run the algorithms against a set of photos that only NIST can access and compare the results against an array of benchmarks, Patrick Grother, a NIST computer scientist, explained in a recent interview. “We have compiled software libraries, and they come to us, and we run [the algorithms] on a set of images that are sort of hidden away, that are not accessible to developers or anybody else. And then we compute biometric performance statistics by running those algorithms on those images, and we publish reports. This can be done at quite a large scale. It gives us repeatability and fairness and statistical robustness.”

The research provides information both about face recognition technologies in general, which is of interest to end users and policymakers, and about particular implementations of those capabilities, which is of interest to developers and acquisition personnel. In the national security and defense arena, the research could also be important for operations.

The work recently resulted in two published studies: one on software designed to detect spoof attacks, the other on software’s ability to identify potential problems with a photograph or digital image, such as those captured for driver’s licenses or passports. Together, the two reports provide insights into how effectively modern image-processing software performs face analysis, according to a NIST press release.

NIST distinguishes between face recognition and face analysis. The former attempts to identify a person based on an image. The latter aims to characterize the image itself, for example by flagging pictures that are problematic, whether because of nefarious intent or simply because of mistakes in how the photo was captured, the press release explains.

Problematic images might include simple mistakes such as closed eyes or blurriness, or deliberate spoofing attempts such as someone wearing a mask resembling another person. “These are the sort of defects that some developers claim their software can detect, and the FATE [Face Analysis Technology Evaluation] track is concerned with evaluating these claims,” Mei Ngan, another NIST computer scientist, explained in the press release.

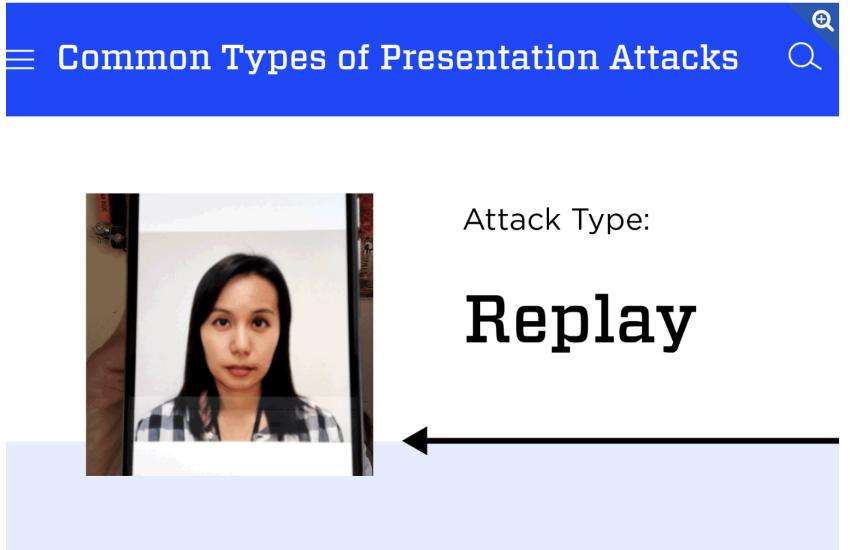

The first study evaluated 82 algorithms submitted by 45 developers. The researchers assessed the software’s ability to detect impersonation, in which someone attempts to look like someone else, and evasion, in which they try not to look like themselves.

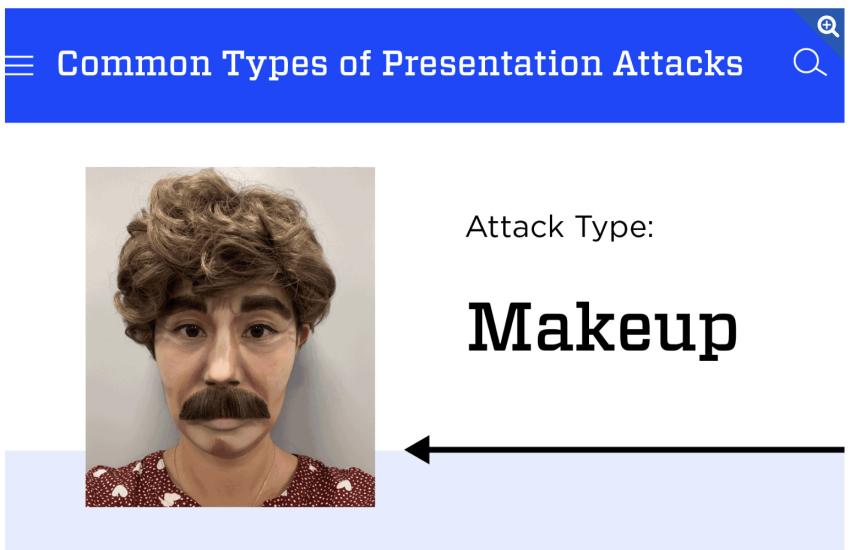

The team evaluated the algorithms against nine types of presentation attacks, ranging from a person wearing a sophisticated mask designed to mimic another person’s face to simpler attacks, such as holding a photo of another person up to the camera or wearing an N95 mask to partially cover the face.

For the second study, which focused on image quality, the team received seven algorithms from five developers and used 20 different quality measures, including underexposure and background uniformity, all based on internationally accepted passport standards.

Researchers said all the algorithms showed mixed results: each performed better on some of the 20 measures than on others. “That’s generally the case across all of our benchmarks, but there’s a spectrum of performance across the industry,” Grother said. “If a dozen developers send us an algorithm, we’ll see a range of performance. Some are better at it than others.”

He added that seeing others perform better can motivate those who don’t do so well. “If one developer sees much better performance from another, then it could inspire them to know that better performance is possible. And then they could go back to the drawing board and try to figure out how to achieve that. This gives the developers insight into their capability. And then they try to employ new and better methods to improve.”

The results will inform ISO/IEC 29794-5, a standard NIST is helping to develop that will lay out guidelines for the quality measures an algorithm should check.

The two studies are the first on the subject since August, when NIST divided its Face Recognition Vendor Test program into two tracks: Face Recognition Technology Evaluation (FRTE) and Face Analysis Technology Evaluation (FATE). Work involving the processing and analysis of images, such as the two new studies, now falls under the FATE track. Tests on both tracks are meant to inform developers, end users, standards processes, and policymakers and decision-makers about the capabilities of algorithms.

Researchers use different benchmarks in each track to test images or videos. For face recognition, the first benchmark is one-to-one (1:1) verification, which confirms that a person is who they claim to be by matching their face against a single reference image. This is useful for passports and licenses, and for phones or computers that use face recognition to grant access. The second face recognition benchmark is one-to-N (1:N) search. “One-to-N search is like a Google search, except you upload a face and you search it against some database. That’s what happens in law enforcement. It happens with passport deduplication, driving license deduplication.”
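To make the difference between the two benchmarks concrete, here is a minimal Python sketch. It assumes each face has already been reduced to a numeric feature vector and that similarity is measured with cosine similarity against a fixed threshold; the function names, the 0.8 threshold, and the three-dimensional toy vectors are all hypothetical and are not taken from NIST or any vendor. Verification makes one comparison against a claimed identity, while search compares the probe against every enrolled identity and returns a ranked candidate list.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two face feature vectors (1.0 = identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def verify(probe, enrolled, threshold=0.8):
    """1:1 verification: compare the probe with the single claimed identity."""
    return cosine_similarity(probe, enrolled) >= threshold

def search(probe, gallery, threshold=0.8, top_k=3):
    """1:N search: score the probe against every enrolled identity, best first."""
    scored = sorted(
        ((name, cosine_similarity(probe, emb)) for name, emb in gallery.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )
    # Keep only the top candidates whose score clears the threshold.
    return [(name, score) for name, score in scored[:top_k] if score >= threshold]

# Hypothetical toy gallery; real systems use learned embeddings with many more dimensions.
gallery = {
    "alice": [1.0, 0.0, 0.0],
    "bob": [0.0, 1.0, 0.0],
    "carol": [0.7, 0.7, 0.0],
}
probe = [0.9, 0.1, 0.0]

print(verify(probe, gallery["alice"]))               # True
print(verify(probe, gallery["bob"]))                 # False
print([name for name, _ in search(probe, gallery)])  # ['alice']
```

The cost difference follows directly: verification is one comparison per attempt, whereas search grows with the size of the database, which is one reason the two are benchmarked separately.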

The third benchmark addresses the challenge of telling twins apart. “Twins cause some false positives in systems, meaning your twin could open your phone,” Grother explained. “If you’re doing biometrics, you want unique identity, and if two twins can unlock a phone, then that’s not quite unique.”
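False positives of this kind are commonly summarized as a false match rate: the share of comparisons between two different people that the system wrongly accepts at a given threshold. The Python sketch below is illustrative only. The scores are made up, not NIST data, and the 0.8 threshold is a hypothetical operating point; the point is that if twins produce impostor scores close to genuine matches, the same threshold lets far more of them through.

```python
def false_match_rate(impostor_scores, threshold):
    """Share of impostor comparisons (different people) wrongly accepted."""
    if not impostor_scores:
        raise ValueError("need at least one impostor score")
    accepted = sum(1 for score in impostor_scores if score >= threshold)
    return accepted / len(impostor_scores)

THRESHOLD = 0.8  # hypothetical operating threshold

# Made-up similarity scores for illustration only, not NIST results.
unrelated_impostors = [0.10, 0.22, 0.35, 0.18, 0.05]
twin_impostors = [0.85, 0.78, 0.91, 0.66, 0.88]

print(false_match_rate(unrelated_impostors, THRESHOLD))  # 0.0
print(false_match_rate(twin_impostors, THRESHOLD))       # 0.6
```

Raising the threshold would cut the twin false matches but would also reject more genuine users, which is why distinguishing twins is treated as its own benchmark rather than a tuning problem.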

For the face analysis track, the benchmarks center on image quality, which involves both quality detection and quality summarization. Some problems are purely accidental, such as blurriness or a person wearing a hat and sunglasses. Others involve intentional subversion, or presentation attacks. “They’re trying to submit a sample that will not work in the intended manner. And that can be achieved with maybe cosmetics or face masks. Maybe I could try to break into your phone by appearing like you,” Grother offered.

Another recently added benchmark for face analysis involves age estimation. NIST expected to issue a report by the end of 2023. “Can you just look at a photo and say how old is the person in the photo without saying who they are? That’s got new uses in online safety for children, keeping them away from alcohol or pornography.” Researchers also added a demographics benchmark in 2019 and expected a new report on that capability in late 2023 as well.

Another important face analysis benchmark is the ability to detect morphed images, created by blending faces with “graphical techniques” or neural networks. “It’s possible to combine your face and my face into one photo. If you look at that photo, it sort of resembles both of us, and if you can get that photo onto an ID card, or into a phone or into a passport, then two people could use that passport,” Grother elaborated.
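Why a morph can work for two people can be shown with a deliberately simplified Python sketch. Real morphing attacks blend images, not feature vectors, so this is a toy model under stated assumptions: faces are represented as hypothetical two-dimensional feature vectors, the "morph" is a plain average of them, and matching uses cosine similarity with a hypothetical 0.8 threshold. The two people do not match each other, yet the blend matches both.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two feature vectors (1.0 = identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def blend(a, b, alpha=0.5):
    """Toy 'morph': weighted average of two feature vectors (not pixels)."""
    return [alpha * x + (1 - alpha) * y for x, y in zip(a, b)]

THRESHOLD = 0.8  # hypothetical match threshold

person_a = [1.0, 0.0]  # hypothetical feature vector for one person
person_b = [0.6, 0.8]  # hypothetical feature vector for another person
morph = blend(person_a, person_b)

print(cosine_similarity(person_a, person_b) >= THRESHOLD)  # False: A and B do not match
print(cosine_similarity(morph, person_a) >= THRESHOLD)     # True: morph matches A
print(cosine_similarity(morph, person_b) >= THRESHOLD)     # True: morph matches B
```

This is why morph detection has to examine the image itself for signs of blending: a matcher that only compares faces will, by design, accept a good morph for both contributors.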

The original NIST program, the Face Recognition Vendor Test, started nearly 25 years ago as part of the U.S. Defense Department’s counterdrug efforts, evaluating prototypes funded by the U.S. government. Today, NIST uses an “open door” approach in which vendors worldwide can voluntarily submit their algorithms for testing.
