AI Will Reboot the Army's Battlefield

Artificial intelligence, or AI, will become an integral warfighter for the U.S. Army if the service’s research arm has its way. Scientists at the Army Research Laboratory are pursuing several major goals in AI that, taken together, could revolutionize the composition of a warfighting force in the future.

The result of their diverse efforts may be a battlefield densely populated by intelligent devices cooperating with their human counterparts. This AI could take the form of self-directing sensors, intelligent munitions, smart exoskeletons, physical machines such as autonomous robots, or virtual agents that control networks and wage defensive and offensive cyber war.

And it won’t be just the virtual agents that wage direct combat. Intelligent devices will fight their counterparts on the enemy’s side. Active protection systems such as missile defenses will be smarter and more ubiquitous, and this trend will extend to intelligent countermunitions targeting the adversary’s weapons. Intelligent hunters will seek, discover and defeat enemy sensors.

“In this battlefield richly populated by intelligent things, humans will be essentially one of the species of intelligence,” says Alexander Kott, chief scientist at the Army Research Laboratory (ARL). “They will be a very unique, special, important and powerful species on the battlefield, but nevertheless, we humans need to reconcile ourselves fairly soon that we are becoming part of a broader universe of intelligent things.”

This evolution will be front and center on the battlefield. When humans are not the only intelligent life on Earth, they will have to learn how to relate to their creations, Kott notes. On the battlefield, the relationship may range from command and control to outright partnership.

Today, most ongoing AI research focuses on exploiting recent deep learning breakthroughs, particularly for commercial opportunities, Kott states. But these multibillion-dollar opportunities largely occur in benign environments, where the primary AI objectives are improving reliability, reducing risks or errors, and addressing liability concerns. Only a fraction of this research will benefit the defense community, he warns.

Other commercial AI research, especially in virtual or augmented reality, will involve entertainment. Kott points out that this research will aid the defense community to an extent because of its relevance to training. But the rugged and dynamic nature of the battlefield requires unique approaches to military AI development.

Kott says a large segment of the ARL’s research portfolio focuses on what the lab defines as essential programs, and about half of those heavily depend on AI. Top among the lab’s AI research priorities is autonomous intelligent maneuver, which includes autonomous mobility. Today, military robots must be largely teleoperated. While the commercial sector has begun to explore self-driving vehicles, these are chiefly designed to operate on well-defined roads. The Army also is working on vehicles that can run autonomously in convoys or on unimproved but well-ordered roads.

But that pales in comparison with the Army's requirements for autonomous vehicles. They must be able to perform in complex environments, such as rough terrain with uneven surfaces that include trenches or artillery craters, fallen trees and dense vegetation. Away from such open terrain, these vehicles must also be able to function on paved urban streets littered with rubble, broken vehicles and barbed wire. “What we need is autonomous mobility for military vehicles in this kind of extremely complex terrain—at tactically meaningful speeds,” Kott emphasizes.

Presently, teleoperation in complex terrain tends to be limited to just a few kilometers per hour, he points out. The Army needs vehicles that can move rapidly amid enemy operations, which means speeds of tens of kilometers per hour. This movement also may include climbing over barricades or other large obstacles. And autonomous vehicles must be capable of self-management, handling logistics such as recharging or refueling and righting themselves when overturned.

Vehicles also must be capable of adversarial reasoning. They must be able to think about where to move in terms of battlefield conditions such as concealment or line of fire. And they have to anticipate enemy deception measures such as hidden tiger pits or other booby traps.

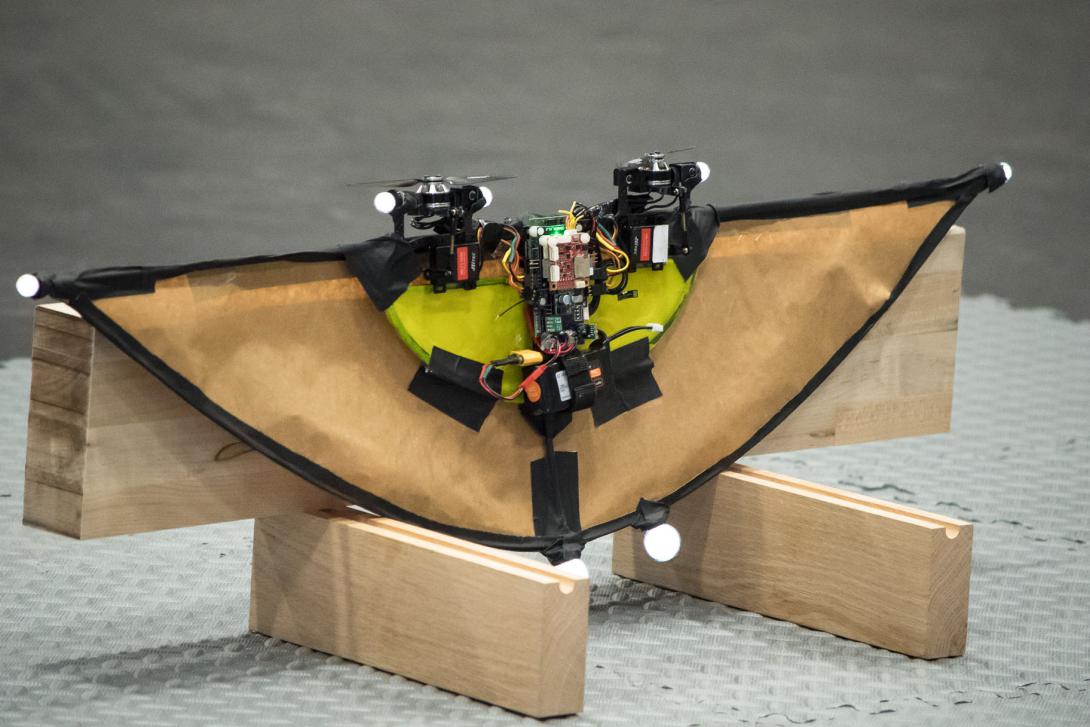

Another of the lab’s research concerns is what Kott calls distributed collaborative engagement in highly contested environments, which are defined by having active and capable adversaries. At the heart of this effort is the development of intelligent munitions that can fly over extended ranges and defeat challenging targets selected by a human in the decision loop. As these munitions approach their target, they would understand and evade area defenses, including both static and active protection systems. And each would collaborate with similar munitions to converge on a well-protected target.

The laboratory’s third priority AI application involves cyber and electromagnetic activities, or CEMA, in complex environments. Kott relates that the modern battlefield is saturated with computing and processing devices that are susceptible to a variety of CEMA attacks. Dealing with these attacks will require bringing AI to bear, he states.

AI would help defend communications, computing, and intelligence, surveillance and reconnaissance (ISR) systems from CEMA attacks. “Imagine something like an intelligent cyber defense agent that resides on networks, on a variety of devices on those networks, and continually observes the situation in and around each device and [produces] a number of actions necessary to defend those networks and devices,” Kott offers.

He adds that these AI-driven defensive actions will include concealment and camouflage, in which devices are hidden or disguised as something other than their actual identity—a “cyber electromagnetic sense of camouflage.” Because these actions would have to take place within milliseconds, they largely would operate autonomously under human-written rules, Kott says.
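The idea of autonomous action under human-written rules can be illustrated with a deliberately simple sketch. In it, a device-resident agent checks each observation against a short list of human-authored conditions and returns only the pre-approved responses whose conditions are met. The observation fields, thresholds and action names (`Observation`, `Rule`, `decide`, `change_emission_profile` and so on) are hypothetical and invented for illustration; they do not describe any ARL system.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Observation:
    """One snapshot of what a device-resident agent sees (hypothetical fields)."""
    device_id: str
    failed_logins_per_min: int
    outbound_mb_per_min: float
    unknown_beacon_detected: bool


@dataclass
class Rule:
    """A human-authored rule: a condition on an observation and the action it triggers."""
    name: str
    condition: Callable[[Observation], bool]
    action: str


# Hypothetical human-written policy: the agent may only choose among these
# pre-approved actions. Thresholds are illustrative, not operational values.
RULES: List[Rule] = [
    Rule("brute_force", lambda o: o.failed_logins_per_min > 20, "lock_accounts"),
    Rule("exfiltration", lambda o: o.outbound_mb_per_min > 500.0, "throttle_outbound"),
    # A camouflage-style response: alter how the device appears to an observer.
    Rule("beaconing", lambda o: o.unknown_beacon_detected, "change_emission_profile"),
]


def decide(obs: Observation, rules: List[Rule] = RULES) -> List[str]:
    """Return every pre-approved action whose rule fires, in rule order."""
    return [r.action for r in rules if r.condition(obs)]


if __name__ == "__main__":
    obs = Observation("radio-7", failed_logins_per_min=35,
                      outbound_mb_per_min=12.0, unknown_beacon_detected=True)
    print(decide(obs))  # ['lock_accounts', 'change_emission_profile']
```

Keeping each decision a plain condition check is what makes very fast response plausible in this toy: humans fix the policy ahead of time, and the agent only matches observations against it.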

The ARL’s fourth research area offers the potential for the most exotic application of AI in the force, he asserts. Human-agent teaming would provide a direct link between AI systems and humans on the battlefield.

“Warfare is, was and will be a human affair—a contest of human wills, human intellects and human endurance,” Kott says. “Intelligent things on the battlefield will be numerous and capable, but they will be always operating in conjunction with humans under their control.

“That means the intelligent things will have to understand humans, and humans will have to understand intelligent things. They will have to be able to form teams to execute complex tasks together under very difficult conditions in a high operating tempo,” he declares.

In its human-agent teaming, the ARL is developing a number of technologies while pursuing basic science in how humans can better understand intelligent devices. The reverse also holds true, and the lab is exploring “how to form and maintain a cohesive, well-performing team of humans and intelligent things,” Kott reports.

One example of this teaming research involves interactions between humans and AI devices. Today, these interactions are “surprisingly limited,” Kott says. People can use voice-activated commercial search engines to extract information from the web, but even this technology, the product of decades of work, can handle only a single question at a time, and the specific answer must exist somewhere on the web.

Kott states that present-day AI generally cannot sustain a continuous conversation with a human while focused on a particular joint task. To do so, the AI must maintain the context of the conversation, remembering the questions and answers throughout and understanding their meanings and implications. And it must do this in real time.

Overcoming these limitations is the focus of ARL research in human-agent teaming. “We’re working on these robust interactions between AI and humans by natural language in a dialogue-type style with clarifications and exchanges,” Kott says. Both parties must be able to understand each other and the intent of a question in the context of previous questions and answers—as well as in the context of physical actions, he points out.
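A toy sketch can show what maintaining conversational context means at its very simplest: the agent records every turn and uses that history to resolve a later reference such as “it.” The vocabulary, class and method names here are hypothetical, and the keyword matching is a stand-in assumption; dialogue of the kind Kott describes would require far richer language understanding.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical vocabulary of things the agent can recognize in an utterance.
KNOWN_ENTITIES = {"bridge", "ridge", "convoy", "checkpoint"}


@dataclass
class DialogueContext:
    """Running memory of a human-agent exchange (toy sketch)."""
    turns: List[Tuple[str, str]] = field(default_factory=list)  # (speaker, utterance)
    last_entity: Optional[str] = None

    def add_turn(self, speaker: str, utterance: str) -> None:
        """Store the turn and remember the most recently mentioned known entity."""
        self.turns.append((speaker, utterance))
        for word in utterance.lower().split():
            word = word.strip("?.,!")
            if word in KNOWN_ENTITIES:
                self.last_entity = word

    def resolve(self, utterance: str) -> str:
        """Rewrite the pronoun 'it' as the most recently mentioned entity, if any."""
        if self.last_entity is None:
            return utterance
        out = []
        for word in utterance.split():
            core = word.rstrip("?.,!")
            if core.lower() == "it":
                # Keep any trailing punctuation that followed the pronoun.
                out.append("the " + self.last_entity + word[len(core):])
            else:
                out.append(word)
        return " ".join(out)


if __name__ == "__main__":
    ctx = DialogueContext()
    ctx.add_turn("human", "Scout the bridge.")
    ctx.add_turn("agent", "Moving to the bridge now.")
    question = ctx.resolve("Is it passable?")
    ctx.add_turn("human", question)
    print(question)  # Is the bridge passable?
```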

The AI also must be able to explain itself to humans, Kott offers. He notes that the ARL is part of the Defense Advanced Research Projects Agency’s (DARPA’s) ambitious effort to develop explainable AI, but the lab is pursuing other ways to make AI more understandable to humans as well. The ARL has had some success in transparency-based interfaces, in which AI can make itself clearer to its human “teammate” without any explicit explanation. This mirrors many human-to-human interactions, in which people make themselves transparent to others without explicit explanations, Kott observes.

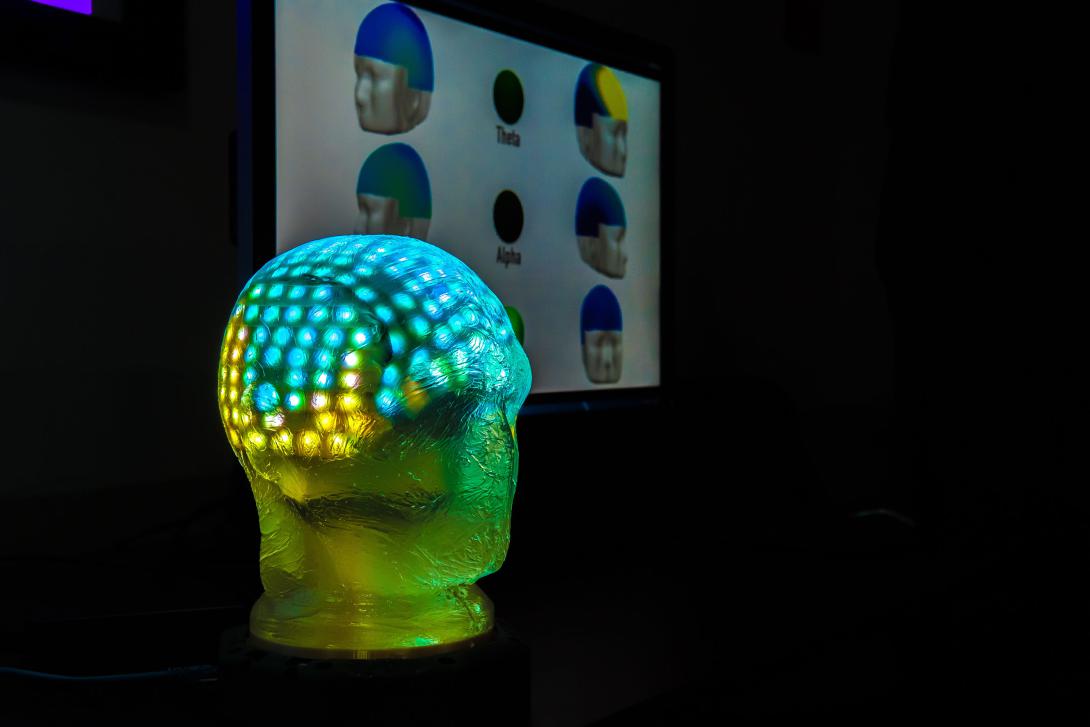

AI also must understand the human mental state, and the lab has succeeded at research that allows an AI system to detect physical cues from humans. This work has gone as far as reading electroencephalogram-type input from people to determine what task they want the AI system to perform, Kott reveals. Ultimately, AI would literally be able to read human minds, he predicts.

Related work would allow people to teach AI systems, Kott continues. The ARL has achieved significant advances in human-in-the-loop reinforcement learning, in which the lab has drastically reduced the number of examples needed for a machine learning system to learn. This is accomplished by giving human feedback to the AI system on whether its actions are correct.

This research is vital because machine learning now depends on a large number of examples or repetitions, Kott notes. “We can drastically, by many orders of magnitude, reduce the number of the required examples or repetitions by providing human feedback,” he explains. “In this way, AI is effectively being taught by humans.”
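The mechanism Kott describes, in which a human tells the learner whether each action was correct, can be sketched with a small tabular learner. This is a generic illustration under stated assumptions, not the ARL's method: the states, actions and the simulated human judge (the `correct` table) are hypothetical, and each judgment simply adds +1 or -1 to a running score for that state-action pair. Because every action is judged immediately, in this toy setup a state with four possible actions is resolved after at most four greedy tries, whereas a learner that sees only a delayed, mission-level reward typically needs many more repetitions to work out which action was responsible.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List


class FeedbackLearner:
    """Learns a state -> action policy from binary human feedback (toy sketch)."""

    def __init__(self, actions: List[str], epsilon: float = 0.1, seed: int = 0):
        self.actions = list(actions)
        self.epsilon = epsilon                # chance of trying a random action
        self.rng = random.Random(seed)        # seeded for reproducible runs
        self.credit: Dict[tuple, float] = defaultdict(float)  # (state, action) -> summed feedback

    def policy(self, state: Hashable) -> str:
        """Greedy choice: the action with the most accumulated feedback (ties go to list order)."""
        return max(self.actions, key=lambda a: self.credit[(state, a)])

    def act(self, state: Hashable) -> str:
        """Epsilon-greedy: occasionally explore, otherwise follow the current policy."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return self.policy(state)

    def give_feedback(self, state: Hashable, action: str, signal: float) -> None:
        """Record a human judgment: +1.0 for correct, -1.0 for wrong."""
        self.credit[(state, action)] += signal


def train(learner: FeedbackLearner, correct: Dict[str, str], rounds: int) -> int:
    """Run `rounds` passes over all states; a simulated human judges every action.

    Returns the total number of human-judged interactions.
    """
    interactions = 0
    for _ in range(rounds):
        for state, right_action in correct.items():
            action = learner.act(state)
            learner.give_feedback(state, action, 1.0 if action == right_action else -1.0)
            interactions += 1
    return interactions


if __name__ == "__main__":
    # Hypothetical situations and the action a human instructor considers correct.
    correct = {"open_ground": "flank", "under_fire": "retreat", "cover_ahead": "advance"}
    learner = FeedbackLearner(["advance", "hold", "retreat", "flank"], epsilon=0.0)
    n = train(learner, correct, rounds=4)
    print(n, {s: learner.policy(s) for s in correct})
    # prints 12 {'open_ground': 'flank', 'under_fire': 'retreat', 'cover_ahead': 'advance'}
```

Setting `epsilon` above zero adds random exploration, which matters when human feedback can be noisy or incomplete; the demo disables it so the run is fully deterministic.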

None of these potential advances is just around the corner, however. Several challenges must be overcome to attain these goals. To develop vehicles capable of autonomous intelligent maneuver, the ARL must break from the existing autonomous vehicle systems that characterize commercial automobile control and focus instead on battlefield conditions. This AI must be designed for unstructured operation in which chaos is the rule, not the exception, Kott says.

Machine learning is another challenge. He points out that it principally depends on large, orderly, accurate and well-labeled datasets. But Army-relevant machine learning must work with data that differ dramatically from these descriptions. The data must be observed and learned from in real time, under great constraints, and often from only a few observations. Adversaries frequently change techniques and materiel, so observations are few and fleeting and rife with potential errors.

Kott describes this challenge as D5—dinky, dirty, dynamic, deceptive data. A battlefield AI system may have only a few dozen or hundreds of examples to learn from, while today’s machine learning typically relies on millions of examples. The military data will be uncurated, full of noise and mistakes. It will be characterized by rapid changes in observations and the environment. And adversaries will do their best to deceive sensor systems to corrupt their data findings. Today’s machine learning is easy to deceive, he points out, and clever adversaries can exploit it with deadly consequences.

Another problem is AI’s difficulty in communicating with humans. The research effort for human-agent teaming must overcome the challenge that AI remains largely unexplainable to humans. Kott says the ARL’s achievements in this area only scratch the surface of AI’s opacity, and further research will be necessary to make AI’s reasoning clear to its human partners.

Nevertheless, the march continues toward AI on the battlefield. Kott anticipates a change on par with the revolution triggered by gunpowder, which supplanted human muscle power with chemical energy. That development altered society in many ways, and he foresees the augmentation of the human brain with AI having a similar profound effect.

“We may be facing a potentially new revolution in military affairs,” Kott declares. “In the large scheme of things, the appearance of intelligent artificial beings on the battlefield is mind-boggling and is nothing short of revolutionary.”

Comment


It is encouraging to see that the human-machine dataflow bridge is getting some attention from DoD. I have implemented a situational awareness platform in a tactical mission set. Information flow in both directions is a major choke point for effectiveness. Current data input procedures require user time and attention that are unrealistic in the tactical realm. Also, data abstraction models are not properly formatted for a user whose perceptual channels are already saturated.
