Decentralized Defense: How Federated Learning Strengthens U.S. AI

The Great Power Competition is no longer confined to traditional warfare. It plays out in data, algorithms and artificial intelligence (AI). As adversaries weaponize misinformation and cyber attacks escalate, the United States faces a new frontier: how to develop smarter systems using machine learning without compromising its most sensitive data.

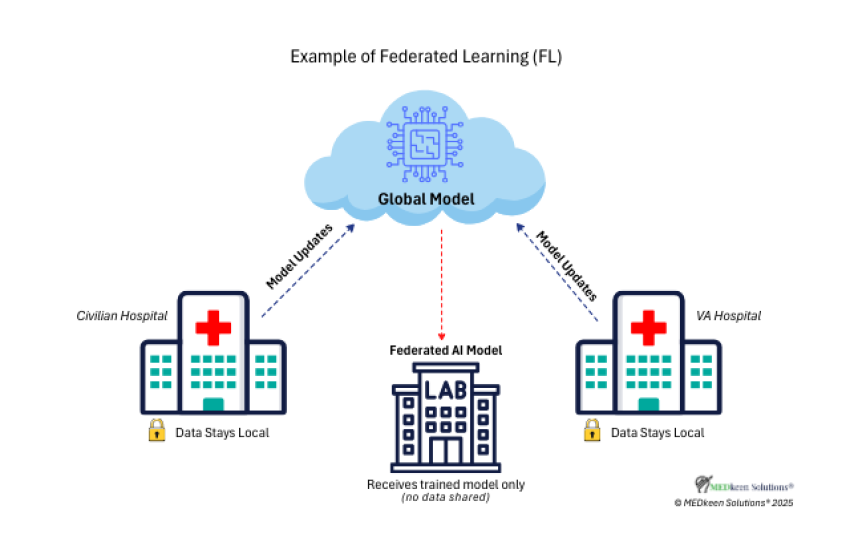

Federated learning (FL) addresses this challenge head-on. By allowing AI models to train across decentralized networks without transferring raw data, FL strengthens security, protects privacy and preserves operational control. These are qualities essential to national security.

As noted in SIGNAL Media’s The Cyber Edge (October 2023), with China accelerating its AI capabilities, secure and scalable innovation is no longer just a technical goal. It is a matter of national preparedness. From DeepSeek’s challenge to OpenAI and Google to Europe’s advance in its own sovereign AI initiatives, the stakes could not be higher. The race for technological leadership is now a matter of global influence, economic power and national security.

How Federated Learning Works

Traditional AI models rely on a centralized and trusted training environment, assuming all contributors are reliable. However, in real-world government systems, where internal threats and external adversaries are always a risk, that assumption does not hold. As Gafni et al. (2021) noted, even a single compromised participant can poison the model’s learning process. FL introduces safeguards that help detect and isolate these attacks, supporting greater resilience across homeland security, continuity of operations and continuity of government environments.
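To make the poisoning risk concrete, here is a minimal sketch of one commonly discussed safeguard: replacing a plain average of participant updates with a coordinate-wise median, then flagging updates that sit far from it. This is an illustration only, not the method described by Gafni et al. The function names, the threshold and the sample updates are all hypothetical.

```python
from statistics import median
from typing import List

Update = List[float]  # one participant's model update (hypothetical 2-parameter model)


def mean_aggregate(updates: List[Update]) -> Update:
    """Plain averaging: a single extreme update can drag the result far off."""
    return [sum(col) / len(col) for col in zip(*updates)]


def median_aggregate(updates: List[Update]) -> Update:
    """Coordinate-wise median: far less sensitive to a minority of bad updates."""
    return [median(col) for col in zip(*updates)]


def flag_outliers(updates: List[Update], threshold: float = 3.0) -> List[int]:
    """Return indices of updates unusually far from the median update.

    The threshold is an assumed tuning knob, expressed as a multiple of the
    typical (median) distance between an update and the median update.
    """
    center = median_aggregate(updates)
    dists = [
        sum((u - c) ** 2 for u, c in zip(upd, center)) ** 0.5 for upd in updates
    ]
    typical = max(median(dists), 1e-12)  # guard against division-like blowups at zero
    return [i for i, d in enumerate(dists) if d > threshold * typical]


# Hypothetical round: four honest participants and one compromised one (index 4).
UPDATES = [
    [0.9, 1.1],
    [1.0, 1.0],
    [1.1, 0.9],
    [1.0, 1.05],
    [50.0, -50.0],
]

if __name__ == "__main__":
    print("mean:   ", mean_aggregate(UPDATES))
    print("median: ", median_aggregate(UPDATES))
    print("flagged:", flag_outliers(UPDATES))
```

In this toy round the plain average is pulled strongly toward the compromised update, while the median stays near the honest participants. Real deployments would likely combine several defenses, since a median alone does not stop every attack.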

These threats reinforce a core federal cybersecurity principle: zero trust. In a zero-trust environment, no internal or external actor is assumed to be safe by default. FL supports this model by minimizing shared attack surfaces and enabling systems to validate updates without transferring sensitive data. Even in disconnected or multi-agency settings, FL offers a privacy-preserving architecture aligned with zero-trust security frameworks. This kind of setup works well when agencies or teams need to work together but cannot share data directly, whether because of policy, security or simple practicality. As federal agencies modernize their infrastructure, FL’s decentralized strengths become increasingly valuable in sensitive and regulated environments.

Federated Learning in Sensitive and Regulated Domains

FL can operate within government, defense, health care and utility networks, enabling private data to remain local while still contributing to enterprise-scale model development. This decentralized approach is especially well-suited for agencies that must comply with privacy regulations or handle classified information.

According to Check Point Research, U.S. utilities experienced a 70% surge in cyber attacks in 2024 compared to the previous year, underscoring the growing vulnerability of critical infrastructure.

Utilities today rely heavily on AI-driven monitoring, predictive maintenance and cyber threat detection to keep systems running safely and efficiently. These models are often trained on sensitive operational data collected from distributed systems such as power stations, smart meters and Internet of Things-connected grids. Centralizing that data introduces risk, especially as attack surfaces expand. FL offers a secure alternative. It enables each site or system to train locally while sharing only model updates, not raw infrastructure data. In high-risk sectors like utilities, FL supports collaborative AI development without compromising resilience, control or operational security.
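The "train locally, share only model updates" pattern can be sketched with federated averaging, a widely used baseline. The example below is a toy under stated assumptions: a two-parameter linear model, three hypothetical sites with made-up readings, and assumed values for the learning rate and number of rounds. It is not a description of any specific utility deployment.

```python
from typing import List, Tuple

Weights = List[float]              # [slope, intercept] of a toy linear model
Dataset = List[Tuple[float, float]]  # (input, target) pairs that never leave a site


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.05, epochs: int = 20) -> Weights:
    """Run gradient descent on one site's private data and return new weights."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Combine site weights, weighting each site by its number of samples."""
    total = sum(sizes)
    dim = len(updates[0])
    return [sum(u[i] * s for u, s in zip(updates, sizes)) / total
            for i in range(dim)]


def run_rounds(site_data: List[Dataset], rounds: int = 50) -> Weights:
    """Coordinator loop: it sees weights and sample counts, never raw readings."""
    global_weights: Weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in site_data]
        sizes = [len(data) for data in site_data]
        global_weights = federated_average(updates, sizes)
    return global_weights


# Hypothetical readings from three sites, all generated from y = 2x + 1.
SITES: List[Dataset] = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
    [(1.5, 4.0), (2.5, 6.0)],
]

if __name__ == "__main__":
    slope, intercept = run_rounds(SITES)
    print(f"learned slope={slope:.3f}, intercept={intercept:.3f}")
```

Only the weight vectors and sample counts cross site boundaries here. In practice, model updates can still leak information, so production systems often add protections such as secure aggregation or differential privacy on top of this basic loop.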

Safeguarding Sensitive Systems: Federated Learning in Action

Military, Veterans Affairs (VA) and Industry Hospitals

Military, VA and civilian hospitals are adopting AI to support diagnostics, clinical decision-making and operational efficiency. These efforts often involve training models that analyze medical images, laboratory results or even workforce data to detect disease, recommend treatments or improve triage. However, using this data presents privacy and compliance risks. Regulations such as HIPAA, the Health Insurance Portability and Accountability Act of 1996, and FedRAMP, the Federal Risk and Authorization Management Program, make it difficult to centralize patient information, especially as virtual care platforms and mobile apps increase data exposure.

FL offers a secure path forward. It allows hospitals to collaboratively train AI models without transferring raw patient data. Even organizations with different systems and policies can improve shared models without exchanging protected health records.

This model is already in use across parts of the private health care sector. In some cases, hospitals have trained shared diagnostic models through FL without accessing each other’s patient records.

“Certain pathology departments within competing private hospitals ... train a shared diagnostic algorithm on a combination of their respective data sets.” (Parra-Moyano, Schmedders and Werner, 2024)

For instance, the Department of Veterans Affairs could work with a civilian hospital network to improve diagnostic accuracy for early-stage disease detection. With FL, each institution trains the model locally using its own patient images or test results. No records are shared, but both benefit from stronger, more diverse model performance, meeting privacy requirements without sacrificing innovation.

Resilient AI for Emergency Response and National Continuity

The Department of Homeland Security and the Department of Defense share a critical challenge: using AI to enhance national readiness while protecting the sensitive data that supports their missions. Whether coordinating cyber threat response, managing disaster logistics or ensuring continuity of operations, both agencies rely on distributed, often classified data to make real-time decisions. However, traditional AI models require bringing that data into one place, which increases risk in environments guided by zero trust and strict access controls.

As SIGNAL Media’s The Emerging Edge section noted in January 2024, the intelligence community is already developing AI frameworks with cybersecurity at the core. FL can build on that momentum by enabling agencies to collaborate without exposing raw operational data.

Collaboration between the U.S. Department of Homeland Security (DHS) and Department of Defense (DoD) is already well established. Programs like the National Disaster Medical System coordinate large-scale medical response, while the Joint Cyber Defense Collaborative brings together federal partners to defend against digital threats. These efforts reflect a shared mission: protecting national continuity under pressure.

FL can support that mission by allowing agencies to train models before the next crisis strikes. Imagine DHS, the Federal Emergency Management Agency and DoD working together to create an AI system that predicts patient surges, resource shortages or cyber disruptions. With FL, each agency could use lessons from past operations to train the model locally without ever transferring patient data, classified logistics or internal system activity. The result is a secure and ready tool trained in advance, built for real-world use and deployable when every second counts.

Whether supporting preparation or active response, FL brings both foresight and flexibility to emergency management. It is a practical step toward resilient, privacy-preserving AI that serves national security without compromising the data that protects it.

The Future of Federated Learning in National Security AI

From protecting patient data to helping agencies prepare for the unexpected, FL shows that secure innovation is not just possible; it is necessary. It gives teams the ability to act quickly, work together and stay within the lines of what security and trust demand.

As AI continues to shape how the nation prepares for and responds to threats, FL stands out as a practical, privacy-first approach. It allows agencies to build smarter tools, share insights responsibly and keep control of the data that matters most.

Looking ahead, both government and industry will need to invest in pilots, shared standards and real-world testing. The sooner we build together, the stronger and more resilient our systems become.

FL is not just about protecting data. It is about protecting mission, trust and national readiness.

Khalilah Filmore is the founder and CEO of MEDkeen Solutions, a consulting firm specializing in emerging technologies and operational strategy for health care and government sectors. She serves as vice president of membership for AFCEA Orlando and holds a leadership role with Women in Defense Space Coast Chapter.
