BRIAR Program Tackles Thorny Biometrics Challenges

The simply stated goal of the Biometric Recognition and Identification at Altitude and Range (BRIAR) program is to perform accurate and reliable biometric identification across a wider range of imagery collected from a wider selection of sensors. But that one goal requires overcoming multiple challenges: achieving whole-body biometric identification at long distances and from elevated angles as well as fusing that data with other biometric signatures such as facial recognition.

BRIAR is a four-year, three-phase effort to deliver end-to-end software systems capable of detecting and tracking individuals under severe imaging conditions and extracting and fusing biometric signatures from the body and face. The research is intended to support missions such as counterterrorism, protection of critical infrastructure and transportation facilities, military force protection and border security.

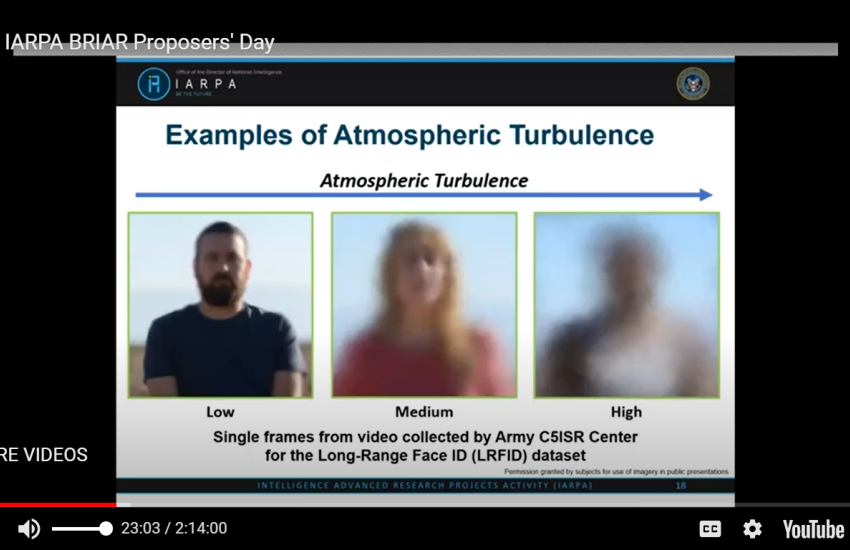

Many intelligence agencies need to identify individuals from more than 300 meters away, through atmospheric turbulence, or from elevated or aerial sensor platforms such as unmanned aerial vehicles or watchtowers with views at unusual angles, according to the Intelligence Advanced Research Projects Activity (IARPA) website for the BRIAR program. Longer ranges or higher altitudes can hinder image quality because of motion, resolution or atmospheric conditions.

The long-range, elevated and angled viewpoints present challenges for modern biometric systems, explains Lars Ericson, IARPA’s BRIAR program manager. “Both of these conditions are not commonly found in current research community or nongovernment biometric use cases.”

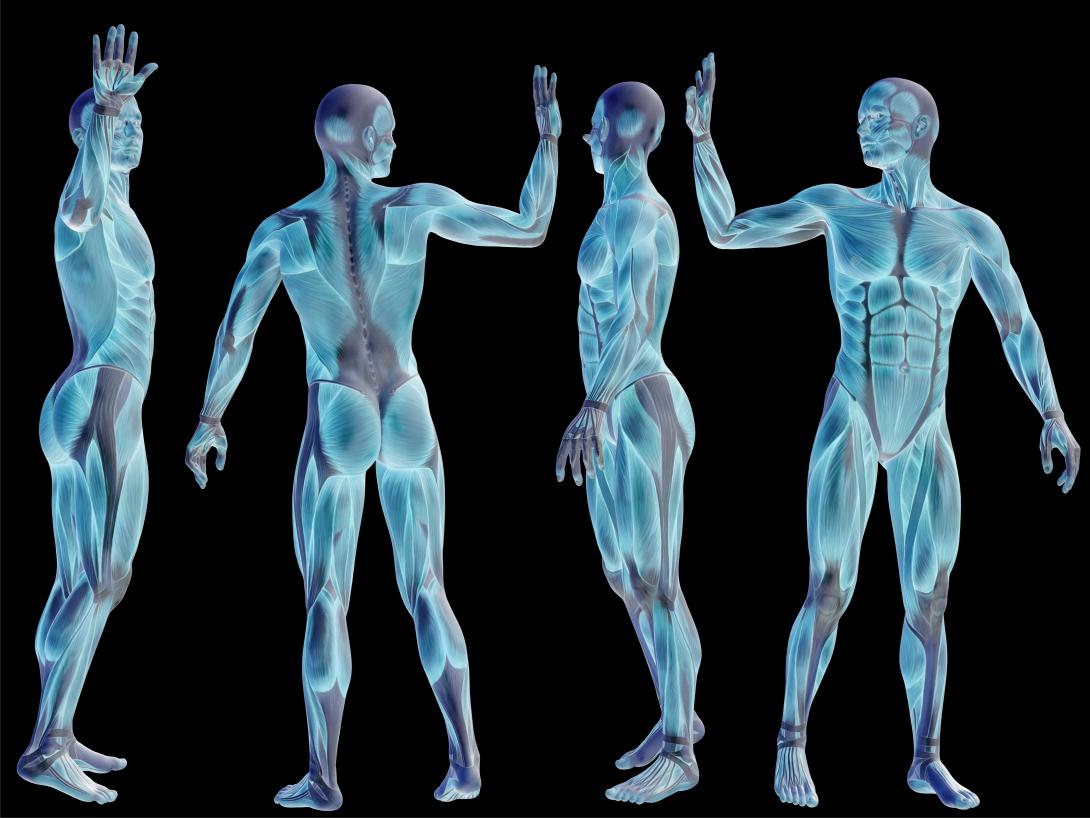

Adding to the challenge is that whole-body biometric technologies have not yet progressed as far as facial recognition, so the researchers need to improve those fundamental capabilities before pushing for success under more challenging conditions. Whole-body identification can include the way a person walks, joint movements, body shape and/or specific limb measurements.

“Although face recognition is mature and … the accuracy is very high in passport-style imagery and those kinds of conditions, whole body or body signatures like gait are much less mature. They’re much less explored,” Ericson says. “The benefit that BRIAR is trying to bring is to not just rely on the traditional biometric modality like face recognition, but leverage all of the biometric signatures that one might observe in video images using recent advances in computer vision to hopefully gain the ability to identify individuals accurately, even in this noisy, low-resolution imagery.”

Although the program is only months old, Ericson says it already can tout some success. First, the test and evaluation team led by Oak Ridge National Laboratory has recruited hundreds of volunteers to contribute unique, mission-relevant images to support the research and eventually evaluate the newly developed algorithms. One of the challenges of the program, and the primary reason so little research has been done, is that ample biometric data from longer distances, higher elevations and odd angles simply is not available for researchers to use.

“This test and evaluation team to date has been able to collect data across dozens of different sensors in these challenging imaging conditions from over 900 consenting volunteers. This data is unique. It hasn’t existed before. And now it will really empower the kind of innovative research that’s going to be occurring within the program,” Ericson reports, noting that the data is governed and approved by a human subject research institutional review board.

Second, he says, research programs typically take at least a year to provide meaningful contributions to the scientific literature, but BRIAR researchers were publishing peer-reviewed scientific papers in half that time. Those articles are available through the Google Scholar link on the BRIAR program page.

One paper, for example, offers a detailed assessment and comparison of different atmospheric turbulence simulation research approaches. “This is an active area of work, and one of the teams has contributed to summarizing and comparing these different techniques so they can be built upon and improved further,” Ericson says.

Another examines how turbulent imaging conditions affect the encodings that machine learning algorithms produce when face images are translated into a machine code used for matching. The team “found some unique effects that occur and ways in which they can be improved upon and addressed in doing biometric machine learning algorithms,” Ericson adds.

BRIAR Program Teams

- Accenture Federal Services

- Intelligent Automation

- Kitware

- Michigan State University

- Systems & Technology Research

- University of Houston

- University of Southern California

- Carnegie Mellon University (focused research)

- General Electric Research (focused research)

Such contributions to science are the primary focus of the initial 18-month phase of the program, which ended in May. It is a proof-of-concept phase in which researchers focus on foundational research into the various subcomponents involved. “In phase one, our approach has been to evolve and mature the key aspects of the research and engineering in a methodical manner. We’re really exploring this topic area. It’s the first program tackling it in the kind of depth and scale we think is needed to provide innovations,” Ericson asserts.

The second phase, also 18 months long, is designed to integrate the various components to provide accurate and reliable data. The teams will deliver interim versions of their systems for evaluation and feedback while achieving more operationally relevant objectives like near real-time video processing.

The final phase will last one year and will focus on optimization of the systems and components. The teams will work toward performance goals capable of supporting IARPA’s transition partners in terms of their mission needs and practical considerations, Ericson says. “For example, in phase three, one of the objectives is to develop versions of their systems that could operate on the edge, on these platforms, to address both bandwidth limitations or computationally restrictive situations. Those kinds of things add increased value to the capabilities that we hope to provide to our partners,” Ericson elaborates.

The program attracted nine teams from industry and academia. The program manager says those teams have been somewhat consistent conceptually regarding the necessary subcomponents for their systems. He describes the capabilities those subcomponents need to provide: detecting individuals in a video scene; associating those detections over time throughout the video; extracting the biometric signatures that are present; encoding those signatures; and then fusing and matching them.
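The last two stages in that list, fusing and matching, can be illustrated with a minimal sketch. It assumes each biometric signature has already been encoded as a numeric vector and uses a weighted average of cosine similarities, one common score-level fusion approach. The function names, weights and sample vectors are hypothetical and are not taken from any BRIAR team's system.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def fused_score(probe, gallery_entry, weights):
    """Weighted average of per-modality similarities.

    Modalities missing from either side (for example, no usable face at
    long range) are skipped, and the remaining weights are renormalized.
    """
    total = 0.0
    weight_sum = 0.0
    for modality, weight in weights.items():
        if modality in probe and modality in gallery_entry:
            total += weight * cosine_similarity(probe[modality], gallery_entry[modality])
            weight_sum += weight
    return total / weight_sum if weight_sum > 0 else 0.0


def best_match(probe, gallery, weights):
    """Return the gallery identity with the highest fused score."""
    return max(gallery, key=lambda name: fused_score(probe, gallery[name], weights))


# Hypothetical two-dimensional encodings, chosen only for illustration.
gallery = {
    "subject_a": {"face": [1.0, 0.0], "body": [0.0, 1.0]},
    "subject_b": {"face": [0.0, 1.0], "body": [1.0, 0.0]},
}
weights = {"face": 0.5, "body": 0.5}  # assumed equal weighting
probe = {"face": [0.9, 0.1], "body": [0.2, 0.8]}

match = best_match(probe, gallery, weights)
print(match)
```

Skipping absent modalities lets the same matcher fall back on body signatures alone when the face is not visible, which is the kind of situation the program's long-range and elevated viewpoints create.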

That said, the teams are taking different approaches. Some, for example, seek to identify a person by their gait. “Others are incorporating the actual physical shape of the body using a dense mesh kind of shape of the body as a signature, while others are using the anthropometric measurements: your arm and leg measurements and how your joints move. Some are doing combinations, but there’s some variety there in how they’re exploring those techniques,” he says.

Additionally, the research teams are taking different approaches to image enhancement and turbulence mitigation, Ericson says. “For example, some teams are very strongly rooted in fundamental physics techniques in terms of how to address these imaging noise conditions. Others are leveraging more current, more modern, machine learning generative adversarial network-type techniques to learn these noise conditions from a data science perspective.”

The program manager describes seven of the teams as “full teams,” meaning they are pursuing the full range of program objectives. Two teams, led by Carnegie Mellon University and General Electric Research, have a narrower focus that could ultimately benefit the overall system. Carnegie Mellon University is evaluating methods of “producing or modifying or adapting these complex deep neural networks to run in edge processing or restricted computation environments, at a more fundamental level.” The idea is to provide solutions to either “take existing networks and modify or prune them or use them to develop leaner versions” or to “build up the algorithms from the ground up” to operate in lean environments.
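As a rough illustration of what pruning an existing network can mean, the sketch below applies magnitude pruning, a widely used baseline that zeroes the smallest weights so that a layer becomes sparse. It is an assumption for illustration only; the article does not say which pruning methods the Carnegie Mellon team is evaluating, and the function name and sample weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero.

    `sparsity` is the fraction of weights to remove, between 0.0 and 1.0.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0.0 and 1.0")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights from a single layer.
layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
sparse_layer = magnitude_prune(layer, 0.5)
print(sparse_layer)
```

In practice a pruned network is usually fine-tuned afterward to recover accuracy, and the sparsity only saves computation when the target hardware or runtime can exploit it.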

General Electric Research is partnered with the Massachusetts Institute of Technology and is exploring “how humans view these degraded images and make decisions about biometric matching from a human perception perspective” and whether that can inform designs for neural networks performing computer vision tasks.

The insights gained from both teams will be shared with the others and will contribute to the phase three objectives requiring the teams to build systems capable of operating in constrained environments.
