Neurons Make the Robot, NRL Says

YouTube videos of robots running and jumping can be pretty persuasive as to what autonomous technologies can do. However, there is a large gap between robots’ locomotion and their ability to handle and move objects in their environment. Programs at the U.S. Naval Research Laboratory are examining how to close this capability gap and improve the functionality of robots and other autonomous systems.

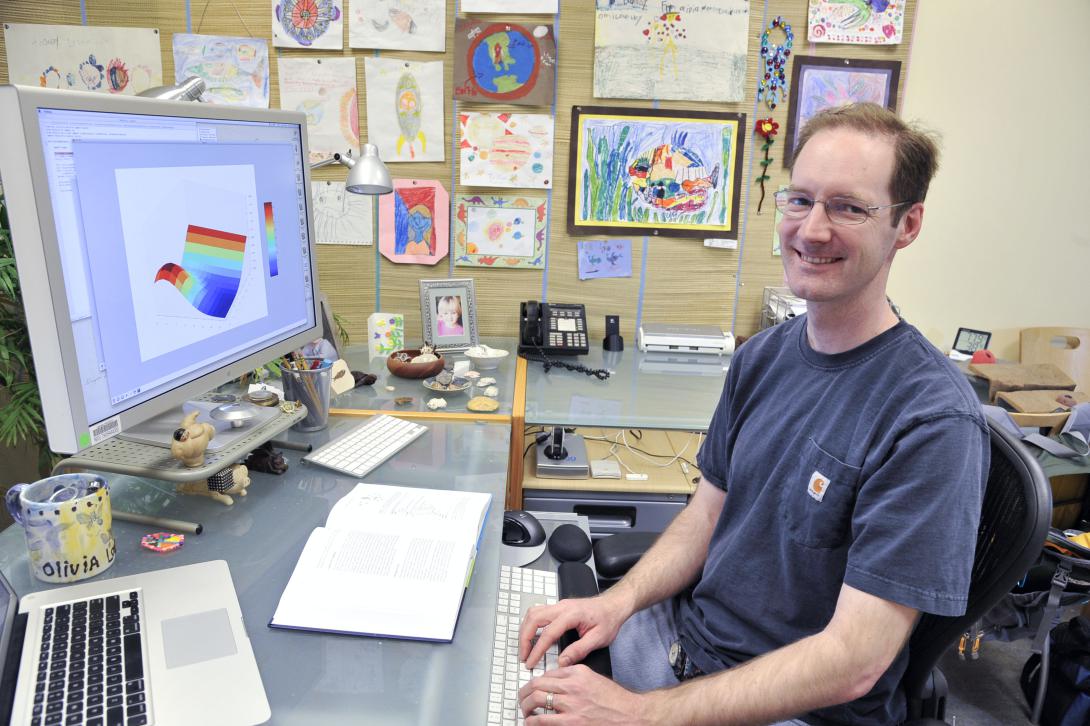

Autonomous capabilities have advanced, especially in the last 10 years, but robots still have a hard time performing ad hoc motions, particularly manipulative movements using a robotic arm or hand, says Naval Research Laboratory (NRL) roboticist Glen Henshaw.

“I think we’re going to get to the point here pretty quickly where we have robots that are as good as humans at being able to understand the world. But they’re going to be really lousy at being able to move around in the world and doing dexterous tasks like we want them to do,” he states.

Henshaw, who has a doctorate in aerospace engineering from the University of Maryland, College Park, and has been researching robots for the past 15 years at the NRL, says there are generally three ways developers make a robot move. Two of these fall short in some area, and one is just beginning to be developed.

One technique is to hand-design all the algorithms used to make a robot walk or move. “That method works really well, as long as you know what the environment looks like and what the robot looks like, and those things don’t change very much,” Henshaw says. The Defense Advanced Research Projects Agency’s (DARPA’s) BigDog quadruped robot was designed that way. For Boston Dynamics, the company that built the robot, “it actually took them about 20 years of research to figure out what those algorithms needed to look like,” Henshaw says. “[That method] is what we call brittle, meaning if you change any of the assumptions, or if you want the robot to do something different, or the environment looks different, then they don’t tend to work so well.”

Another method scientists use to make robots move is called model predictive control, which describes mathematically in equations what a robot should do and then computes the solutions to those equations. “That works pretty well, as long as you’ve got a whole lot of computing power, and as long as you get your equations right,” Henshaw says. “But what I’m really interested in, actually, is the way that humans and animals learn. And it’s pretty clear that whatever we’re doing in our brains, we’re not explicitly solving huge systems of mathematical equations. We’re using some sort of learning algorithm.”
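As a rough illustration of the model predictive control idea described above, the sketch below steers a one-dimensional point mass toward a target. At every time step it simulates each candidate control sequence over a short horizon using the equations of motion, scores the result, applies only the first action of the best sequence and then replans. The point-mass model, cost weights, horizon length and all function names are assumptions chosen for illustration; none of them come from the NRL's work.

```python
from itertools import product

DT = 0.1                      # time step in seconds (illustrative)
ACTIONS = (-1.0, 0.0, 1.0)    # candidate accelerations (illustrative)
HORIZON = 5                   # number of steps to look ahead (illustrative)


def step(state, u):
    """Advance the assumed point-mass model by one time step."""
    x, v = state
    return (x + v * DT, v + u * DT)


def plan_cost(state, plan, target):
    """Predicted cost of a control sequence: position error plus a speed penalty."""
    cost = 0.0
    for u in plan:
        state = step(state, u)
        x, v = state
        cost += (x - target) ** 2 + 0.1 * v ** 2
    return cost


def mpc_action(state, target):
    """Enumerate every plan over the horizon and return the first action of the best one."""
    best_plan = min(
        product(ACTIONS, repeat=HORIZON),
        key=lambda plan: plan_cost(state, plan, target),
    )
    return best_plan[0]


def run(target=1.0, steps=200):
    """Closed loop: replan at every step and apply only the first planned action."""
    state = (0.0, 0.0)
    for _ in range(steps):
        state = step(state, mpc_action(state, target))
    return state


if __name__ == "__main__":
    position, velocity = run()
    print(f"final position {position:.3f}, final velocity {velocity:.3f}")
```

Even this toy version evaluates 243 candidate plans at every step, which hints at why Henshaw says the approach needs a lot of computing power and correct equations: a real robot has many joints, continuous controls and a far less tidy model.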

The third method, which is probably the hardest way to make robots perform complex manipulation tasks, is through machine learning algorithms. The problem there, the roboticist says, is that all the available deep learning algorithms require extremely large training data sets.

“For instance, if you have a deep learning algorithm to learn to recognize cars in pictures, you’ll go to the Internet and get literally a million pictures,” Henshaw shares. “Some of them have cars in them and some of them don’t. And then you’ll use that set of images to train your algorithm. But if you want to train a robot to play basketball, each time you try to shoot the ball into the hoop, that is one instance, and I would need millions of those instances in order to make deep learning work. And I don’t have any way to collect millions of trials of a robot doing something.”

Instead, the NRL is pursuing the development of data-efficient machine learning. Experts describe this as the ability to learn in complex domains without requiring large quantities of data. “What we’re trying to do is figure out how to use machine learning algorithms but make them much more data-efficient so that they can learn with fewer trials than what our current generation of machine learning algorithms appears to need,” he says.

As to how scientists will accomplish that, “The real answer is: Nobody knows yet,” Henshaw admits. “It is wide-open research.”

The NRL is pursuing two relevant areas of study: tailoring neural networks for specific tasks so they can be smaller without sacrificing accuracy and developing active interrogative learning algorithms.

With neural networks, the NRL is trying to understand how to customize the calculations of individual neurons to be more aligned with the kinds of motor functions robots are required to carry out. “You can change the [output] of each neuron just a little bit so that they more accurately learn to perform robotic motor tasks,” Henshaw suggests. “If you have some way of tailoring what each neuron calculates so that it was pertinent to a task, you might be able to get away with having a lot fewer of them. So instead of having thousands or tens of thousands of neurons in your network, you might get away with tens or hundreds. And the fewer neurons you have, the smaller the training set you need. They’ll converge more quickly with less data.”
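To give a sense of scale for the network sizes Henshaw mentions, the short sketch below counts the trainable weights and biases in a plain fully connected network. The layer sizes (12 inputs, 6 outputs, one hidden layer) are hypothetical numbers picked for illustration, and the code only does the arithmetic; it does not model the neuron tailoring the NRL is studying, nor does it show how much data a given network needs.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of units per layer, input first.
    Each layer with n_in inputs and n_out outputs has n_in * n_out
    weights plus n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += (n_in + 1) * n_out
    return total


if __name__ == "__main__":
    # Hypothetical robot controller: 12 sensor inputs, 6 motor outputs.
    large = count_parameters([12, 10_000, 6])   # tens of thousands of neurons
    small = count_parameters([12, 100, 6])      # about a hundred neurons
    print(large, small)  # 190006 1906
```

In this made-up case, shrinking the hidden layer from 10,000 neurons to 100 cuts the number of values to be learned by roughly a factor of 100, which is the kind of reduction that would make a smaller training set plausible.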

For optimal design of active interrogative learning algorithms, the NRL turns to human experience. “If you think about the way a baby learns to pick things up, each time, they’re not just randomly doing some motion and then seeing what happens. They’re doing what is called directed learning, focusing on trying to learn to pick up a specific thing. And each time they try, it’s like a little experiment where they modify the way they use their hands and their arms, and they’re directing themselves on learning how to do some task,” Henshaw says.

In comparison, current deep learning algorithms are “a passive observation of the world,” he states. Given machine learning’s reliance on millions of data points, an algorithm eventually will get the data it needs for a task if the dataset is large enough. However, the size of the dataset can be a limiting factor.

“You’ll get exactly the right images just because you have enough of them,” Henshaw says. “But if you think about trying to learn the way a human does, maybe you can get smarter about what kind of data you collect so that it’s more informative, and then you can speed up your learning. So that’s the other thing that we’re trying to do. We’re treating it as an optimal design of experiments problem. Instead of just trying a bunch of random stuff, we’re trying very directed motions and hoping to get much more useful data out of them.”
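The contrast Henshaw draws between random trials and directed ones can be sketched with a deliberately simple stand-in problem: finding the smallest grip force that holds an object. The task, the `trial` function and the threshold value are hypothetical, and bisection is used here only as a familiar example of choosing each experiment based on what earlier ones revealed; it is not presented as the NRL's algorithm.

```python
import random


def trial(force, threshold):
    """Hypothetical experiment: does a grip of this force hold the object?"""
    return force >= threshold


def directed(threshold, n_trials):
    """Pick each trial at the midpoint of the remaining uncertainty interval."""
    lo, hi = 0.0, 1.0
    for _ in range(n_trials):
        mid = (lo + hi) / 2
        if trial(mid, threshold):
            hi = mid
        else:
            lo = mid
    return hi - lo   # width of the interval that still contains the threshold


def undirected(threshold, n_trials, seed=0):
    """Pick each trial at random, ignoring what earlier trials revealed."""
    rng = random.Random(seed)
    lo, hi = 0.0, 1.0
    for _ in range(n_trials):
        force = rng.random()
        if trial(force, threshold):
            hi = min(hi, force)
        else:
            lo = max(lo, force)
    return hi - lo


if __name__ == "__main__":
    print("directed:", directed(0.37, 10))
    print("random:  ", undirected(0.37, 10))
```

With ten directed trials the remaining uncertainty is exactly 2^-10 of the original range, because every trial halves it. Random trials shrink the range far more slowly on average, since most of them land where the answer is already known.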

On top of that research, the NRL is looking at neuromorphic computing using a specialized processing unit. This novel computer hardware, a neuromorphic chip, is different from what is in a standard computer. “It works more like the way we think the brain works,” Henshaw shares. “It is basically a hardware instantiation of a neural network.” Because the NRL does not have the ability to fabricate chips on-site, it is working with semiconductor chip manufacturers to access the neuromorphic chips they are developing.

Henshaw is optimistic that research efforts into neuromorphic computing will continue to grow. “There are other researchers that are also using these chips for different applications, and we’re not formally working with them, but we’re all sort of in the same small community,” he says. The NRL also is considering collaborations with universities, “but we haven’t actually formalized any agreements yet.”

Until now, robotics research has mostly centered on machine vision, creating a gap in dexterity capabilities. “I think it’s a really interesting research problem because it’s been really almost ignored by the research community for a long time,” Henshaw ventures. “We focused on understanding the world, and we haven’t really focused so much on being able to operate in the world.”

The roboticist cites an example that assistant professor Sergey Levine of the University of California, Berkeley, pointed out about a photo from world chess champion Garry Kasparov’s 1997 match against IBM supercomputer Deep Blue. “If you look at a picture of that match, you see Kasparov on one side of the chessboard, and on the other side of the chessboard, there’s a human being with a computer monitor,” Henshaw notes. “And the takeaway there is, it turns out to be much easier to program a computer to play grandmaster-level chess than it is to program a robot to move the chess pieces around on the board.”

“It is crazy if you think about it,” he says, laughing. “We’ve spent decades trying to figure out the way the higher-level processing in the brain works and being able to replicate that in a computer, and we’ve spent a lot less time trying to improve the way robots can manipulate chess pieces. I think if robots are going to fulfill the sort of future that NRL and [the U.S. Defense Department] want them to have, we’re going to have to get a lot better at both designing them mechanically and teaching them how to learn to do tasks. If we don’t solve it, I think robots probably are not going to fulfill the future that they have been advertised as having.”
