On Point: Q&A With Col. Kathleen Swacina, USA (Ret.)

What does HISPI hope to accomplish with Project Cerebellum?

Ultimately, we’re trying to achieve safe, secure, trustworthy AI. Sometimes we’ll add “responsible,” but if it’s safe, secure and trustworthy, then you’re doing it responsibly. We want AI to serve humanity instead of harming us.

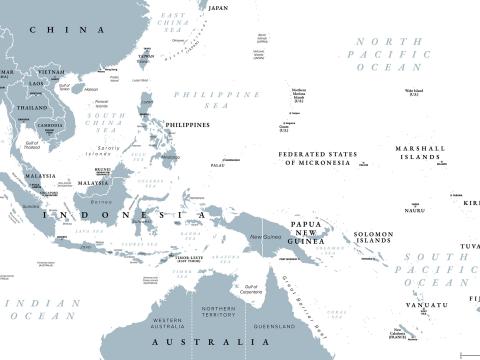

We have developed a model over the past 18 months that includes policies and directives from governments around the globe. We’ve taken the White House policy, European Union, Australia, HIPAA [Health Insurance Portability and Accountability Act], PII [personally identifiable information], NIST [National Institute of Standards and Technology] and ISO [International Organization for Standardization] frameworks, and we’ve pulled those policies together in a model so that you have a basic check sheet to develop AI ethically and within those policies.

Who should adopt the model?

We would like to see governments adopt our model and have industry use it so that industry can self-assess because government does not have full oversight of what industry’s out there developing. Regulation is OK. It’s necessary in some cases, but it’s typically five to 10 years behind. So, what makes more sense is some sort of public-private partnership. It’s not government-led; it’s a partnership. They can still create their own regulatory framework, but this is more collaborative.

The framework we’re using is based on the NIST AI Risk Management Framework, which is taxpayer-funded. The government’s paid for that, so it only makes sense for the government to be an early adopter.

What are the next steps?

We’re trying to get the word out and get exposure and usage of this tool, Trusted AI Model Score, or TAIMScore, which is available for free at ProjectCerebellum.com. We want to see more people look at it and say, “OK, this makes sense,” and start using the model, checking the boxes and staying within the guidelines.

We have panels at the TechNet Fort Liberty Symposium and Homeland Security Critical Infrastructure Conference this month. We’re trying to bring this concept to the flagship events, and we seem to be generating a lot of interest.

What are the challenges to developing responsible AI?

Right now, our challenge is that everybody’s trying to adopt AI and running down the road at 90 miles an hour trying to build the plane as it’s taking off, and they’re not catching on to the policies that are out there trying to catch up to the development process. It’s exciting, but it could almost be like the first commercial plane trying to take off, and they’re still fixing tires or installing brakes.

Many people are treating AI like a new buzzword. We’ve seen that with zero trust and other technical verbiage. But AI has the potential of being something more destructive. That’s why we need to get ahead of what’s being developed out there in industry.

We’ve already seen nation-state threat actors using AI nefariously. This is one of the reasons we should have a global look at ethical AI development.

What examples would you cite?

The Chinese and Russians are both using AI to scam individuals, stealing identifications. AI is being used for aging processes on children’s photos, a kind of deepfake where someone gets a photograph of a child, performs an aging process and then steals their identity.

We’ve also seen sextortion. There are actually AI platforms that allow you to take a photo of someone and literally convert it to a naked photo that is not real.

This column has been edited for clarity and concision.
