Augmented Reality Supports Space Advances

NASA’s Jet Propulsion Laboratory is using a 3D visualization tool to design innovative space probes, including the Mars 2020 Perseverance Rover and its Ingenuity helicopter. The same tool can help researchers plan work in space’s complex environment.

The mixed reality, computer-aided design (CAD) 3D visualization tool is known as ProtoSpace. It has been crucial to the lab’s collaborative development of spacecraft, says Benjamin Nuernberger, ProtoSpace’s technical lead.

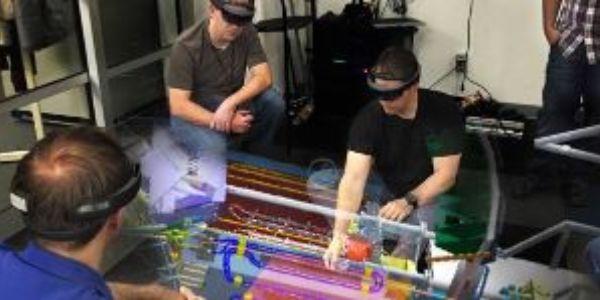

Nuernberger, a mixed reality researcher and developer who guides the Jet Propulsion Laboratory’s (JPL’s) related software architecture design and implementation, explains that ProtoSpace lets scientists view 3D CAD models of spacecraft through augmented reality. To use ProtoSpace, researchers wear the Microsoft HoloLens, a commercial off-the-shelf mixed reality head-mounted display, running JPL’s augmented reality application, which superimposes 3D digital imagery onto the real world. Virtual reality, by contrast, creates an entirely digital world.

“ProtoSpace has been used for various stages of the mission life cycle, all the way from the stage where you have an initial CAD design and you want to communicate that design to other people, to the early design stage and trying to figure out the design decisions, then to find the optimal design and to validate those designs, and for planning and troubleshooting,” Nuernberger notes.

“It’s really great because all the different scientists and engineers can collaborate and see this spacecraft spatially, stereoscopically, floating in a conference room, or maybe overlaid onto the actual hardware in a clean room. It allows them to talk about it as if it was physically there.”

In February, NASA landed Perseverance on Mars for a mission of about two Earth years to search for signs of ancient life, collect rock and soil samples, demonstrate technology and advance the possibility of human exploration of the planet. In April and May, NASA conducted several successful demonstration flights of Ingenuity, first verifying that powered, controlled flight was even possible on the Red Planet, and later testing how aerial reconnaissance and other tasks could aid in the exploration of Mars.

Nuernberger says ProtoSpace was a key tool in helping scientists design both the rover and the helicopter. It also helped them work out where to stow the helicopter on the rover (attached to the rover’s belly) so that the helicopter could reach the planet safely without damaging itself or the rover.

Scientists relied on the mixed reality capability to examine possible problems the rover and helicopter might have encountered during the crucial stages of entry, descent and landing on Mars. In addition, to enable Perseverance’s mission of collecting rock and soil samples from the Martian surface, scientists used ProtoSpace beforehand to test the rover’s ability to drill into rocks.

To explain Perseverance’s power system, JPL again applied the mixed reality solution. Using a version of ProtoSpace’s 3D viewer software, Nuernberger and his team built an interactive web-based application that explains the complexities of the rover’s Multi-Mission Radioisotope Power System.

The versatility of the augmented reality platform has also been put to the test on the International Space Station (ISS), in this case to provide procedural guidance in an operational setting. Astronauts on the ISS conduct experiments and research quantum physics in the Cold Atom Lab. Nuernberger and fellow researchers Adam Braly and So Young Kim showed that augmented reality-based instructions for procedural tasks on the lab’s spaceflight hardware, delivered through the ProtoSpace application, resulted in faster task completion and lower mental and temporal workload for astronauts, with a decrease of almost 20 percent. The research, published in 2019, won the Human Factors and Ergonomics Society’s Human Factors award, which annually recognizes top efforts to advance ergonomics and human abilities in the design of systems, organizations, jobs, machines, tools and products.

More recently, for NASA’s Extreme Environment Mission Operations project, known as NEEMO, JPL researchers applied the mixed reality solution to an ocean environment. Off the coast of the Florida Keys, astronauts and scientists train, live and work underwater in the Aquarius research facility to prepare for living in space on the ISS. “They also tested this technology in the undersea habitat, and they lived there for two weeks,” Nuernberger shares. “We had a procedure with about 20 steps or so across different task areas, and they showed the feasibility of using this technology for procedural type work. In such an extreme environment, you’re not sure if this technology will stand up but it performed pretty well.”

Given the benefits of reducing errors, task duration and astronaut fatigue, NASA JPL will continue to focus on applying ProtoSpace to improve astronaut procedures and guidance, Nuernberger confirms.

JPL researchers also turned to the ProtoSpace platform during the pandemic. In a project called Vital, they created an emergency-use ventilator for COVID patients in about 30 days, Nuernberger explains. “Our work in the Vital project was to create an interactive 3D website to show assembly instructions and operation instructions,” he says. “We used our software to help author step-by-step procedures showing how to assemble these ventilators, so that licensees across the whole world could build these ventilators for COVID patients.”

Stakeholders manufacturing any type of device or complex spacecraft benefit from the augmented reality application’s ability to display a prototype, even when the parties are geographically dispersed. In clean room settings, the platform also lets researchers outside that strictly controlled environment interact digitally with what is being manufactured inside it.

When applied during spacecraft assembly, ProtoSpace also enables scientists to evaluate associated equipment, Nuernberger continues. “When assembling spacecraft, sometimes we buy ground support equipment and before actually purchasing it, it is nice to evaluate it and see if that ground support equipment will actually fit where we want it to fit,” he states. “When you see this all together in augmented reality, rather than just viewing it on a 2D screen, it makes it so much easier to evaluate.”

Moreover, physical hardware can be held up against the digital version of whatever is being designed or built, a crucial capability for checking the clearance of equipment or tools. “Sometimes engineers come in with actual physical tools,” he continues. “They will see the digital model and then they will put the physical tool up to it and then they’ll see, ‘Do I have the clearance? Can I put my hand here without touching other sensitive parts of the physical hardware?’ At least in one case, early on in the design process, they realized that some part of it is not going to be in the right spot, so they have actually updated the design of the spacecraft because it was going to be very hard to assemble if they kept the design like that.”

The software environment for the ProtoSpace platform is a C++ pipeline that can import CAD models to be viewed on the HoloLens, Nuernberger explains. “The software architecture basically takes the CAD model, in CAD model format, and we import it into our proprietary format,” he states.

Like mobile phones or tablets, mixed reality headsets do not have much processing power, he explains. “One thing our software does is it allows you to interactively take these really big, complicated CAD models that are gigabytes, hundreds of megabytes, and we interactively optimize it so that it can be viewed on the HoloLens,” he shares. “That’s one part to our software. Once it’s optimized for rendering, then you actually can view it on the device, so that’s what you see. That is another part of our software.”
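Nuernberger does not say how the optimization works. One common approach in real-time graphics is to simplify meshes until the whole model fits a fixed triangle budget. The sketch below shows only that budgeting step, assuming the model is a list of parts with known triangle counts and that every part is reduced by the same ratio. The `Part` class, the `allocate_triangle_budget` function and the 100,000-triangle budget are hypothetical illustrations, not ProtoSpace code.

```python
from dataclasses import dataclass


@dataclass
class Part:
    # Hypothetical stand-in for one part of an imported CAD model.
    name: str
    triangles: int  # triangle count after tessellating the CAD geometry


def allocate_triangle_budget(parts: list[Part], budget: int) -> dict[str, int]:
    """Return a target triangle count per part so the model fits `budget`.

    Every part is reduced by the same ratio (budget / total). Integer
    arithmetic floors each target, so the targets never sum above `budget`.
    """
    total = sum(p.triangles for p in parts)
    if total <= budget:
        # Already small enough for the headset: keep full detail.
        return {p.name: p.triangles for p in parts}
    return {p.name: p.triangles * budget // total for p in parts}


model = [Part("chassis", 600_000), Part("robotic_arm", 300_000), Part("bolt", 100_000)]
targets = allocate_triangle_budget(model, budget=100_000)
print(targets)  # {'chassis': 60000, 'robotic_arm': 30000, 'bolt': 10000}
```

In a fuller pipeline, each target count would be handed to a mesh simplifier; a part whose target falls to zero is effectively removed from the rendered model.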

The import process also lets scientists tailor what they want to show or work on collaboratively, Nuernberger continues. “As part of this importation process, the user has the chance to interactively choose which parts of the models he or she wants to focus on,” he says. “Maybe some parts of the model they don’t care about. Sometimes they wanted to see the entire model and they will for example, remove little nuts and bolts or fasteners on the spacecraft that they don’t really care about. Then they will optimize it and then it’s in our proprietary format. This model is downloaded from our server onto each headset, each HoloLens device. And then it’s rendered locally on the device. There is no remote rendering on the cloud. It’s all rendered locally on the HoloLens headset in real time.”
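The part selection Nuernberger describes can be sketched as a filter that runs before optimization. This minimal sketch assumes each part carries an axis-aligned bounding box in meters and that small fasteners can be recognized by size; the 2 cm threshold, the `keep` and `drop` overrides and every name below are hypothetical, since the article does not say how ProtoSpace represents parts.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class CadPart:
    # Hypothetical part record: an axis-aligned bounding box in meters.
    name: str
    bbox_min: tuple[float, float, float]
    bbox_max: tuple[float, float, float]

    def size(self) -> float:
        """Length of the bounding-box diagonal, a rough measure of part size."""
        return math.dist(self.bbox_min, self.bbox_max)


def select_parts(parts, min_size_m=0.02, keep=frozenset(), drop=frozenset()):
    """Choose which parts to send on for optimization.

    Parts smaller than `min_size_m` (e.g. nuts, bolts, fasteners) are
    filtered out unless the user pins them in `keep`; anything the user
    lists in `drop` is excluded regardless of size.
    """
    selected = []
    for part in parts:
        if part.name in drop:
            continue
        if part.name in keep or part.size() >= min_size_m:
            selected.append(part)
    return selected


parts = [
    CadPart("solar_panel", (0.0, 0.0, 0.0), (1.0, 0.5, 0.02)),
    CadPart("fastener_17", (0.0, 0.0, 0.0), (0.01, 0.01, 0.01)),
    CadPart("temp_sensor", (0.0, 0.0, 0.0), (0.005, 0.005, 0.005)),
    CadPart("harness_cover", (0.0, 0.0, 0.0), (0.3, 0.1, 0.1)),
]
chosen = select_parts(parts, keep={"temp_sensor"}, drop={"harness_cover"})
print([p.name for p in chosen])  # ['solar_panel', 'temp_sensor']
```

A size threshold is only a heuristic, which is why the quote stresses that the choice is interactive; the `keep` and `drop` sets stand in for a person overriding it.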

In addition, the software tracks the user’s hand movements, enabling interaction with the CAD model: users can move, rotate and scale a spacecraft to view it in different positions, he notes. Because scientists do not have to explain the intricacies of a spacecraft’s design through traditional methods such as slideshows, “it allows them to reduce the cognitive load of trying to understand inherently spatial things like this.”
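Moving, rotating and scaling a hologram are conventionally combined into a single 4x4 model matrix applied to every vertex. The sketch below composes them in the common translate-rotate-scale order (scale applied first), assuming gesture input has already been reduced to a translation in meters, a rotation about the vertical axis in degrees and a uniform scale factor. It is a generic graphics illustration, not ProtoSpace or HoloLens SDK code, and limiting rotation to one axis is a simplification.

```python
import math


def model_matrix(translation, yaw_deg, scale):
    """Build a 4x4 matrix M = T @ R_y @ S for column vectors (row-major lists)."""
    tx, ty, tz = translation
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    # Upper-left 3x3 is a rotation about +Y times a uniform scale;
    # the last column holds the translation.
    return [
        [c * scale, 0.0, s * scale, tx],
        [0.0, scale, 0.0, ty],
        [-s * scale, 0.0, c * scale, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(m, p):
    """Apply M to a 3D point using homogeneous coordinates (w = 1)."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(m[r][k] * v[k] for k in range(4)) for r in range(3))


# Double the model's size, turn it 90 degrees, then move it 1 m along x.
m = model_matrix((1.0, 0.0, 0.0), yaw_deg=90.0, scale=2.0)
print(tuple(round(v, 6) for v in transform_point(m, (1.0, 0.0, 0.0))))  # (1.0, 0.0, -2.0)
```

Applying scale before translation means a pinch-to-scale gesture does not also stretch the distance the model has been moved; a scale of 1.0 shows the spacecraft at full size.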

Nuernberger acknowledges that it is a little more challenging to test the augmented reality software. “It’s not just tested on a laptop or tablet, which are readily available,” he says. “For us, we’re using these mixed reality headsets so it’s a little harder to test and automate the testing. When you find a bug, and then to ensure that bug has been fixed and to automate that, it is a little harder.”

Nuernberger and his team will continue to update the ProtoSpace platform, advance its mixed reality capabilities and apply it to NASA’s missions, supporting both astronauts and the development of spacecraft and aircraft. The augmented reality platform’s greatest asset will continue to be its powerful collaboration capabilities, he says.

“The collaboration aspect of ProtoSpace is really cool because you hear anecdotes such as, ‘We were trying to solve some problem over email or through sharing PowerPoint slides for several weeks or months, and then right when we put on the headsets, we almost immediately knew what the solution was,’” Nuernberger notes. “The inherent nature of this 3D CAD model is the 3D spatial aspect. To see a model on the computer screen is OK and, in some cases, it works, but to see it in 3D, in a collaborative fashion, where everyone can see the same thing, in the same position, in the same spot, makes it so much easier to communicate and refer to things. And to be able to see it full scale, to really understand how big some of these spacecraft are, that’s crucial.”
