Convergence of the Physical and Digital Worlds

Researchers envision a day when shape-shifting materials, novel sensors and other interactive technologies replace the flat, insipid computer screen. Such advances will allow users to interact with information and data in a tactile manner, enhancing their understanding of it. Researchers on the cutting edge of human-computer interaction are working on physical representations of data. Computer scientists contend that computers can, and should, output information in ways that mirror the adroitness and expressiveness of the human body.

One researcher who employs that theory is Daniel Leithinger, assistant professor at the ATLAS Institute and the Department of Computer Science at the University of Colorado Boulder. He suggests that harnessing physical movements as part of computing is the next step in the evolution of electronic processing and human interaction.

“It is digital clay, powered by computer design that incorporates and communicates human movement, expressions and dexterity,” the professor says. “These interfaces allow users to touch, grasp and deform data physically.”

Leithinger, who is also the director of the lab known as Transformative Human Interfaces for the Next Generation, or THING—where researchers consider shape-changing materials, new sensors and unique design methods to make digital information tangible—contends that the ability to render information physically through a digital format can help computer-dependent humans understand it better. “It can change the environment around us and improve expressivity as well as understanding,” he says.

The professor’s work over the last 10 to 15 years—including at the Massachusetts Institute of Technology’s (MIT’s) Media Lab, where he received a doctorate in 2015—has focused on advanced computer interfaces and what he refers to as shape displays, or physical shape-changing user interfaces connected to a computer.

Although industry offerings in virtual reality have expanded the interaction between humans and the physical world, the experience can be improved, Leithinger suggests. “So while the head-mounted displays right now have sensors that are amazing, and I can gesture anywhere in the room and the system will take care of sensing that information, I can’t really feel the content. There’s always a lack of touch,” he says.

Shape displays fitted with computer interfaces, such as the early units he and other researchers developed at the Media Lab, are meant to bring computing out of the nearly ubiquitous flat displays and into the real world, Leithinger states.

“I think the way we use computers right now is kind of absurd,” Leithinger declares. “We are only using our vision and auditory perception as our primary ways of getting information. And we are sitting at computers eight hours a day. We are hunched over when we don’t need to be, and it just deprives our bodies of all the amazing capabilities that are inherent to us.”

Haptic interfaces do provide a tangible connection to digital information. Most people are familiar with at least simple haptic interfaces, such as the vibrating alarm built into smartphones, the professor notes. “Historically speaking, one of the first applications for haptics was in airplanes to give pilots the feedback from the wind through the rudder,” he says. “That proved to be crucial for being able to successfully fly the plane, to get that information.”

Leithinger says he thinks that type of prompting application is relevant again, especially given the move to more human interaction with autonomous systems, such as with self-driving cars. “If we don’t provide enough visceral feedback in the autonomous systems that we interact with, the human in the loop just checks out, and it shifts from a collaboration with the system to taking a back seat and a tendency to ignore what is happening,” he warns.

Haptics could give humans an understanding of what a system is up to with a message that is not as aggressive as a flashing light or a blaring siren. That kind of interface also could give humans a fast way of intervening if necessary.

The professor expects to see a lot of innovation in haptic interfaces in the near future—“beyond the vibratory ones that we have right now.” This could include tactile, kinesthetic and proprioceptive interface developments, in which perceptions of touch, body movements or physical activity, and the relative position of users’ bodies, respectively, all play a role.

To achieve that progress, interface development needs to converge with material science. Research is critical, especially into soft materials, meaning components that can change shape, change stiffness or move autonomously, Leithinger opines. The traditional approach of robotics-driven haptic interfaces, while successful in special cases such as medicine or telerobotics, “can only get us so far,” he says. “The combination of human-computer interaction and material science, that is for me the most exciting, and it pretty much embodies where I think computing interfaces are going to go. It is the next frontier, which will not only bring sensing into the real world but also communication output. For that to happen, though, we need new materials if we want to go beyond visual information.”

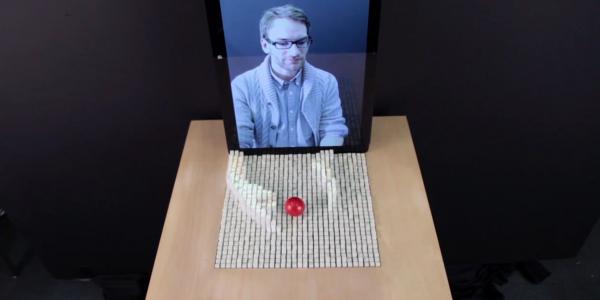

Researchers channeling innovations in interfaces and material science, however, need to harness advancements in prototype development and be able to prototype quickly. Leithinger came to this conclusion at the MIT Media Lab when working on the inFORM project, a very complex electromechanical assembly. InFORM is a dynamic shape display that can render 3D content physically so users can interact with digital information in a tangible way, Leithinger says. The inFORM device also can interact with the physical world around it. For example, it can move objects on the surface of the device’s table. In another application, the device allows remote participants in a videoconference to be displayed three-dimensionally, which enables a strong sense of presence and the ability to interact physically at a distance, the professor explains.

“The problem with [inFORM] was that you had to take a lot of bets on how useful it was and what applications you could use it for before you could actually test it, because it took a long time and was expensive to build,” he says.

Although many methods exist to prototype and test software, the same is not true for physical interfaces. “In software engineering, there’s a lot of really wonderful, well-established methods to test interfaces before you need to program them,” Leithinger continues. “You can sketch a user interface on paper and present them for users and walk through with them. You can have things like Wizard of Oz prototyping, where you don’t need to program the whole functionality. For physical interfaces, we don’t really have any good tools for that. So what we are looking at now are ways of building prototyping environments that will allow us to test much, much quicker what the experience will be.”

For one research project at ATLAS, Leithinger and his students are sketching interfaces with inexpensive materials such as cardboard and found objects. “Then we are putting people in virtual reality, where they can both feel those objects and have a visual representation,” he notes. “And then humans can actually move the objects, do interactions and test them.”

Leithinger cautions that the work is preliminary, “but we’ve had some pretty encouraging results, and I think that it’s going to be a really interesting and hopefully extremely useful in the future.”

In another Media Lab project, Programmable Droplets for Interaction, in which Leithinger played an advisory role, Udayan Umapathi, CEO of Droplet.IO Inc., along with Patrick Shin, Ken Nakagaki and Hiroshi Ishii, used a technique called electrowetting to move and control water droplets. Using an array of electrodes, the researchers programmed the movement of droplets of water across an electronic board. “We were looking into how the droplets of water could be used as an information display,” Leithinger says.

The researchers presented the study in April at the Association for Computing Machinery’s (ACM’s) CHI 2018: ACM Conference on Human Factors in Computing Systems. The Media Lab reported in July that the group received the organization’s Golden Mouse Award for its breakthrough research. “It’s an awesome project to simplify how droplets can be manipulated and mixed for biology lab experiments, among other applications,” Leithinger says.

The professor opines that with the convergence of the digital and the physical worlds, whether through droplet manipulations, shape displays or other physical representations, humans will have much better opportunities to collaborate with each other and share information as well as to experience information tangibly.

“It’s pretty much everyone I speak to, whether it’s my parents who rarely use computers to my colleagues who use them extensively every day, all thinking that there must be a better way of experiencing information than the current way. We need to rethink how we can wear interfaces or have objects that can take on forms and output information,” Leithinger advises.
