NASA Leverages Video Game Technology for Robots and Rovers

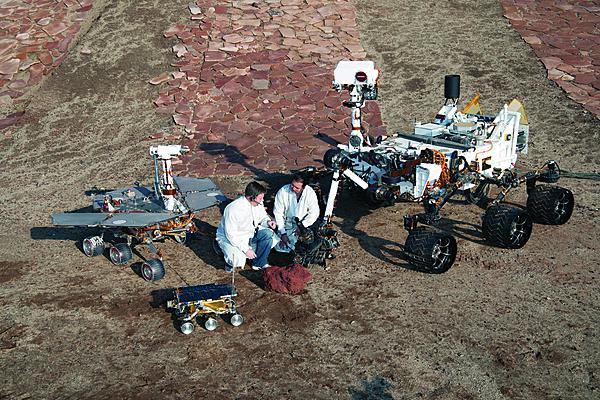

The earthbound technologies and computer programming that make most popular video games possible are driving the development of the remote-controlled robots NASA now uses in the unmanned exploration of Mars and the solar system. Those improvements in hardware and software also spur innovation in the next generation of robots envisioned for use by government and industry. That matters because NASA recently proposed a new, multiyear program of sending robot explorers to Mars, culminating in the launch of another large scientific rover in 2020.

“The technologies and the software that the video game industry has developed for rendering data, scenes, terrain—many of the same visualization techniques and technologies are infiltrating into the kinds of software that we use for controlling spacecraft,” according to Jeff Norris, manager of the Planning and Execution Systems Section with NASA’s Jet Propulsion Laboratory (JPL) in Pasadena, California. In a similar way, joysticks and gaming devices such as the Microsoft Xbox Kinect are examples of gaming hardware with functional analogues in the systems used to control robotic spacecraft.

At its most basic, conceptual level, human control of a robot is about making the device act as a surrogate for a person, something Norris believes is not that dissimilar to a person using an avatar to defeat an enemy in a video game. As a top robotics expert, Norris helped to build and program the Mars robot Curiosity, informally referred to as a rover. Whether the robot is 55 million miles away exploring the surface of Mars or in the next room, one important concept to remember is that when people are controlling a robot, “you’re trying to put them in touch with the state of the robot and its environment so they can make great decisions,” explains Norris. Those decisions are then reflected in the robot’s subsequent actions, whether that is a new set of movements or commands to sample soil from a new location. The goal, he says, is to give the human controller some sense of the robot’s environment, to make the robot an extension of the controller’s body and to make the robot respond to his or her commands. The challenge is to put the right information in front of the human in a timely manner and then to have the robot carry out the human’s commands accurately.

An important goal for the video game designer is to achieve fidelity, which means giving the game player as realistic an experience as possible. This extends to sight, through photo-realistic images on a computer screen; to sound, through surround systems best heard on high-fidelity speakers or headphones; and even to touch, through high-end hand controllers that provide tactile and haptic feedback synchronized with events in the game. For different reasons, fidelity is just as important for the system used to control a robot, but its importance fluctuates depending on the situation and context in which the robot and its human controller find themselves. “We not only have to accurately represent what we know about the robot and its environment but also have to accurately represent what we don’t know,” Norris explains.

As an example, Norris suggests a scenario in which a Mars robot relays back to Earth the shape of the ground in front of it, but its sensors are unable to capture other important data, such as the composition of the soil or the presence of water. In a video game, a programmer might include additional data to enhance the fidelity of that scene for the game player. In the case of the Mars robot, however, “the place where you were unable to gather data might be hazardous; it might have a hole or a rock that you don’t want to drive into,” he explains. As future robot explorers are designed and programmed, it is important that a “higher standard for fidelity and realism” be built into the systems, and NASA scientists have much to learn from the gaming industry when it comes to building more realism into robotic control systems, Norris acknowledges.
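The idea of representing what is not known can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not NASA's software: the `Cell` states, `is_drivable`, and `safe_prefix` are hypothetical names invented here. The point is that a cell with no sensor data is kept as its own state and is never treated as safe ground.

```python
from enum import Enum


class Cell(Enum):
    """Hypothetical states for one patch of terrain in front of the robot."""
    CLEAR = "clear"      # sensed and judged safe
    HAZARD = "hazard"    # sensed and judged unsafe (rock, hole)
    UNKNOWN = "unknown"  # no sensor data; must not be guessed


def is_drivable(cell: Cell) -> bool:
    """Only cells with positive evidence of safety count as drivable."""
    return cell is Cell.CLEAR


def safe_prefix(path: list[Cell]) -> int:
    """Return how many leading cells of a planned path can be driven."""
    count = 0
    for cell in path:
        if not is_drivable(cell):
            break  # stop at the first hazard or data gap
        count += 1
    return count


planned_path = [Cell.CLEAR, Cell.CLEAR, Cell.UNKNOWN, Cell.CLEAR]
print(safe_prefix(planned_path))  # 2: the drive ends before the data gap
```

A game renderer could fill the unknown cell with plausible-looking terrain; here the gap is preserved so the operator sees it and plans around it.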

Asked to elaborate on what factors might contribute to improved fidelity in robotic control systems, Norris points to advances in graphics performance through faster video processing chips, a phenomenon driven for the most part by the gaming community and gamers’ appetite for ever more realistic video experiences. “We just ride that wave and make use of their advances, and happily so,” he acknowledges. In addition, advances in graphics software and video game engines, borrowed in part from the movie industry, have resulted in highly realistic three-dimensional displays that scientists designing robotic systems have adopted as well. “Games push the envelope on how we’re going to render a landscape effectively so that it looks right,” Norris explains.

When it comes to controlling NASA’s Mars Curiosity robot—a car-sized science platform with six wheels and a variety of cameras and sensors deployed on booms extending from the vehicle—the joysticks and keyboards found in the JPL control room are very similar to what gamers would use. Norris explains that NASA capitalized on the “many, many millions of dollars that the companies making these joysticks and other input devices have invested into industrial design and user testing to build devices that are comfortable to hold for long periods of time. We would be foolish in my business to ignore all of that progress.”

Perhaps the most challenging part of operating NASA’s Curiosity robot from Earth is compensating for both the distance and the time separating the robot from its human masters. Robotics experts condense this challenge into the word “latency,” described in engineering terms as the time delay experienced by a system processing data. It is something also faced by local police bomb squads who use robots to disarm possible explosives, scientists using ocean-going autonomous robots in maritime research, and pilots at U.S. military bases operating unmanned aerial vehicles in Iraq and Afghanistan.

Norris notes that a common complaint among gamers who play massively multiplayer games on a server over a network is latency: the game’s performance deteriorates along with the network’s, and players’ views of the game state fall out of sync. Those delays are typically measured in milliseconds. Compare that to a one-way signal delay of roughly 3 to 22 minutes between Earth and Mars, which are between about 35 million and 250 million miles apart, depending on their positions as they orbit the Sun. “Because of that, we do not, and cannot, control the Mars rover in real time with a joystick or a gaming device. One of the ways you deal with latency in a system like this is by giving the system a longer list of things to accomplish,” Norris explains.
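The size of that delay follows from simple arithmetic: a radio signal travels at the speed of light, so the one-way delay is the distance divided by that speed. The short Python sketch below works through it. The 140-million-mile distance is an illustrative assumption chosen for this example, and the function names are hypothetical.

```python
SPEED_OF_LIGHT_MILES_PER_S = 186_282.397  # speed of light in vacuum


def one_way_delay_minutes(distance_miles: float) -> float:
    """Time for a radio signal to cross the given distance, in minutes."""
    return distance_miles / SPEED_OF_LIGHT_MILES_PER_S / 60.0


def round_trip_delay_minutes(distance_miles: float) -> float:
    """Time to send a command and receive the robot's response."""
    return 2.0 * one_way_delay_minutes(distance_miles)


# Illustrative Earth-Mars separation (an assumption, not a measured value).
distance = 140_000_000
print(f"one way:    {one_way_delay_minutes(distance):.1f} min")    # about 12.5
print(f"round trip: {round_trip_delay_minutes(distance):.1f} min")  # about 25.1
```

At that separation, an operator who nudged a joystick would wait roughly 25 minutes to see the result, which is why commands are sent in batches instead.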

For example, NASA’s Opportunity rover, which has been exploring Mars for several years now, is usually given between one and three days’ worth of commands to perform autonomously. “At the end of that, it communicates with us, and reports on what happened when it ran the last commands, its current status, the condition of its batteries and then asks for its next instructions. We look at the results, and then give it a new set of commands.” In part because of what NASA has learned from Opportunity and its twin, Spirit, Curiosity is able to get new commands almost daily and send back its results within a day. This, Norris says, allows controllers to run scientific experiments that last for more than one or two days.

Video game technology, Norris says, also gives robotics controllers an edge in overcoming the effects of latency when operating robots across interplanetary distances. “We can still build virtual three-dimensional environments in a virtual model to map out what the rover is going to do, and the output of that planning activity is a set of commands that goes to the rover and is executed over the course of several days.”

Another tool for countering the effects of latency is to give the robots a degree of autonomy, the ability to act independently when needed. “The Mars rover is capable of driving and avoiding obstacles on its own for a period of time, it’s capable of managing its own life functions, making sure that its battery has sufficient charge, protecting itself from major problems like driving into a major hole or off a ledge. It can stop itself,” Norris explains.
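The combination Norris describes, a queued batch of commands plus the ability to stop itself, can be sketched as a small Python loop. This is a toy model under loud assumptions, not the rover's flight software: the `Rover` class, the command names, the battery floor, and the energy costs are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, field

MIN_BATTERY_PCT = 20.0                      # hypothetical safety floor
COST_PCT = {"drive": 5.0, "sample": 10.0}   # hypothetical energy costs


@dataclass
class Rover:
    position: int = 0            # index of the terrain cell the rover is on
    battery_pct: float = 100.0
    log: list = field(default_factory=list)


def run_batch(rover: Rover, commands: list, hazards: set) -> dict:
    """Run queued commands with no operator in the loop, then report back."""
    stop_reason = "batch complete"
    for command in commands:
        # Self-protection: refuse any command that would drain the battery.
        if rover.battery_pct - COST_PCT[command] < MIN_BATTERY_PCT:
            stop_reason = "battery low"
            break
        if command == "drive":
            # Self-protection: never drive into a known hazard.
            if rover.position + 1 in hazards:
                stop_reason = "hazard ahead"
                break
            rover.position += 1
        rover.battery_pct -= COST_PCT[command]
        rover.log.append(command)
    # The report that goes back to the operators for the next planning cycle.
    return {
        "executed": list(rover.log),
        "position": rover.position,
        "battery_pct": rover.battery_pct,
        "stop_reason": stop_reason,
    }


report = run_batch(
    Rover(), ["drive", "drive", "sample", "drive", "drive"], hazards={4}
)
print(report["stop_reason"], report["position"], report["battery_pct"])
# hazard ahead 3 75.0
```

The rover abandons the rest of the batch when it cannot proceed safely, and the report tells operators why, so the next set of commands can route around the problem.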
