Researchers Adopt a New Perspective on Extended Reality

Imagery is yielding to meaning as extended reality heads down a new path of evolution. Where developers traditionally have concentrated on improving graphics to the point of realism, they now are shifting their focus to a different kind of realism that emphasizes meaning over appearance.

This approach is opening new doors for applications of extended reality, also known as XR. Uses such as automated driving, design for manufacturing, augmented reality and firefighting assistance already are growing in popularity and effectiveness, and varieties of those applications are on the horizon. In a few years, XR may be able to aid fighter pilots and the vision impaired.

William Bernstein, project leader, Extended Digital Thread Project, Systems Integration Division, National Institute of Standards and Technology (NIST), describes how functional realism already has supplanted photorealism as the most important goal of an XR application. He likens the difference to a Hollywood movie in which striking computer-generated imagery dominates a thin plot versus a crudely drawn animated feature that goes deep into the human condition. XR is shifting its emphasis away from superficial but spectacular graphics and toward the animation with deeper meaning.

“Functional realism should be the goal of any [XR] researcher,” he declares.

Simply showing a realistic image does not go far enough in exploiting the potential of XR. Instead, the goal is to portray the location and function of elements or entities in a scene, “a functional characteristic of the scene,” Bernstein elaborates.

“We should test the limits of our own sensing capabilities, whether we’re able to sense something without seeing it … or even infer some sense capability,” he says. “We could do a lot in terms of extending our reality from just displays. We’re reaching a point where the quality of a display could be considered diminishing returns. There might be some other valuable assets to expand our horizons in the world of XR.”

That is apparent when XR is applied to critical or dangerous environments such as surgery or welding. Getting the function right is much more important than photorealism, he says. For example, a study on XR-assisted surgery revealed that creating realistic-looking virtual body organs did not help the surgeon as much as establishing the proper location for performing tasks. As obvious as this may seem, only recently has photorealism begun to take a back seat.

Another important trend, which goes into an entirely new area, involves extending human senses. One XR team has discovered vestigial structures in the back of cones in human retinas that serve an undiscovered role. While most people cannot see at a greater peripheral range than 105 degrees, preliminary tests have indicated that some people can detect objects far beyond that range. These vestigial structures in the eye cones are the source of this ability, and scientists are working to take advantage of these extended senses, Bernstein says.

Technologies might mimic or complement these senses for people who have lost more ordinary sensing functions, such as those with impaired eyesight. Fighter pilots might have blinking lights in their headrests that would not distract them but would guide their decision-making in air combat.

The path to these breakthroughs goes through near-term developments that will support future applications. Bernstein relates that some applications already have taken hold, such as autonomous vehicles and assisted driving.

The NIST XR Community of Interest (XRCOI) was known as the NIST Virtual Reality (VR) COI as recently as 10 years ago. Bernstein relates that the COI was reinvigorated about two years ago and comprises like-minded NIST researchers who use XR technologies to solve problems in specific use cases or domains. He emphasizes that while some people focus on XR as a whole, most specialize in a particular domain.

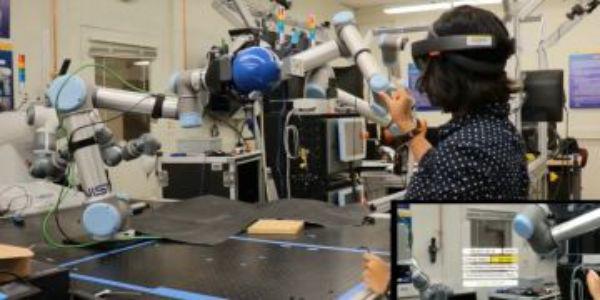

These domains reside in focus areas in NIST, Bernstein explains. For XR, the focus cuts across four main application areas: human robotics interaction, smart manufacturing, public safety and fire research. These have multiple subtopics, he says.

Some applications are at hand. For example, a law enforcement officer attending to a car on the side of a road must engage in person-to-person conversation and then turn to a laptop affixed to the officer’s vehicle. Each of these interactions offers the potential for introducing errors into the incident. But in cases where a police officer simply exits his or her car and relies on a computer vision-equipped camera for collecting and relaying information about the other vehicle, the officer’s efficacy improves and threats to safety are reduced. By matching basic data with database information, an XR system can limit human error and improve the likelihood of a safe outcome, Bernstein says.

Another key area is firefighting. Augmented reality traditionally has been associated with location-based services because of the importance assigned to placing digital objects appropriately into a physical space, he allows. “Obviously, it makes it very difficult to properly place data in a building that’s burning and crumbling to the ground,” he points out. Researchers are exploring several approaches to solving that problem, although much work remains to be done.

NIST long has been involved with experimental conflagration, Bernstein relates. Understanding the physics of fire is the focus of a laboratory that builds three-story structures only to set them ablaze to see how the fire progresses, both internally and structurally. This effort also studies wildfires. Bernstein emphasizes that this research focuses on how a fire spreads, not how to interact with it.

One tool for this research is a 360-degree camera encapsulated in a water-cooled glass globe. This construct can be placed within a real fire and record how a room burns. A web application allows generation of XR scenes on a browser in which elements are spatially registered as points of interest and placed directly on the video of the fire. A researcher can view the fire spread of a typical living room, for example, and gain insight into risk mitigation.
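As a rough illustration of what spatially registering a point of interest on 360-degree video can involve, the sketch below maps a viewing direction to pixel coordinates in an equirectangular frame, a common layout for 360-degree footage. The article does not say which projection or code the NIST web application uses, so the function name, the angle conventions and the frame size here are assumptions made only for illustration.

```python
def direction_to_pixel(yaw_deg, pitch_deg, width, height):
    """Map a viewing direction to (x, y) in an equirectangular frame.

    Assumed conventions (hypothetical, not taken from the NIST tool):
    yaw 0 is the horizontal center of the frame and increases to the right;
    pitch 0 is the horizon, +90 is straight up and -90 is straight down.
    """
    if not -90.0 <= pitch_deg <= 90.0:
        raise ValueError("pitch must be between -90 and 90 degrees")
    # Wrap yaw into [-180, 180) so any angle lands inside the frame.
    yaw = (yaw_deg + 180.0) % 360.0 - 180.0
    # Longitude spans the full frame width, latitude the full height.
    x = (yaw + 180.0) / 360.0 * width
    y = (90.0 - pitch_deg) / 180.0 * height
    return x, y


# A hypothetical marker looking straight ahead at the horizon
# in a 3840 x 1920 frame.
marker_xy = direction_to_pixel(0.0, 0.0, 3840, 1920)
```

A marker placed this way stays attached to the same direction in the scene as the viewer pans around the video, which is the property that makes an annotation on a fire recording useful.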

For on-site work, firefighters can explore an existing virtual model before they enter a burning building, he notes. Some work aims to augment the firefighter’s helmet with displays showing vital information, such as temperature and carbon monoxide levels, directly on a helmet viewplate without impeding the view of the firefighter.

In manufacturing, two primary use cases dominate XR for large industrial programs: assisted maintenance and training. In training, XR can help instruct assemblers to put together complex products. For maintenance, studies have shown massive gains from XR for field maintenance, Bernstein says. These gains encompass proficiency as well as safety. For example, an electrical engineer about to work on a transformer must be certain his movements do not bring him into contact with high-voltage connections. Training with XR can help engineers understand the potential risks in a given situation.

Bernstein explains that the NIST robotics and manufacturing programs are intertwined. One aspect of robotics research at NIST involves automated guided vehicles (AGVs), which are mobile robots popular in warehouses for picking. The most critical aspect of AGV operation is to properly report location, he states, and experts have undertaken significant standards development for testing how accurately AGVs report their locations.

For example, if an AGV with a robotic arm doesn’t know its precise location, then manipulating that robot to pick a fine part off a table will be difficult, he points out. With XR, a holistic view of a warehouse or factory populated with AGVs requires a clear sense of their locations. Without it, a number of safety hazards arise.
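One simple way to picture a test of how accurately an AGV reports its location is to compare the reported positions against independently measured ones. The sketch below is only that, a sketch: it is not the NIST test method or any published standard, and the function names, the two-dimensional coordinates and the sample values are hypothetical.

```python
import math


def localization_errors(reported, ground_truth):
    """Distance between each reported position and its measured position.

    Both arguments are sequences of (x, y) tuples in the same units.
    """
    if len(reported) != len(ground_truth):
        raise ValueError("reported and ground_truth must have the same length")
    return [math.dist(r, g) for r, g in zip(reported, ground_truth)]


def summarize(errors):
    """Mean and worst-case error for a non-empty list of errors."""
    return {"mean": sum(errors) / len(errors), "max": max(errors)}


# Hypothetical sample: positions in meters.
reported = [(0.0, 0.0), (3.0, 4.0)]
measured = [(0.0, 0.0), (0.0, 0.0)]
summary = summarize(localization_errors(reported, measured))
```

The worst-case figure matters as much as the average here, since a single large error is enough to make a fine pick fail or to misplace a robot in an XR view of the factory floor.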

Then comes training robots remotely, and XR can play an even greater role here, Bernstein suggests. One issue involves digital twinning of the robots. The lack of a clear and rapidly updating view of the robot in the virtual world makes it difficult to use XR technologies, and this problem becomes more acute for training fine movements of two-armed robots, he notes. Researchers at NIST are looking at interfaces and proxies for training robots, he adds.

But achieving the full potential of XR will require “a coalescence of standards,” Bernstein offers. This coalescence would involve the XR community, which already has made significant advances in standards, as well as potential users. The community has established standards for digital representations, spatial placement of objects within scenes and the presentation of scenes on browsers.

In using XR for industry, the data standards of the application domains and the XR domain lack clear coherence with one another, he adds. Researchers in smart manufacturing have been working on standards that define products, manufacturing situations and sustainment and inspection. These standards, although quite mature, are difficult to harmonize, Bernstein says. This harmonization challenge has been identified and people are working on it. Yet, this work lacks the necessary cohesive vision that must come from a group comprising domain experts, subject matter experts and developers working on “the next great idea in XR,” he states. “There are many places we can go in the next 10 years. But if there is not a clear cohesive vision and a coalescence around that concept of connecting XR standards with domain standards, we’ll just be working on that for the next 10 years.”

If this standards challenge is solved quickly, then several advances loom. One Bernstein cites is on-demand scene creation. Today it takes a full team of computer scientists and application domain experts to form a pipeline to generate a useful XR scene for a particular task. Instead, the standards coalescence will make it possible to translate a fully functional XR scene to a different application, he predicts, adding that this is essential to push industrial XR forward.

Complementing this requirement is the need for an industrial XR testbed. Bernstein says that this testbed would bring together academia, government and industry to work on XR. He doesn’t know who would create it, but it would open the door to development of baseline understanding of the challenges across different use cases, he says. It also would help startup XR companies focus on likely growth areas in the dynamic technology area.

“Many of the challenges for particular domains are very similar to others, and we’ve noticed that very quickly in the XRCOI,” he observes. In the same vein as the COI meetings, having a central location for people to test similar capabilities would boost XR progress.
