U.S. Government Bets Big on Data

A multi-agency big data initiative offers an array of national advantages.

U.S. government agencies will award a flurry of contracts in the coming months under the Big Data Research and Development Initiative, a massive undertaking involving multiple agencies and $200 million in commitments. The initiative is designed to unleash the power of the extensive amounts of data generated on a daily basis. The ultimate benefit, experts say, could transform scientific research, lead to the development of new commercial technologies, boost the economy and improve education, all of which makes the United States more competitive with other nations and enhances national security.

Big data is defined as datasets too large for typical database software tools to capture, store, manage and analyze. Experts estimate that in 2013, 3.6 zettabytes of data will be created, and the amount doubles every two years. A zettabyte is equal to 1 billion terabytes, and a terabyte is equal to 1 trillion bytes.
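To make those figures concrete, the short Python sketch below works through the unit arithmetic and extends the 2013 estimate forward. It assumes decimal (SI) byte units and a perfectly steady two-year doubling period, which is a simplification of the experts' estimate rather than a forecast; the function name `projected_zettabytes` is hypothetical and used only for illustration.

```python
# Unit sizes in bytes, using decimal (SI) definitions.
TERABYTE = 10**12   # 1 trillion bytes
ZETTABYTE = 10**21  # 1 billion terabytes


def projected_zettabytes(year, base_year=2013, base_zb=3.6, doubling_years=2):
    """Extrapolate yearly data creation, ASSUMING steady doubling.

    base_zb and doubling_years come from the estimate quoted in the
    article; the smooth exponential curve is an illustrative assumption.
    """
    return base_zb * 2 ** ((year - base_year) / doubling_years)


if __name__ == "__main__":
    # How many terabytes fit in one zettabyte.
    print(ZETTABYTE // TERABYTE)
    # Estimated zettabytes created in selected years.
    for year in (2013, 2015, 2017):
        print(year, round(projected_zettabytes(year), 1))
```

Under these assumptions, the 3.6 zettabytes estimated for 2013 would grow to roughly 7.2 zettabytes in 2015 and 14.4 zettabytes in 2017.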

When the initiative was announced March 29, 2012, John Holdren, assistant to the president and director of the White House Office of Science and Technology Policy, compared it to government investments in information technology that led to advances in supercomputing and the creation of the Internet. The initiative promises to transform the ability to use big data for scientific discovery, environmental and biomedical research, education and national security, Holdren says.

Currently, much of the data being generated is available only to a select few. “Data are sitting out there in labs, in tens of thousands of labs across the country, and only the person who developed the database in that lab can actually access the data,” says Suzanne Iacono, deputy assistant director for the National Science Foundation (NSF) Directorate for Computer and Information Science and Engineering.

The NSF leads the government effort, and Iacono also serves as the co-chair of the Big Data Senior Steering Group created by the White House Office of Science and Technology Policy.

“‘Big data’ is an unfortunate buzzword because it just focuses on one aspect of what we’re truly working on. It focuses on the volume of data. The heterogeneity of data is probably an even bigger problem. How you interoperate, how you federate all of these datasets that currently exist, all in their own silos, is just a huge untapped resource. If we could figure out how to do that, it would be great for science, for education, for economic prosperity and for the health and well-being of the country,” Iacono declares.

Iacono predicts that the sharing of information will lead to major benefits. “We truly believe that there is this promise of transforming science and engineering. We’re moving from an era of hypothesis-driven discovery to data-driven discovery,” she states.

Typically, a scientist begins with a theory and then experiments and gathers information to test the assumption; but with data mining, scientists can form the initial theory based on the vast amounts of data available across disciplines, Iacono explains. She cites the example of Walmart, which mined its own transaction data to discover that beer sells better when placed near diapers. “That is not something that a scientist probably would have hypothesized because they seem so disparate. Those two things seem conflictual in some ways. But now we can have all of this data available to us, so it puts us at a higher level, a higher starting point. Now that I can mine the data and see some patterns that I would not have expected, I can start hypothesizing about what’s going on.”
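The kind of pattern mining Iacono describes can be sketched in a few lines of Python. The example below is not the method any retailer actually used; it applies one common measure from market-basket analysis, called lift, to a handful of invented shopping baskets. Lift compares how often two items appear together with how often they would appear together if purchases were independent, so values well above 1 flag pairs worth hypothesizing about. The function name `pair_lift` and all of the basket data are hypothetical.

```python
from collections import Counter
from itertools import combinations


def pair_lift(transactions):
    """Return {(item_a, item_b): lift} for each pair seen together at least once.

    lift = P(a and b) / (P(a) * P(b)); a value above 1 means the pair
    co-occurs more often than independent purchases would predict.
    """
    n = len(transactions)
    item_counts = Counter()
    pair_counts = Counter()
    for basket in transactions:
        items = sorted(set(basket))  # de-duplicate and fix pair ordering
        item_counts.update(items)
        pair_counts.update(combinations(items, 2))
    return {
        (a, b): (count / n) / ((item_counts[a] / n) * (item_counts[b] / n))
        for (a, b), count in pair_counts.items()
    }


# Hypothetical baskets, invented purely for illustration.
baskets = [
    ["beer", "diapers", "chips"],
    ["beer", "diapers"],
    ["milk", "bread"],
    ["diapers", "beer", "milk"],
    ["bread", "chips"],
    ["milk", "bread", "chips"],
]

lifts = pair_lift(baskets)
# List pairs from most to least strongly associated.
for pair, lift in sorted(lifts.items(), key=lambda kv: kv[1], reverse=True):
    print(pair, round(lift, 2))
```

In this toy data the beer and diapers pair ranks first, which is the sort of unexpected signal a researcher could then turn into a hypothesis and test.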

For about a year before the initiative was unveiled, the senior steering group was hammering out a big data national strategy and plan. The strategy consists of four parts: basic science and engineering; cyber infrastructure development, which will give big data scientists and engineers the tools and techniques they need; education and work force development; and competitions and prizes, which are designed to expand the pool beyond researchers who normally would submit proposals. “What we’re doing with the solicitation is developing the new algorithms and the new techniques that will be used by industry five or 10 years down the road,” Iacono says. “These are really out there, frontier-thinking kinds of ideas that no one is using today. We believe there will be startups and patents and all kinds of good things that will allow industry to benefit from thinking about their data as a resource and really using it.”

To boost basic science and engineering, the NSF has teamed with the National Institutes of Health (NIH) to award a series of contracts and grants designed to develop new tools and methods that will take advantage of large dataset collections, accelerating progress in science and engineering research and innovation.

The two agencies received more than 600 proposals in response to a solicitation announced the same day the White House unveiled the governmentwide initiative. “We were asking for two types of proposals. One is small proposals, meaning smaller amounts of money because there are just one or two investigators involved. Then we have mid-scale projects, which are more team-oriented, more interdisciplinary, so there might be four or five researchers working together from different disciplines,” Iacono explains.

The first eight awardees were announced in early October. “What we’re doing now is putting all the small proposals through a rigorous merit review process. We expect we’ll be able to announce the awards for the small competition sometime late winter or early spring,” she adds. “We had dedicated up to $25 million total over the end of fiscal year 2012 and fiscal year 2013 for the whole kit and caboodle in response to that solicitation.”

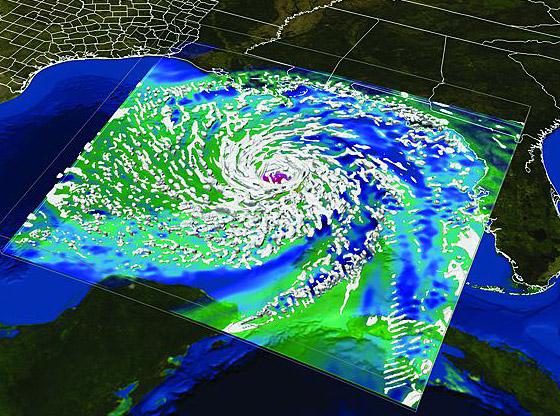

For cyber infrastructure development, the NSF has launched EarthCube, an effort to foster greater collaboration among the geographical, biological and cybersciences. “They’re taking a community-driven, bottom-up approach to trying to federate their databases that are now kind of siloed and stand-alone across broader areas of research so that they can ask bigger research questions,” Iacono reports. EarthCube seeks transformative concepts and approaches to create integrated data management infrastructures across the geosciences. The program aims to increase the productivity and capability of researchers and educators working at the frontiers of earth science.

Regarding education, Iacono explains that online college and university courses offer reams of information about how students learn. For example, schools can track who the students are talking to online, what resources they use the most and which resources they pass over. Mining that data could offer clues about science, technology, engineering and mathematics (STEM) education. “If we can better understand how people learn, it will really benefit this country when it’s dealing with STEM education. We think it’s a whole new era that’s possible because of this promise of big data,” Iacono declares.

To expand the pool of proposals, the NSF is working with NASA and the Department of Education on a series of big data challenges. Anyone can enter, and the ideas are the type that are written on the backs of napkins, Iacono says. The initial winners may be announced this month, and in the next leg of the competition, they will be required to demonstrate working code.

Participants in the governmentwide initiative include the departments of Defense, Homeland Security, Energy, Veterans Affairs, and Health and Human Services, along with the National Security Agency, NASA, the National Archives and Records Administration, National Endowment for the Humanities and the U.S. Geological Survey. All have their own programs and contracts as part of the national plan. “At the launch, we made the down payment. We said, this is what these agencies are planning, but that’s just the beginning of what we intend to do over the next few years,” Iacono says.

When it comes to big data, government and industry face the same challenges and can benefit from the same solutions, according to some experts. “Government is pressed with understanding the real business intelligence of mass amounts of data just as industry is pressed. The goal for government is not to require unique solutions but to apply the tools, techniques and technologies of commercial information technology to our problems. In that respect, the same solutions—with some tweaks here and there—should work for government and industry,” explains Jill Singer, chief information officer for the National Reconnaissance Office.

Singer co-authored a white paper on the intersection between big data and cloud computing for the AFCEA Cyber Committee. The other co-author is Jamie Dos Santos, a National Security Telecommunications Advisory Committee presidential appointee.

Singer and Dos Santos posit in the white paper that cloud computing is well-suited to help organizations deal with the vast amounts of data being generated daily. “This is a fantastic way in leveraging technology to deliver what the agencies need,” Dos Santos contends. “They’re going to have to go this way. They’re going to have to learn to deal with big data in cloud environments, whether they decide from a classified point of view to build the cloud environments themselves, or to partner with the private sector.”

The experts agree that the vast volume of data is not the only challenge. Training the work force to take advantage of big data also presents major hurdles. “If we do not train the work force, this opportunity will go by the wayside,” Iacono concludes.
