Robots Can Learn by Playing Copycat with Humans

Robots may one day learn to perform complex tasks simply by watching humans accomplish those tasks. That ability will allow people without programming skills to teach artificial intelligence systems to conduct certain functions or missions.

Teaching artificial intelligence systems or robots usually requires software engineers. Those programmers normally interview domain experts on what they need the machines to do and then translate that information into code, explains Ankit Shah, a graduate student in the Department of Aeronautics and Astronautics (AeroAstro) and the Interactive Robotics Group at Massachusetts Institute of Technology (MIT).

Shah and his fellow MIT researchers, Shen Li and Julie Shah, who is also an associate professor, are researching ways to teach robots to perform tasks simply by observing humans do them first, essentially cutting out the software programmers as middlemen. Ideally, the domain experts would both teach the robots and then critique and correct any errors as they attempt to perform assignments.

“We want to empower the domain experts to directly program autonomous systems rather than have software engineers act as translators between experts and the systems,” Shah adds. “Currently whenever we want to deploy robots, we have this extensive elicitation phase where we try to talk with experts who want to see these systems deployed, get their opinions and then somehow the software engineer translates these opinions into code, but that results in a lot of mistakes and frequently, it is hard to come up with sound specifications.”

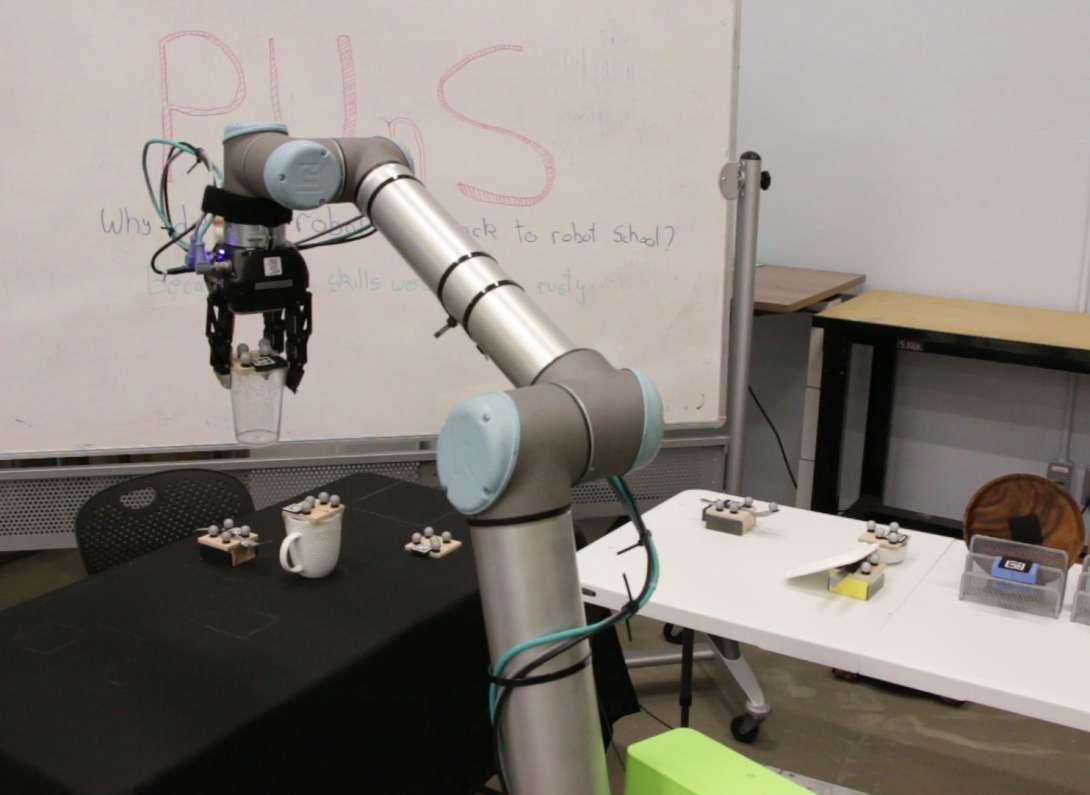

The group recently published research describing their methods for teaching a robotic arm to set a dinner table—a seemingly simple job for humans but one that requires a number of confusing rules and complex steps for a robot to understand and execute.

An MIT press release explains that the researchers compiled a data set with information about how eight objects (a mug, glass, spoon, fork, knife, dinner plate, small plate and bowl) could be placed on a table in various configurations. A UR10 robotic arm from Universal Robots first observed human demonstrations of setting the table with the objects. Then, the researchers tasked the arm with automatically setting a table in a specific configuration in real-world experiments and in simulation.

Robots generally do well at planning tasks in situations with well-defined specifications that describe the function the robot needs to fulfill, considering its actions, environment and end goal. Without those well-defined specifications, though, they struggle.

Setting a table by watching humans do it presents a variety of uncertainties, the press release explains. Items must be placed in certain spots, depending on the menu and where guests are seated, and in certain orders, depending on an item’s immediate availability or social conventions. Current approaches to robot planning cannot deal with such uncertain specifications.

“It might seem simple, but when we’re learning by watching other people do it, we’re identifying segments in terms of breaking it down into the different objects that need to be placed, and we also recognize some of the other behaviors like ordering,” Shah notes. “We know that some objects that will be on the bottom need to be placed before the objects that will be stacked on top of them. These are the very type of temporal properties that we wanted to target.”

Rather than relying on defined specifications, the researchers developed a software system known as PUnS, which stands for Planning With Uncertain Specifications. It is built on linear temporal logic (LTL), a formal language that enables robotic reasoning about current and future outcomes. According to the press release, the researchers defined templates in LTL that model various time-based conditions, such as what must happen now, what must eventually happen, and what must happen until something else occurs.
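To make the three kinds of time-based conditions concrete, here is a minimal Python sketch that checks them against a finite sequence of table states. It is an illustration only, not the researchers' templates or the PUnS code: the helper names (`placed`, `now`, `eventually`, `until`) and the representation of a state as a set of object names are assumptions made for this example.

```python
from typing import Callable, FrozenSet, Sequence

# Assumption for this sketch: a state is the set of objects already on the table,
# and a trace is the sequence of states observed during one demonstration.
State = FrozenSet[str]
Prop = Callable[[State], bool]


def placed(obj: str) -> Prop:
    """Proposition that is true in any state where `obj` is on the table."""
    return lambda state: obj in state


def now(p: Prop, trace: Sequence[State]) -> bool:
    """'What must happen now': p holds in the first state of the trace."""
    return len(trace) > 0 and p(trace[0])


def eventually(p: Prop, trace: Sequence[State]) -> bool:
    """'What must eventually happen': p holds in at least one state."""
    return any(p(state) for state in trace)


def until(p: Prop, q: Prop, trace: Sequence[State]) -> bool:
    """'What must happen until something else occurs':
    p holds in every state before the first state where q holds,
    and q does hold at some point (strong until over a finite trace)."""
    for state in trace:
        if q(state):
            return True
        if not p(state):
            return False
    return False


def no_small_plate(state: State) -> bool:
    """The small plate has not been placed yet."""
    return "small plate" not in state


# A demonstration that stacks the items bottom-up.
good_trace = [
    frozenset(),
    frozenset({"dinner plate"}),
    frozenset({"dinner plate", "small plate"}),
    frozenset({"dinner plate", "small plate", "bowl"}),
]

# A demonstration that places the small plate before the dinner plate.
bad_trace = [
    frozenset(),
    frozenset({"small plate"}),
    frozenset({"small plate", "dinner plate"}),
]

print(eventually(placed("bowl"), good_trace))
print(until(no_small_plate, placed("dinner plate"), good_trace))
print(until(no_small_plate, placed("dinner plate"), bad_trace))
```

The `until` check encodes an ordering rule of the kind Shah describes: the small plate stays off the table until the dinner plate is down. The first demonstration satisfies it and the second does not.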

The system essentially allows the robot to hold a belief, such as that in order to accomplish task A, property B must happen first. “The belief represents the probability that a particular candidate specification is the correct specification. Our system allows for the beliefs to be expressed as a probability distribution over logical formulas in LTL,” Shah says. “There is a family of properties for which if you want to verify a property, you have to look at the history of states that have been used to get to that, so that is a temporal element.”

To succeed, the robot had to weigh many possible placement orderings, even when items were purposely removed, stacked or hidden. The system made no mistakes over several real-world experiments, and only a handful of mistakes over tens of thousands of simulated test runs.

“We are trying to model the task as a belief for different tasks the robot can perform. As an example, if we have three specifications that say that the dinner plate needs to be placed before the small plate. That’s one of the formulas. A second formula is that the small plate should be placed before the bowl, and the third formula says that the dinner plate needs to be placed before the bowl,” Shah says. “None of these three are complete because the true specification is the dinner plate comes first, the small plate comes second, and the bowl comes last. But if you’re trying to satisfy all three together, you actually end up performing the correct specification. That is what the PUnS system is about.”
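Shah's three-formula example can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the PUnS planner: the probabilities attached to each formula are made up for the example, and the helper names `before` and `satisfied_mass` are hypothetical. The sketch scores every possible placement order by the total probability of the formulas it satisfies and picks the highest-scoring one.

```python
from itertools import permutations
from typing import Callable, List, Tuple

Order = Tuple[str, ...]
Formula = Callable[[Order], bool]


def before(first: str, second: str) -> Formula:
    """Formula that holds when `first` is placed earlier than `second`."""
    return lambda order: order.index(first) < order.index(second)


# The belief: candidate formulas paired with the probability that each one
# is the correct specification. The numbers are illustrative assumptions.
belief: List[Tuple[str, Formula, float]] = [
    ("dinner plate before small plate", before("dinner plate", "small plate"), 0.5),
    ("small plate before bowl", before("small plate", "bowl"), 0.25),
    ("dinner plate before bowl", before("dinner plate", "bowl"), 0.25),
]


def satisfied_mass(order: Order) -> float:
    """Total probability of the formulas in the belief that `order` satisfies."""
    return sum(prob for _, formula, prob in belief if formula(order))


objects = ("bowl", "small plate", "dinner plate")

# Score every possible placement order and keep the best one.
best_order = max(permutations(objects), key=satisfied_mass)

print(best_order, satisfied_mass(best_order))
```

Only one of the six possible orders satisfies all three formulas: dinner plate, then small plate, then bowl. That matches the quote: no single formula pins down the full sequence, but satisfying all of them together does.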

Shah says they chose setting a table because it was easy to find people capable of demonstrating the tasks. “We had to look for a task, which was simple enough that students would be familiar and could perform it correctly, but at the same time, it had to have the complexities that we wanted to model, especially the temporal complexities.”

He suggests the learning technique may be useful for a variety of activities or missions. “We’re looking at flight missions, and we were looking at disaster response scenarios and then simple robotics tasks, which involve multiple steps of manipulation, like setting a dinner table.”

The research on flight missions is still being reviewed, so he is not able to provide details. But he suggests that robotic systems used for manufacturing also might benefit. “PUnS enables us to consider tasks with specifications known only as beliefs and not marked with certainty. You can also think of manufacturing where you have to complete a product assembly, and there are multiple steps involved and multiple subcomponents. This would allow you to learn a policy that will necessarily complete all the subcomponents before you go ahead and finish the final assembly.”

Robots following defined routes present another possibility. “You may want to set a patrol route, which goes through certain waypoints before coming back home,” he offers.

It’s too early to know whether the learning technique might eventually be applied to robots on the battlefield. “It’s unclear right now because PUnS is only the specification part. What we’re trying to do is to expand the envelope of the tasks that can be presented in a way that can be planned by the robots, but rapidly planning for those tasks is still very much a robotics challenge,” Shah offers. “We don’t have very strong theoretical guarantees on whether we can complete any task within a certain amount of time, but right now, PUnS expands the ability to express or to encode more types of tasks.”

The next step might be to find ways for robots to modify or expand on their beliefs. Right now, the expert performs a task successfully “and then PUnS kicks in and uses that belief or specification to come up with the final policy.”

But there is no ideal way for the expert to modify the system’s behavior apart from providing more demonstrations. “So, one of the immediate next steps that we’ll be looking into is how we can … allow the robot to actually increase its certainty or its belief, to refine the belief. Refining specifications is something that I’m currently looking into,” Shah says.

Adding audio direction is another possibility. For example, the expert could demonstrate how to set one place at the table and then simply tell the robot to do the same thing for the other seats at the table.
