How the Hashtag Is Changing Warfare

On the eve of last year’s U.S. presidential election, two computational social scientists from the University of Southern California published an alarming study that went largely unnoticed in the flood of election news. It found that for a month leading up to the November vote, a large portion of users on the social media platform Twitter might not have been human.

The users were social bots: computer algorithms built to automatically produce content and interact with people on social media, emulating human users and trying to alter their behavior. Bots are used to manipulate opinions and advance agendas, all part of the increasing weaponization of social media.

“Platforms like Twitter have been extensively praised for their contribution to democratization of discussions about policy, politics and social issues. However, many studies have also highlighted the perils associated with the abuse of these platforms. Manipulation of information and the spreading of misinformation and unverified information are among those risks,” write study authors Alessandro Bessi and Emilio Ferrara in First Monday, a peer-reviewed, open-access journal covering Internet research.

Analyzing bot activity leading up to the election, the researchers estimated that 400,000 bots were responsible for roughly 3.8 million tweets, or about one-fifth of the entire political conversation on Twitter in the weeks before Americans voted. People unwittingly retweeted bots at the same rate as they retweeted other humans, which quickly obscured where the content originated, Bessi and Ferrara reported.

Social media manipulation is fast becoming a global problem. The Islamic State of Iraq and the Levant (ISIL) exploits Twitter to send its propaganda and messaging out to the world and radicalize followers. In Lithuania, the government fears that Russia is behind elaborate long-standing TV and social media campaigns that seek to rewrite history and justify the annexation of parts of the Baltic nation—much as it had done in Crimea.

Effective tactics to identify, counter and degrade such social media operations will not emerge from current U.S. military doctrine. Instead, they will come from computational social science journals and technology blogs.

What we already know is that bots are quick, easy and inexpensive to create. All it takes is watching a free online tutorial to learn how to write the code or, alternatively, shelling out a little cash to buy some from a broker. Companies such as MonsterSocial sell bots for less than 30 cents a day. Even popularity is for sale: In 2014, $6,800 could buy a million Twitter followers, a million YouTube views and 20,000 likes on Facebook, according to a Forbes article.

Bots can be good or bad. Not all bots are devious, and not all bot posts are manipulative. Advanced bots harness artificial intelligence to post and repost relevant content or engage in conversations with people. Bots are present on all major social media platforms and are often used in marketing campaigns to promote content. Many repost useful content to user accounts by searching the Internet more deeply and quickly than people can.

Now for some of the bad. Bots spoof geolocations to appear as if they are posting from real-world locations in real time. When users receive social media messages promising more followers or an alluring photo, typically sent from an account with a friend connection, chances are it is the work of a bot. The growing algorithmic sophistication of bots makes it increasingly difficult for people to separate fact from fiction. The technology is advancing at record speed and outpacing the algorithms that companies such as Twitter develop to fight it.

Reinforcements are on the way. Experts at the Defense Advanced Research Projects Agency (DARPA), Indiana University Bloomington and the University of Southern California are among those racing to develop better algorithms that identify malevolent bots. Solutions range from crowdsourcing to detecting nonhuman behavioral features to graph-based methods, such as those Ferrara and colleagues review in the 2016 Communications of the ACM article “The Rise of Social Bots.” Although some attribution methods come bundled with social media analytics packages, the lion’s share are open source and implemented in free, open source programming languages such as Python and R, which can ingest social media feeds for analysis.
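To make “detecting nonhuman behavioral features” concrete, here is a minimal Python sketch of a rule-based scorer. It assumes a hypothetical `Account` record already built from an ingested feed; the four red flags (posting volume, retweet share, follower ratio and timing regularity) and every threshold are illustrative placeholders, not the features or cutoffs used by DARPA, Indiana University, USC or any published detector.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List, Optional


@dataclass
class Account:
    """Hypothetical per-account summary built from an ingested social media feed."""
    handle: str
    account_age_days: int
    tweet_count: int
    retweet_count: int          # how many of tweet_count are retweets
    followers: int
    friends: int                # accounts this account follows
    post_gaps_sec: List[float]  # seconds between consecutive posts


def timing_regularity(gaps: List[float]) -> Optional[float]:
    """Coefficient of variation of posting gaps; values near 0 mean clockwork timing."""
    if len(gaps) < 2 or mean(gaps) == 0:
        return None
    return pstdev(gaps) / mean(gaps)


def bot_score(acct: Account) -> int:
    """Count how many illustrative red flags an account raises (0 to 4).

    All thresholds below are hypothetical placeholders, not published cutoffs.
    """
    flags = 0
    tweets_per_day = acct.tweet_count / max(acct.account_age_days, 1)
    if tweets_per_day > 50:                     # volume few humans sustain
        flags += 1
    if acct.tweet_count and acct.retweet_count / acct.tweet_count > 0.9:
        flags += 1                              # almost no original content
    if acct.followers < 0.1 * max(acct.friends, 1):
        flags += 1                              # follows many, followed by few
    regularity = timing_regularity(acct.post_gaps_sec)
    if regularity is not None and regularity < 0.1:
        flags += 1                              # suspiciously regular schedule
    return flags


# Two made-up accounts: an ordinary user and a high-volume amplifier.
accounts = [
    Account("@newsfan", 2000, 4000, 1000, 300, 250, [3600, 120, 86400, 900]),
    Account("@amplifier_01", 30, 3000, 2950, 12, 4800, [60, 60, 61, 60, 59]),
]
for a in accounts:
    print(a.handle, bot_score(a))  # prints "@newsfan 0", then "@amplifier_01 4"
```

Hand-set rules like these are easy for a bot operator to evade, which is why published detectors generally feed many such features into a trained machine learning classifier rather than relying on fixed cutoffs.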

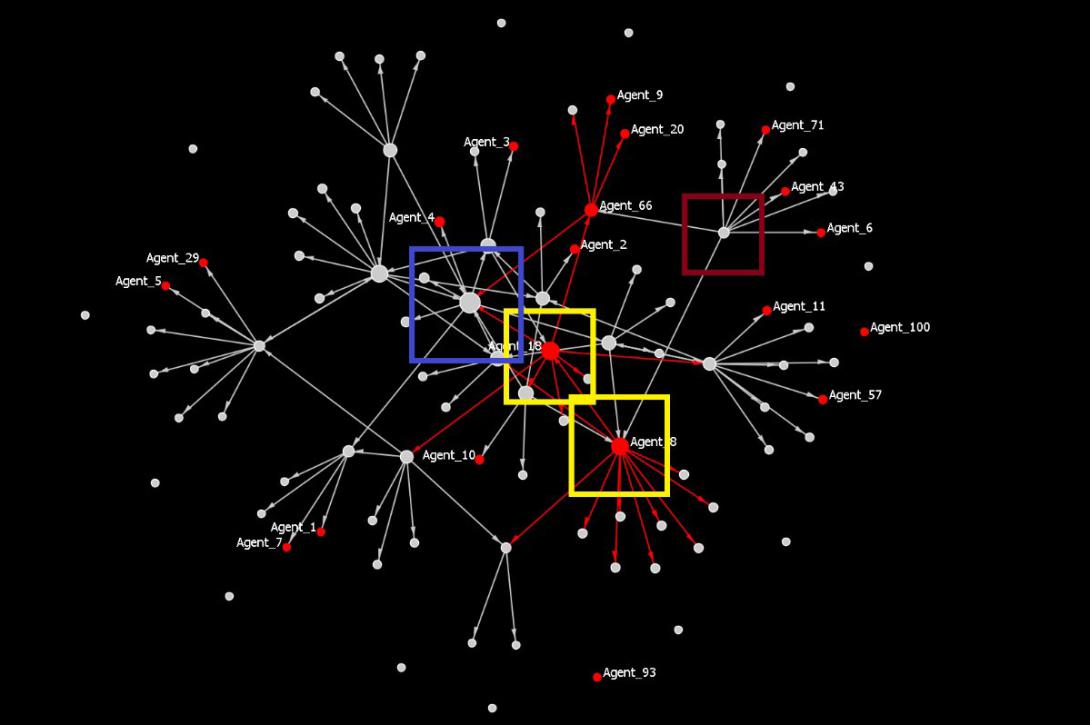

While identifying a fake account run by a bot is fairly easy, identifying its creator, its controller and its purpose is a real chore. A social science subfield called social network analysis (SNA) may help solve this problem. SNA uses linear algebra and graph theory to quantify and map relational data. These methods can reveal whether bots are acting to elevate certain key actors in a network or aligning with certain human subgroups online. Tools or code that collect social media data and build large interaction networks can be used to separate humans from bots and to identify the causes bots aim to influence.
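The linear algebra behind this kind of analysis can be sketched in a few lines of Python. This hypothetical example treats retweets as weighted, directed edges (who retweeted whom), computes PageRank, a standard eigenvector-based centrality, by power iteration, and then measures how much each human account’s share of that centrality depends on a set of suspected bots. The account names, edge weights and the choice of PageRank are assumptions for illustration; the list of suspected bots would come from an upstream detection step.

```python
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]


def pagerank(edges: Dict[Edge, float], nodes: List[str],
             damping: float = 0.85, iters: int = 100) -> Dict[str, float]:
    """PageRank by power iteration on a weighted, directed retweet graph.

    An edge (src, dst) with weight w means src retweeted dst w times, so
    endorsement (rank) flows from the retweeter to the retweeted account.
    """
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    out_weight = {v: 0.0 for v in nodes}
    for (src, _dst), w in edges.items():
        out_weight[src] += w
    for _ in range(iters):
        # Accounts that retweet no one spread their rank evenly over all nodes.
        dangling = sum(rank[v] for v in nodes if out_weight[v] == 0)
        new = {v: (1 - damping + damping * dangling) / n for v in nodes}
        for (src, dst), w in edges.items():
            new[dst] += damping * rank[src] * w / out_weight[src]
        rank = new
    return rank


def amplification(edges: Dict[Edge, float], suspected_bots: Set[str]) -> Dict[str, float]:
    """Gain in each human account's share of human-held rank due to bot edges."""
    everyone = sorted({v for pair in edges for v in pair})
    humans = [v for v in everyone if v not in suspected_bots]
    human_edges = {(s, t): w for (s, t), w in edges.items()
                   if s not in suspected_bots and t not in suspected_bots}

    def human_share(rank: Dict[str, float]) -> Dict[str, float]:
        total = sum(rank[h] for h in humans)
        return {h: rank[h] / total for h in humans}

    with_bots = human_share(pagerank(edges, everyone))
    without_bots = human_share(pagerank(human_edges, humans))
    return {h: with_bots[h] - without_bots[h] for h in humans}


# Hypothetical retweet counts: (retweeter, retweeted) -> times.
retweets = {
    ("alice", "bob"): 1, ("bob", "alice"): 1,
    ("carol", "alice"): 1, ("carol", "bob"): 1,
    ("eve", "alice"): 1,
}
bots = {f"bot{i}" for i in range(1, 5)}
for b in bots:
    retweets[(b, "eve")] = 10  # every suspected bot repeatedly boosts eve

gains = amplification(retweets, bots)
for account, gain in sorted(gains.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{account:6s} {gain:+.3f}")
```

In this toy graph, eve, the only account the bots retweet, is the only one whose share rises; every organically connected account loses a little. Normalizing over human accounts keeps the two runs comparable even though the bot nodes themselves hold some rank.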

At the same time, action is needed at the tactical level to support the use of social media analytics and SNA to detect bots and enemy influence operations in the information environment.

Clearly, bad bots can eliminate the need for kinetic deterrence to coerce or manipulate. They can do the dirty work instead.

Identifying, countering and degrading bot armies that spread misinformation requires new, battle-ready tactics. Advanced computational social science methods must be combined with social media and network analysis tools to wipe these armies out. Had such measures been deployed in the months leading up to the November presidential election, the United States might now know whether Russia meddled in it. And knowing can be half the battle.

Adam B. Jonas is an intelligence analyst supporting the U.S. Army Training and Doctrine Command’s G-27 Network Engagement team. He also teaches advanced network analysis and targeting (ANAT) classes to Army officers. The views expressed are his own.
