Next Level Human-Robot Interaction

Social by nature, humans interact in multiple ways: through voice, vision and touch. As robotic capabilities improve to reflect these human qualities, human-robot interaction will feature more complex multimodal functions.

However, the vision for the future of robots, where they are accepted by human collaborators as equal members of a human-robot team, all striving toward a shared goal, is not quite a reality, researchers note.

First, akin to today’s apps on a cellphone, the user interface is key—and lacking.

“The design of multi-modal human-machine interfaces is critical to the successful design of a collaborative robot,” researchers said in the recent study, From Caged Robots to High-Fives in Robotics: Exploring The Paradigm Shift From Human-Robot Interaction to Human-Robot Teaming in Human-Machine Interfaces.

The study was published in the Journal of Manufacturing Systems, Volume 78, February 2025, Pages 1-25, by Filippo Sanfilippo and Mohammad Hamza Zafar, from the Department of Engineering Sciences at the University of Agder in Norway, and Timothy Wiley and Fabio Zambetta, from the School of Computing Technologies, Royal Melbourne Institute of Technology, Australia.

In addition, the perception that robots are tools to be used by humans, rather than equal teammates, persists. It must be overcome not only to improve coexistence but also to optimize sophisticated cooperation.

At the center of the debate is the evolution from mere human-robot interaction to human-robot collaboration, ultimately evolving into true human-robot teaming, or full co-understanding, the researchers said.

“It is no surprise that the nature of how humans work collaboratively with other humans has a heavy influence on how humans collaborate with embodied robots, using similar forms of multimodal communication—audio, visual and physical,” the researchers noted.

Famed science fiction writer Isaac Asimov outlined several “laws” of robotics, including that a robot may not injure a human or, through inaction, allow a human to come to harm. In present-day robotic development, human safety has long served as a guiding principle for the ethical operation of robots and for fostering trust in the design of robot behaviors and interactions, the researchers stressed.

“Fundamentally, at all levels of autonomy, the top priority of interaction is that it must be safe to work with the robot,” the researchers stated.

With simpler levels of human-robot interaction, human safety was assured by keeping the robot’s workspace separate from the human’s. But in a more complex human-robot environment, any rules for safety must apply to side-by-side work.

For robots to work in parallel with humans, “safety must govern the collaborative decision-making processes and undertaking of shared duties between humans and robots,” the researchers noted.

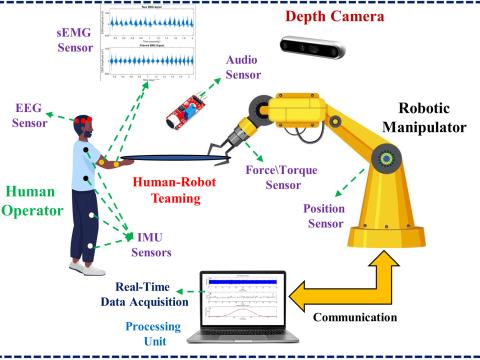

In addition, sophisticated robotic interfaces must enable effective interactions for successful side-by-side work—especially in noisy and complex environments. A combination of machine learning, sophisticated sensors, actuation, robot perception, predictive modeling and human intention detection is needed, the scholars said.

The sensor ecosystem, especially, has evolved from simple computer vision and lidar sensor combinations to include multimodal sensors that capture data from multiple channels, such as cameras for vision, microphones for auditory input and environmental sensors for capturing physical parameters of the environment.

“Sensors for human-robot teaming are the sensory backbone of collaborative robotic systems,” the researchers emphasized. “As this field continues to advance, the development and integration of increasingly sophisticated sensors will play a pivotal role in realizing the full potential of human–robot teams across industries such as healthcare, manufacturing, search and rescue, and autonomous transportation. ... Their significance lies in their capacity to amalgamate data from disparate sensor modalities, thereby facilitating astute decision-making and adept coordination with both humans and fellow robots.”

For instance, modern communication sensors are needed for wireless real-time data interchange, networking and team coordination through Wi-Fi, Bluetooth, 5G and someday 6G connectivity.

“Effective communication forms the bedrock of human–robot teaming, and these sensors engender the seamless flow of information between robots and human team members, fostering coordinated actions and shared situational awareness,” the researchers noted.

Position and localization sensors, including GPS, inertial measurement units and encoders, are crucial to providing the precise location and orientation of robots within their teaming environment, supporting any tasks that involve navigation, path planning and spatially coordinated collaboration.

Human-robot teaming also requires sensors that provide object recognition through advanced computer vision and image processing to “see” and track objects within a robot’s field of view. Also, human biometric sensors, including heart rate monitors, electroencephalography (EEG) sensors and other wearable health devices, can be integrated with robots to assess the well-being and physiological states of their human counterparts.

“Monitoring human biometrics assumes primordial significance in applications where human safety and well-being occupy the forefront,” the researchers observed. “These sensors empower robots to take proactive measures in response to human stress, fatigue, or health-related conditions, ensuring the well-being of the human team members.”

In tight spatial conditions, safety sensors, including bumpers, laser scanners and emergency stop buttons, can be used to detect and respond to unforeseen obstacles, hazards or precarious conditions. “They are indispensable in upholding the safety of human team members and precluding incidents, collisions, or accidents,” the scholars continued. “These sensors endow the robot with the capability to react promptly to unanticipated situations, thereby ensuring a secure working environment.”

Where needed, environmental sensors monitor physical parameters of the environment, such as temperature, gas concentration, humidity and atmospheric pressure. “In teaming scenarios, these sensors are particularly significant, especially when robots are deployed in environments marked by diversity or hazard,” the scholars shared. “They inform decision-making, ensure safety, and aid in adapting to fluctuating environmental conditions.”
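To make the idea concrete, here is a minimal Python sketch of how readings from several of the sensor types described above might be combined into one safety-first decision. It is an illustration only, not the researchers’ method: the `SensorSnapshot` type, the `speed_scale` function and every threshold are hypothetical assumptions, and the values are not drawn from the study or from any safety standard.

```python
from dataclasses import dataclass

# All thresholds are illustrative assumptions, not values from the study
# or from any robot safety standard.
STOP_DISTANCE_M = 0.5       # closer than this to a human: stop
SLOW_DISTANCE_M = 1.5       # closer than this to a human: slow down
HIGH_HEART_RATE_BPM = 120   # treated here as a rough sign of human stress
GAS_LIMIT_PPM = 50.0        # hypothetical hazardous gas concentration


@dataclass
class SensorSnapshot:
    """One hypothetical reading from each sensor type (names are assumed)."""
    estop_pressed: bool      # safety sensor: emergency stop button
    bumper_contact: bool     # safety sensor: bumper
    nearest_human_m: float   # safety sensor: laser scanner distance
    heart_rate_bpm: int      # biometric sensor: wearable heart rate monitor
    gas_ppm: float           # environmental sensor: gas concentration


def speed_scale(s: SensorSnapshot) -> float:
    """Return a speed multiplier between 0.0 (stop) and 1.0 (full speed)."""
    # Hard safety conditions override everything else.
    if s.estop_pressed or s.bumper_contact:
        return 0.0
    if s.nearest_human_m < STOP_DISTANCE_M or s.gas_ppm > GAS_LIMIT_PPM:
        return 0.0

    # Softer conditions only reduce speed; the most cautious one wins.
    scale = 1.0
    if s.nearest_human_m < SLOW_DISTANCE_M:
        scale = min(scale, 0.5)
    if s.heart_rate_bpm > HIGH_HEART_RATE_BPM:
        scale = min(scale, 0.25)
    return scale
```

In this sketch, the hard stop conditions are checked before anything else, which mirrors the researchers’ point that safety comes first at every level of autonomy. A real system would rely on certified safety hardware and validated limits rather than a single function like this one.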


Such sensor-equipped robots will be of little use, however, if humans cannot trust their machine counterparts.

Ali Shafti, head of Human-Machine Understanding at Cambridge Consultants, a part of Capgemini Invent, ventured that we humans are not quite ready.

“Working with machines still feels too much like a one-sided relationship where robotic systems are more like tools than teammates,” Shafti stated in an October 2 report. This, in turn, is keeping us from leveraging full autonomy.

“Machines are rarely trusted to work in complete isolation,” he said. “Technological, infrastructural, ethical, safety, or regulatory limitations require humans to remain an active part of the task, or to at least keep autonomous systems in check. These constraints mean the shift to full automation often remains out of reach, and we are left working in a state of semi-autonomy.”

For those seeking to move to the next level of teaming and autonomy, Shafti advised organizations to adopt and improve autonomy collaboration skills. He also noted that life-like robots may be more accepted by their human counterparts.

“The next wave of human-robot teaming builds on the legacy of cobots, which introduced mechanical versatility and safety features,” he explained. “Humanoid [robots] extend this value by adding a human-like form factor and interaction model, enabling them to operate in environments designed for people without costly redesigns.”

With humanoid robots using tools built for human hands and communicating through familiar gestures and language, Shafti sees greater acceptance and reduced friction, which could foster intuitive collaboration between humans and robots. And with trust and familiarity, productivity can be boosted, depending on the level of human-centric interactions.

Workforce shortages and operational pressures are pushing further adoption, Shafti continued. But businesses need solutions that integrate into existing human-centric environments without major operational or redevelopment costs.

“Humanoids and physically intelligent systems meet this need by blending into current workflows, reducing barriers to deployment while enabling deeper human collaboration,” Shafti stated.

He is already seeing companies that harness human-robot collaboration achieve operational gains, especially in the manufacturing sector. Examples include transitioning from several full-time operators per shift to one or two operators who manage multiple autonomous systems while maintaining the same level of productivity.

“Rather than being reactive, the human-machine understanding-enabled future is about the emergence of a reliable, proactive technological teammate who augments rather than replaces his talented humans,” Shafti said.

He reassured us humans that, even in fully collaborative human-machine environments, humans will continue to provide context, nuances, knowledge, collaboration and adaptability that machines lack.
