Robots Ride Brain Waves of Innovation

Scientists are on the verge of breakthroughs in developing technology for controlling robots with brain waves. Advances might one day allow intuitive and instantaneous collaboration between man and machine, which could benefit a wide array of fields, including the military, medicine and manufacturing.

The possibilities for brain-controlled robotic systems are practically limitless. Experts suggest the capability could allow users to operate unmanned vehicles, wheelchairs or prosthetic devices. It could permit robots to lift hospital patients or carry wounded warriors to safety. Factory robots could more efficiently crank out jet fighters or virtually any other product.

Researchers at the Massachusetts Institute of Technology (MIT) recently announced an advance that allows humans wearing an electroencephalogram (EEG) cap, which detects and records the electrical activity of the brain, to immediately correct a robot performing the rather simple function of sorting objects. If the robot is about to make a wrong choice and the human recognizes the mistake, then the brain instantly sends out signals known as error-related potentials (ErrPs), and that is all it takes for the robot to correct its error. “As you watch the robot, all you have to do is mentally agree or disagree with what it is doing,” says Daniela Rus, director of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), in a statement. “You don’t have to train yourself to think in a certain way. The machine adapts to you and not the other way around.”

MIT built the feedback system on machine-learning algorithms that can classify brain waves within 30 milliseconds, and sometimes within 10. The team is developing the system using a humanoid robot named Baxter from Rethink Robotics, the company led by former CSAIL director and iRobot co-founder Rodney Brooks.
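The correction loop described above, in which a robot makes a binary choice and reverses it when the observer's brain signals an error, can be illustrated with a minimal Python sketch. This is not MIT's classifier: the threshold rule, the microvolt values and every name here (`detect_errp`, `final_choice`, `ERRP_THRESHOLD_UV`) are hypothetical stand-ins for the trained machine-learning algorithms the article mentions.

```python
from typing import Sequence, Tuple

# Hypothetical decision threshold in microvolts, chosen only for illustration.
ERRP_THRESHOLD_UV = 4.0


def detect_errp(epoch_uv: Sequence[float],
                threshold_uv: float = ERRP_THRESHOLD_UV) -> bool:
    """Stand-in classifier: flag an error-related potential when the mean
    absolute amplitude of the EEG epoch exceeds a fixed threshold.
    A real system would use a trained classifier instead."""
    if not epoch_uv:
        return False
    mean_abs = sum(abs(sample) for sample in epoch_uv) / len(epoch_uv)
    return mean_abs > threshold_uv


def final_choice(initial_choice: str,
                 options: Tuple[str, str],
                 epoch_uv: Sequence[float]) -> str:
    """Keep the robot's initial choice unless the observer's EEG epoch is
    classified as an ErrP, in which case switch to the other option."""
    if initial_choice not in options:
        raise ValueError("initial_choice must be one of the two options")
    if detect_errp(epoch_uv):
        return options[1] if initial_choice == options[0] else options[0]
    return initial_choice


if __name__ == "__main__":
    bins = ("bin_a", "bin_b")
    calm_epoch = [0.5, -0.8, 1.0]    # simulated: observer agrees
    error_epoch = [6.0, -7.5, 8.0]   # simulated: observer notices a mistake
    print(final_choice("bin_a", bins, calm_epoch))
    print(final_choice("bin_a", bins, error_epoch))
```

Because the task has only two outcomes, a single "disagree" signal is enough to determine the correction, which is part of why a simple sorting task suits this kind of feedback.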

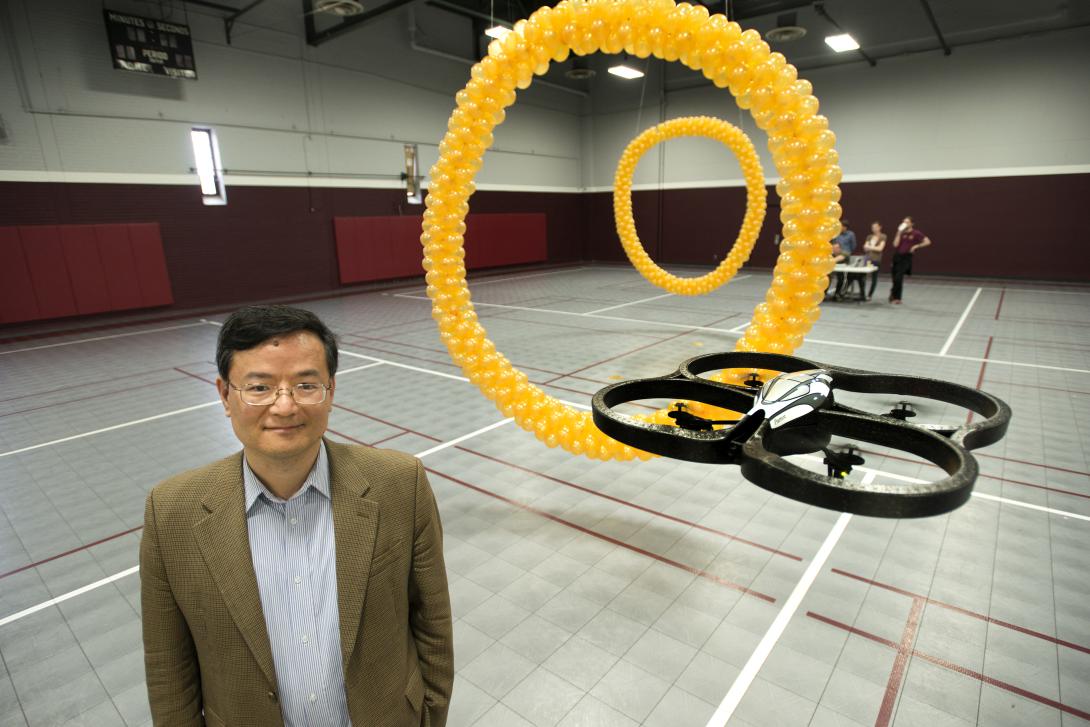

The research being done at MIT and at Boston University is funded in part by the National Science Foundation (NSF) and the Boeing Company. Boeing is focused for now on the potential for brain-controlled robots to do the heavy lifting during assembly processes. “Humans are very good in thinking, creating and understanding processes, but robots can be very good at handling heavy pieces,” says Branko Sarh, a Boeing research and technology senior technical fellow who focuses on automation. “The idea would be that we would, in the future, if everything goes fine, use these technologies in the factory.”

In the next couple of years, the MIT-Boston University team will be required to demonstrate to Boeing that a brain-controlled robot can help in the assembly of a plane. The system will need to pick up a large fitting and move it to the wing box, a structural component from which the plane’s wings extend. “If the robot makes a wrong turn or bumps the fitting against the wing box, you will see a peak in the brain wave activity, and those signals will be used to correct the activities of the robot,” Sarh reveals.

Asked when the technology might be mature enough for use, he says it depends on how much money, effort and energy are invested.

“If you’re putting enough money behind it, in a few years, this could be a reality,” Sarh says, suggesting that the medical community may benefit first.

He adds that maturing the technology will require spending “millions and millions.” Sarh politely declines to discuss how much Boeing is spending on the research.

The NSF supports the MIT-Boston University effort with a grant through its Graduate Research Fellowship Program. Howard Wactlar, acting director of the NSF’s Division of Information and Intelligent Systems, says brain wave research has broad implications and could result in thought-controlled devices for soldiers, surgeons and others.

Wactlar also indicates that scientists may be at a pivotal point for advances in the next year or so. “I think we will see some major milestones in the development of surface interpretation of brain signals,” he says, explaining that the science is now well understood. “Once we solve the science, engineers can eventually figure it out.”

The MIT-Boston University endeavor complements work being done as part of the National Robotics Initiative, which began in 2011. The program focuses largely on collaborative robots, also known as cobots. The NSF, the Defense Department, NASA, the Agriculture Department and the National Institutes of Health all participate. “One of the biggest areas is what we call human-robotic interaction—how the robot and human work together, how they communicate with one another,” Wactlar explains. “One does not necessarily think of a robot as a human substitute but as an asset, an assistant device.”

As an example, he cites someone working in space or underwater who needs a third hand to “fix or repair or adjust something.” Under such conditions, other forms of communication, such as verbal commands, are impossible. “They may really need somebody else to hold something in place or to help orient an object in a particular way. We call that kind of robot an asymmetric-dependent robot, where it has to work cooperatively with your other limbs,” he says, explaining that the robotic third hand does not need to be attached to the user.

Furthermore, future soldiers might be more attuned to the myriad electronic devices for situational awareness, communication and other capabilities. “The modern warfighter is strapped with all kinds of equipment and devices,” Wactlar says, adding that he does not know how anyone manages to monitor it all. “Being able to have more brain interaction with these various devices is very valuable [considering] the complex tasks that warfighters are up against these days.”

Wactlar suggests that future warfighters could even be connected to computerized weapons. “A human trying to sight a rifle is probably less accurate than a camera and a computer attached to a rifle,” he says. “You could look at an object and convey to the rifle that you want to direct it toward something you are looking at. Who knows what the next version of a gun will be?”

The suggestion sounds like science fiction, but science fiction today often becomes fact tomorrow. The U.S. Army Research Laboratory is also exploring the possibility of using brain waves to control wearable devices and robotic systems. Army researchers envision a combat helmet capable of reading the brain’s faint electrical signals. If those signals can be detected, classified and communicated, then they may be used to control military systems or to communicate with man and machine.

Using EEG caps, however, does have its limitations. The caps have to be trained to a particular user each time, in part because they will not be positioned exactly the same every time. Also, every person’s brain waves are different. For example, if two people try to come up with a word to describe an object, their brains will have that word stored in different sections and retrieve it using different pathways. And if those same two people try to throw a ball at the same spot on a wall, their brains will accomplish the task in different ways, Wactlar reports.

The EEG cap for the Baxter experiment used 48 electrodes. By comparison, brain-controlled robotic prosthetics can depend on 200 electrodes implanted directly into the brain, where signals are stronger. “That’s how they control an artificial arm—by interpreting signals closer to the surface of the brain,” Wactlar says.

Some researchers are developing EEG caps with 10,000 electrodes for improved performance, but the extra sensors come with a downside, he adds. “If you’re working with 10,000 nodes, it would already be 50 watts of power that’s required, so that’s not so easy to carry around,” Wactlar states. “The research needs to go quite a bit further in terms of interpreting those attenuated signals, and by combining many more of them, being able to make some sense of what it reads.”
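Wactlar's figures imply a rough per-electrode power budget, which the short Python sketch below works out. It assumes, purely for illustration, that power scales linearly with electrode count; the article does not say this, and the function names are hypothetical.

```python
def per_electrode_watts(total_watts: float, electrodes: int) -> float:
    """Average power per electrode, given a total budget."""
    if electrodes <= 0:
        raise ValueError("electrodes must be positive")
    return total_watts / electrodes


def scaled_power_watts(per_electrode: float, electrodes: int) -> float:
    """Total power for a cap, ASSUMING power scales linearly with
    electrode count (an illustrative assumption, not a sourced fact)."""
    return per_electrode * electrodes


if __name__ == "__main__":
    # Figures quoted in the article: 50 watts for 10,000 electrodes.
    per_node = per_electrode_watts(50.0, 10_000)
    print(f"Per electrode: {per_node * 1000:.1f} mW")

    # Under the linear assumption, a 48-electrode cap like the one in
    # the Baxter experiment would draw a small fraction of a watt.
    print(f"48-electrode cap: {scaled_power_watts(per_node, 48):.2f} W")
```

On these numbers each electrode accounts for about 5 milliwatts, so under the same assumption a 48-electrode cap would need roughly a quarter of a watt. That gap is what makes a 10,000-electrode cap hard to carry around.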

The engineers, he says, will need to overcome power issues and other challenges. He is confident they can do it. “I think we’ll start to see [EEG] caps that can be quickly trained and usable for controlling other devices,” he predicts. “It’s possible we’ll see some results in a short enough time frame to make a difference.”

Past work in EEG-controlled robotics has required training humans to think in a way that computers recognize. For example, an operator might have to look at one of two bright light displays, each of which corresponds to a different task for the robot to execute. But the training process and the act of modulating thoughts can be taxing, particularly for people who supervise tasks demanding intense concentration.

“You can’t expect a warfighter to start thinking very hard about what he or she wants the robot to do. They still need to be fully aware of their own situation, their own context. They can’t devote all of their brainpower to controlling a robot,” Wactlar points out. “We need to really see the limits of what the human is capable of.”
