Navy Artificial Intelligence Aids Actionable Intelligence

To ease the load on weary warfighters inundated with too much information, U.S. Navy scientists are turning to artificial intelligence and cognitive reasoning technologies. Solutions that incorporate these capabilities could fill a broad array of roles, such as sounding the alarm when warfighters are about to make mistakes.

The well-worn phrase “the right data to the right person at the right time” is almost a cliché in the U.S. Defense Department, but it is a mantra that researchers at the Navy Center for Applied Research in Artificial Intelligence (NCARAI), Washington, D.C., take seriously. According to Alan Schultz, NCARAI director, the challenge for scientists is to extract the relevant information, turning raw data into actionable intelligence so that individuals do not have to look at every bit of data flowing in. “One of the biggest challenges we have is this deluge of raw data. We have lots of unmanned systems, lots of new sensors from satellites down to underwater sensors, and they’re producing a ton of data,” Schultz says. “In many cases, the data can’t be looked at. There aren’t enough people sitting in front of all the video that comes in.”

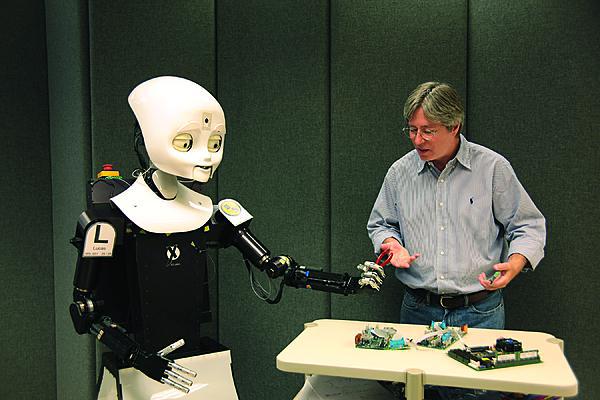

Despite the center’s name, researchers there study both artificial intelligence and cognitive science, the study of the mind and its processes. The two fields are closely related. “Some of our big priorities relate to understanding human reasoning, so it can be applied to creating better decision aids and intelligent systems. Emphasis is on reducing warfighter overload, to create tools that will allow them to focus on the task at hand and not on using the tools themselves,” Schultz says.

NCARAI scientists have had considerable success in predicting and preventing errors. The research initially focused on “post-completion errors,” in which a person forgets a final step after the main goal of a task has been accomplished. Schultz illustrates with common examples: forgetting to replace the gas cap after filling the car’s tank, leaving an original document in the copier, or leaving a bank card in an old-style automated teller machine. The research has since broadened to other kinds of errors, such as those involving checklists, distractions and interruptions. “Even really well-trained warfighters—or any expert—still can make mistakes. It may not happen often, but when it does, the results can be catastrophic,” Schultz says. “The source of these errors is usually fatigue, or they get overloaded. They get interrupted, for example. That’s a big problem.” He cites one National Transportation Safety Board investigation, which found that a pilot had been interrupted while going through his checklist and failed to set the flaps properly before takeoff. “The jet was lost, and a lot of people died because of this one error,” Schultz recalls.

It turns out that the designs of many military systems tend to induce relatively high rates of post-completion errors; however, by studying how human memory works, scientists came up with a solution. “Using models of human memory plus modern devices like eye trackers, you can have a workstation tracking where humans are looking at the display, and if they aren’t looking at a relevant thing close to the right time, we can predict they’re going to forget that step, and we can put up a just-in-time alert,” Schultz says. An alert that flashed continually would not work, he adds, because users simply would learn to ignore it over time.

“This was basic research for a few years, and the scientist actually built a model that turned out to be very robust and replicated it across many different tasks, many tactical interfaces of a computer and, with the same model, was able to very often predict when the person was about to make a mistake. If they were up to, for example, a 75 percent or 80 percent chance of forgetting to do something, you could put up a just-in-time cue,” Schultz explains.
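The article does not describe the NCARAI model itself. As a rough illustration of the idea, the Python sketch below assumes that memory for a pending step decays as a power function of the time since the operator last looked at the relevant display element (the kind of input an eye tracker could supply). It maps that decay to a probability of forgetting with a logistic curve and raises a just-in-time cue only once that probability reaches 75 percent, the figure Schultz gives as an example. The decay form is loosely modeled on decay-based theories of memory. `PendingStep`, `p_forget`, `steps_needing_cue` and every parameter value are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List

# Illustrative parameters only; these are NOT values from the NCARAI model.
DECAY = 0.5        # power-law decay rate of memory activation (hypothetical)
THRESHOLD = -1.5   # activation at which recall is a coin flip (hypothetical)
NOISE_S = 0.4      # spread of the logistic recall curve (hypothetical)
CUE_AT = 0.75      # cue once P(forget) reaches 75%, per the article's example


@dataclass
class PendingStep:
    name: str
    last_fixation: float  # time (s) the operator last looked at this step's display element


def activation(now: float, last_seen: float) -> float:
    """Memory strength decays as a power function of time since the last glance."""
    elapsed = max(now - last_seen, 1e-3)  # clamp to avoid log(0)
    return -DECAY * math.log(elapsed)


def p_forget(now: float, last_seen: float) -> float:
    """Logistic mapping from activation to the probability the step is forgotten."""
    a = activation(now, last_seen)
    p_recall = 1.0 / (1.0 + math.exp(-(a - THRESHOLD) / NOISE_S))
    return 1.0 - p_recall


def steps_needing_cue(steps: List[PendingStep], now: float) -> List[str]:
    """Return only the steps whose predicted risk of being forgotten is high."""
    return [s.name for s in steps if p_forget(now, s.last_fixation) >= CUE_AT]


if __name__ == "__main__":
    steps = [PendingStep("check fuel state", 95.0), PendingStep("set flaps", 0.0)]
    print(steps_needing_cue(steps, now=100.0))
```

With these made-up parameters, a step glanced at 5 seconds ago is judged safe, while one not looked at for 100 seconds triggers a cue. Because the cue fires only when predicted risk is high, the operator is spared the constant alert that users would learn to ignore.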

During one study, participants were deliberately distracted or interrupted to induce them to make mistakes. “We reduced the error rates from 14 percent down to 2 percent,” Schultz says. The results, he adds, are directly applicable to the military, particularly in cases where warfighters are scanning for data.
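Put in relative terms, the reported drop of 12 percentage points means that roughly six of every seven errors were avoided:

```latex
\frac{14\% - 2\%}{14\%} = \frac{12}{14} = \frac{6}{7} \approx 86\%
```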

For example, a single person might someday control multiple unmanned vehicles, scanning a monitor to keep abreast of the situation. Schultz describes a scenario in which one unmanned vehicle must be steered clear of a threat zone at the same time that others are sending in full-rate video of potential targets. With so many tasks demanding attention at once, the human controller easily could miss something critical. “Well-trained people scan across the display, always checking things even when they’re busy doing other things. But under high workloads, that tracking or scanning behavior can break down. This technology has been shown to work in those situations.”

The NCARAI team is preparing to begin experiments more focused on military-relevant systems, but the technology so far has proved reliable under a broad array of circumstances. “Everyone on the operational side that we’ve briefed about this technology is very gung ho about it. This is technology that within a year or so could be fielded, with the application of appropriate research funds,” Schultz states.

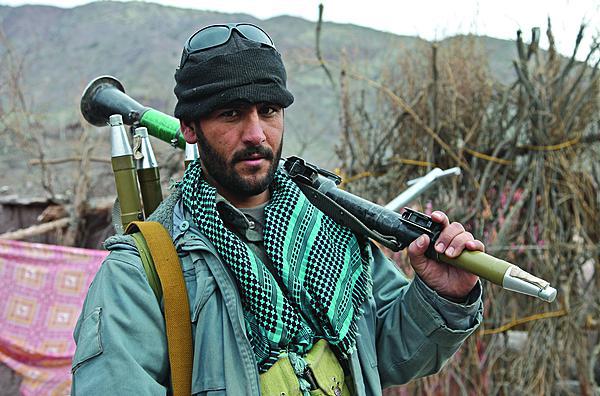

Other research areas may lead to robots and unmanned vehicles intelligent enough to identify individual weapon systems such as rocket-propelled grenade launchers. For example, the Trinocular Structured Light System is designed to provide real-time protection for moving convoys against small-arms ambushes. NCARAI scientists intend to develop a 3-D machine vision system that recognizes weapons by their shape, so that visible weapons can be detected before they are fired. The imaging system consists of three steerable cameras and a special projector that emits a fine, irregular pattern of bright spots. The cameras detect and process each spot to produce a 3-D point in space. The system is expected to work at ranges greater than 100 meters (328 feet) and to scan most of the visible area.
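The article does not spell out how the system reconstructs each spot. The standard principle for turning a detected spot into a 3-D point is triangulation: the same spot appears at slightly different image positions in cameras separated by a known baseline, and that offset (the disparity) fixes its range. The Python sketch below assumes an idealized, calibrated and rectified pair of cameras; the function names and numbers are hypothetical, and a real three-camera system would involve more views and calibration steps. The second function suggests why ranges beyond 100 meters are demanding: depth error grows with the square of the range.

```python
from typing import Tuple


def triangulate_rectified(u_left: float, v: float, u_right: float,
                          f_px: float, baseline_m: float,
                          cx: float, cy: float) -> Tuple[float, float, float]:
    """Recover (X, Y, Z) in meters for one spot seen by a rectified camera pair.

    Assumes both cameras share focal length f_px (pixels) and principal point
    (cx, cy), and that rectification puts the spot on the same image row v.
    """
    disparity = u_left - u_right  # horizontal shift of the spot between views
    if disparity <= 0:
        raise ValueError("spot must have positive disparity in a rectified pair")
    z = f_px * baseline_m / disparity  # range from similar triangles
    x = (u_left - cx) * z / f_px       # back-project through the left camera
    y = (v - cy) * z / f_px
    return x, y, z


def depth_error(z_m: float, f_px: float, baseline_m: float,
                disparity_err_px: float = 1.0) -> float:
    """Approximate range error for a given disparity error: dZ = Z^2 / (f * B) * dd."""
    return z_m ** 2 / (f_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    print(triangulate_rectified(1020.0, 500.0, 1000.0, f_px=2000.0,
                                baseline_m=1.0, cx=960.0, cy=540.0))
    print(depth_error(100.0, f_px=2000.0, baseline_m=1.0))
```

With an assumed 2,000-pixel focal length and 1-meter baseline, a spot at 100 meters shows a disparity of only 20 pixels, and a one-pixel matching error shifts the estimated range by about 5 meters.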

“It allows you to collect very fast 3-D range images and to identify things pretty much in real time,” Schultz reports. “The applications for this include the ability to identify things like rocket-propelled grenade launchers potentially even faster than humans might. You might have a system like this on an unmanned platform, or even on a manned personnel transport.”

Prototype sensors and the underlying algorithms already have been built, and the technology could transition out of the laboratory within a few years, assuming appropriate funding is available. Additionally, the technology could have much broader applications, including identifying improvised explosive devices or unexploded ordnance, as well as allowing robots to better recognize objects near their location.

NCARAI scientists also study swarm intelligence, which could provide new ways of controlling large numbers of unmanned systems. Multiple autonomous or semi-autonomous systems can provide data on the same target area from different perspectives. “If you have one vehicle looking at something, you have some information, but if you have two vehicles looking at something from different points of view, you get a lot more information,” Schultz explains.
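Schultz’s point about multiple viewpoints can be illustrated with a standard technique, inverse-variance weighting, which fuses independent estimates so that each counts in proportion to its precision. The Python sketch below is not the NCARAI swarm method. It assumes that two vehicles report the same target’s position in a shared 2-D frame with independent, Gaussian errors on each axis. A camera, for instance, typically locates a target well across its line of sight but poorly along it, so two vehicles looking from perpendicular directions complement each other. The function name and the numbers are hypothetical.

```python
from typing import Tuple

Vec2 = Tuple[float, float]


def fuse_estimates(pos_a: Vec2, var_a: Vec2,
                   pos_b: Vec2, var_b: Vec2) -> Tuple[Vec2, Vec2]:
    """Fuse two independent per-axis position estimates by inverse-variance weighting.

    Each axis is treated separately (diagonal covariance assumed). Returns the
    fused position and its per-axis variance, which is never larger than the
    smaller of the two input variances on that axis.
    """
    fused_pos = []
    fused_var = []
    for pa, va, pb, vb in zip(pos_a, var_a, pos_b, var_b):
        if va <= 0 or vb <= 0:
            raise ValueError("variances must be positive")
        wa, wb = 1.0 / va, 1.0 / vb  # more precise estimates get more weight
        fused_pos.append((wa * pa + wb * pb) / (wa + wb))
        fused_var.append(1.0 / (wa + wb))
    return (fused_pos[0], fused_pos[1]), (fused_var[0], fused_var[1])


if __name__ == "__main__":
    # Vehicle A is precise in x but poor in y; vehicle B, viewing at right angles, the reverse.
    pos, var = fuse_estimates((100.0, 200.0), (1.0, 25.0), (102.0, 201.0), (25.0, 1.0))
    print(pos, var)
```

In this example the fused estimate has a variance of about 0.96 on both axes, far better than either vehicle achieves on its weaker axis and slightly better even on its stronger one.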

Artificial intelligence also could help warfighters at checkpoints. By studying the speech patterns of people for whom English is not a native language, researchers have developed software that can pinpoint where a speaker comes from. Using U.S. speech patterns as an example, Schultz points out that many southerners can be identified because they pronounce the words “pen” and “pin” the same way. “You can imagine having a handheld device where someone pulls into a checkpoint, and the warfighter asks a set of questions with the person speaking into the device. The person says he’s from this village, and the device says he’s really from a clan from a different area,” Schultz explains.

The NCARAI, part of the Naval Research Laboratory, has been involved in both basic and applied research in artificial intelligence, human factors and human-centered computing since its inception in 1981. “Back in the late 1980s, early 1990s, we were pushing autonomous systems as an area that would be very important for the Defense Department, and now it is recognized as being very important. It took a while for the rest of the department to see that,” Schultz contends.

He points to broader strides in artificial intelligence, such as the “lane keeping” and collision avoidance systems now available in some commercial automobiles, to illustrate how much has changed. “A scientist is not doing his job if he is never surprised by the results,” he says.
